Google dropped Veo 3.1 this week. The new AI video model makes it even harder to tell what’s real online.

The upgrade brings better audio, sharper realism, and improved prompt accuracy. Plus, it works in both landscape and portrait formats now. So expect AI-generated videos to flood TikTok and YouTube Shorts very soon.

But here’s the real concern. These tools keep getting better while detection methods lag behind. And Google’s pushing them everywhere across its ecosystem.

What Makes Veo 3.1 Different

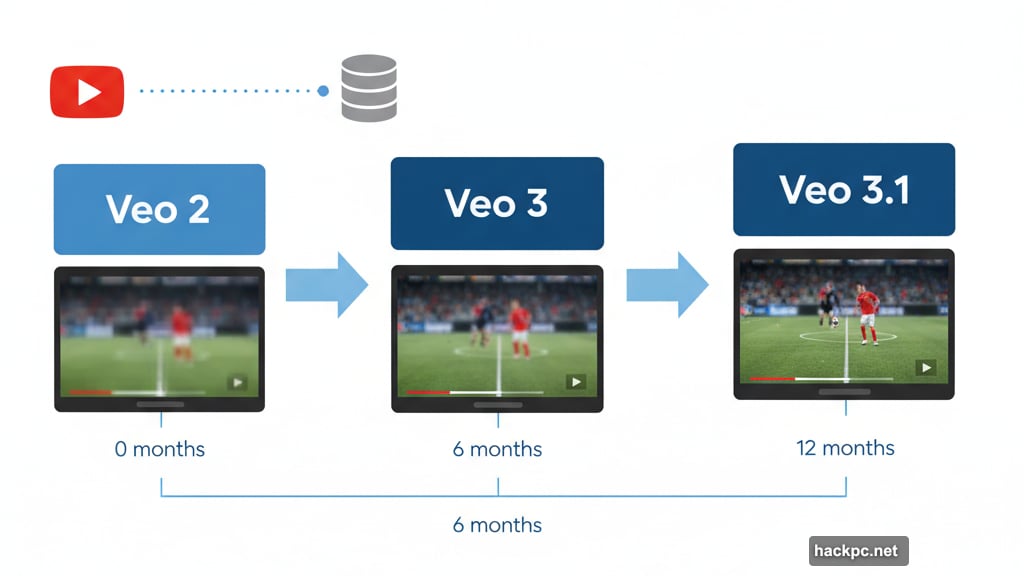

Google’s throwing its entire YouTube library at AI training. That massive video database gives them an edge over competitors. In fact, Veo jumped from version 2 to 3 in just months, showing staggering quality improvements.

Now version 3.1 arrives with several key upgrades. Better prompt adherence means fewer wasted attempts and more accurate outputs. The model sticks more closely to what you’re asking for instead of producing random interpretations.

Audio got a boost too. Veo 3 already made waves with generated sound. But 3.1 supposedly sounds even more realistic. That matters because audio sells the illusion as much as visuals do.

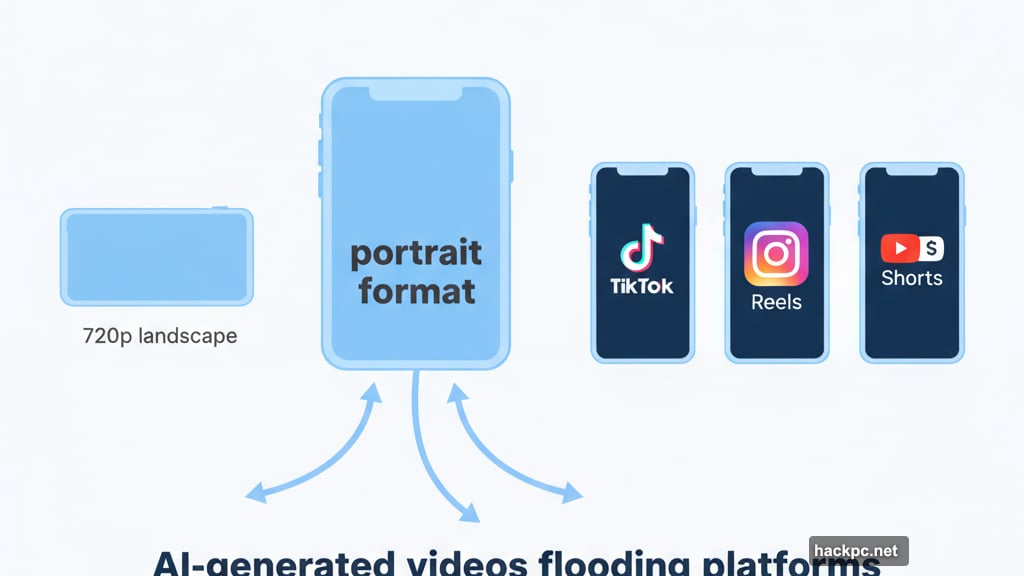

Moreover, the model now handles vertical video. Previous versions only produced 720p landscape output. That limitation kept AI videos off platforms like TikTok and Instagram Reels. Not anymore.

Portrait Format Opens Dangerous Doors

Vertical video support changes everything. TikTok dominates social media with short-form vertical content. YouTube Shorts competes directly in that space.

Google previously promised Veo integration with YouTube Shorts. The portrait format capability makes that promise realistic now. So we’ll probably see AI-generated Shorts flooding the platform soon.

Meanwhile, TikTok already struggles with misinformation and deepfakes. Adding high-quality AI video tools that actually fit the format? That’s gasoline on a fire.

Plus, vertical video costs less to produce at scale. Content farms will churn out thousands of AI clips optimized for mobile screens. Good luck telling what’s authentic.

Filmmaking Tools Get More Powerful

Veo powers Google’s Flow filmmaking tool. The 3.1 update unlocks several new capabilities there. You can now upload reference images and add custom audio simultaneously.

The Ingredients to Video feature lets you combine multiple images as references. Frames to Video uses images as starting or ending points. Both now support generated audio tracks.

But the precision editing features worry me most. Veo 3.1 can add objects to existing video while keeping everything else unchanged. Or remove elements without affecting the rest of the scene.

That means subtle manipulation of real footage. Not just creating fake videos from scratch, but editing authentic clips in ways that hide the traces of modification.

Object removal comes to Flow soon. Object addition works right now in Flow and the API. So developers can already build apps around these capabilities.

Fast Variant Cuts Costs Dramatically

Google introduced Veo 3.1 Fast alongside the standard model. The Fast variant reduces compute costs per generation. That matters when you’re paying per second of generated video through the API.
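
To make the cost argument concrete, here is a minimal sketch of the arithmetic. Both rates are hypothetical placeholders, not Google’s published prices, and the "billed unit" stands in for whatever the API actually meters. The names `Variant`, `batch_cost`, and `clips_within_budget` are made up for illustration. Amounts are kept in whole cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """A model variant with a HYPOTHETICAL price per billed unit, in cents."""
    name: str
    cents_per_unit: int


def batch_cost(variant: Variant, units_per_clip: int, clips: int) -> int:
    """Total cost in cents for generating `clips` clips of equal size."""
    return variant.cents_per_unit * units_per_clip * clips


def clips_within_budget(variant: Variant, units_per_clip: int, budget_cents: int) -> int:
    """How many whole clips fit in the budget at this variant's rate."""
    cost_per_clip = variant.cents_per_unit * units_per_clip
    return budget_cents // cost_per_clip


# Placeholder rates chosen only to show the effect of a cheaper variant.
STANDARD = Variant("standard", cents_per_unit=40)
FAST = Variant("fast", cents_per_unit=10)

if __name__ == "__main__":
    budget = 10_000      # 100.00 in currency units, expressed in cents
    units_per_clip = 8   # assumed size of one clip in billed units
    for variant in (STANDARD, FAST):
        count = clips_within_budget(variant, units_per_clip, budget)
        print(f"{variant.name}: {count} clips for the same budget")
```

Whatever the real numbers turn out to be, the shape of the result is the same: the number of clips a fixed budget buys scales inversely with the rate, so a variant at a quarter of the price yields roughly four times the output.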

Lower costs enable more experimentation. Content creators can iterate faster without budget constraints. Plus, casual users in the Gemini app probably get more Fast generations included.

However, cheaper AI video generation has downstream effects. More people will create more content at lower cost. That floods platforms with AI-generated material. Detection becomes nearly impossible at scale.

Moreover, the Gemini app accepts reference images for Veo outputs. So mobile users can snap a photo and generate video variations instantly. No specialized tools required.

The API access means third-party apps will proliferate. Expect standalone video generation apps to pop up everywhere, each one lowering the barrier to creating convincing fakes.

Racing Against OpenAI’s Sora

This release keeps Google competitive with OpenAI. Sora recently launched on iPhone with impressive quality improvements. Now Google counters with Veo 3.1 just weeks later.

The competition benefits users in some ways. Better tools. More features. Lower costs. But it also accelerates the timeline for sophisticated AI video becoming ubiquitous.

Neither company seems interested in slowing down. Instead, they’re racing to add features and expand distribution. Meanwhile, society lacks frameworks for handling widespread synthetic media.

Plus, YouTube’s massive scale amplifies the impact. Google controls both the AI tools and a dominant video platform. That vertical integration creates unique challenges for verification and trust.

OpenAI focuses on creative applications and filmmaking use cases. Google’s pushing Veo everywhere across its ecosystem. The difference in distribution strategy matters enormously.

What This Means for Online Trust

Every video you watch online now carries doubt. Is it real? Edited? Completely generated? You can’t tell anymore just by watching.

Veo 3.1’s quality makes visual inspection useless. The audio sounds authentic. The motion looks natural. Lighting and shadows behave correctly. Nothing obviously screams “fake” at first glance.

Detection tools exist but lag far behind generation capabilities. By the time verification methods catch up, models improve again. It’s an unwinnable arms race.

Moreover, most people won’t bother verifying content anyway. They’ll scroll through feeds accepting everything at face value. That creates massive opportunities for manipulation and misinformation.

Social platforms struggle with existing deepfake problems. Adding tools that generate convincing vertical video at scale? They’re not prepared for that flood.

The Skeptical Eye You’ll Need

Vertical videos on TikTok and YouTube Shorts deserve extra scrutiny now. Look for subtle inconsistencies in motion or lighting. Check whether the audio lines up with what you see on screen.

But honestly? That’s exhausting. Nobody has time to forensically analyze every clip. So most AI-generated content will pass unnoticed.

Remember that reference images enable style matching. Someone can generate videos that look like they came from specific creators. Impersonation becomes trivially easy.

Plus, precision editing lets people modify real footage subtly. Not obvious fakes. Just small changes that alter meaning or context. Those manipulations are nearly impossible to detect.

We need better solutions than individual vigilance. Platform-level verification. Mandatory watermarking. Something that scales. But those solutions aren’t in place at scale yet, and the tools keep improving.

The gap between what’s possible and what’s detectable keeps widening. And Google just made it wider with Veo 3.1.
