Photoshop added a bunch of AI features this year. Some are genuinely useful. Others feel like they exist just so Adobe can say “we have AI.”

I spent weeks testing every new generative tool Adobe crammed into Photoshop. My goal was simple: figure out which ones actually speed up real work and which ones are just marketing fluff.

Here’s what I learned, plus exactly how to use the features that don’t suck.

Generate Images Without Leaving Photoshop

Adobe’s Firefly AI now lives directly inside Photoshop. That means you can create images mid-project without bouncing between apps.

The process is straightforward. Open your project and look for the “Generate image” option in the contextual taskbar. You can also find it under Edit or by clicking the sparkle icon on the left toolbar.

Type your prompt, pick a style, and hit generate. Photoshop gives you multiple variations to choose from. Use the arrows below to tab through options.

Now, here’s the catch. Adobe makes you agree to its generative AI terms before you can use any of this. Translation: don’t make anything illegal or horrible. Adobe’s lawyers insisted on that part.

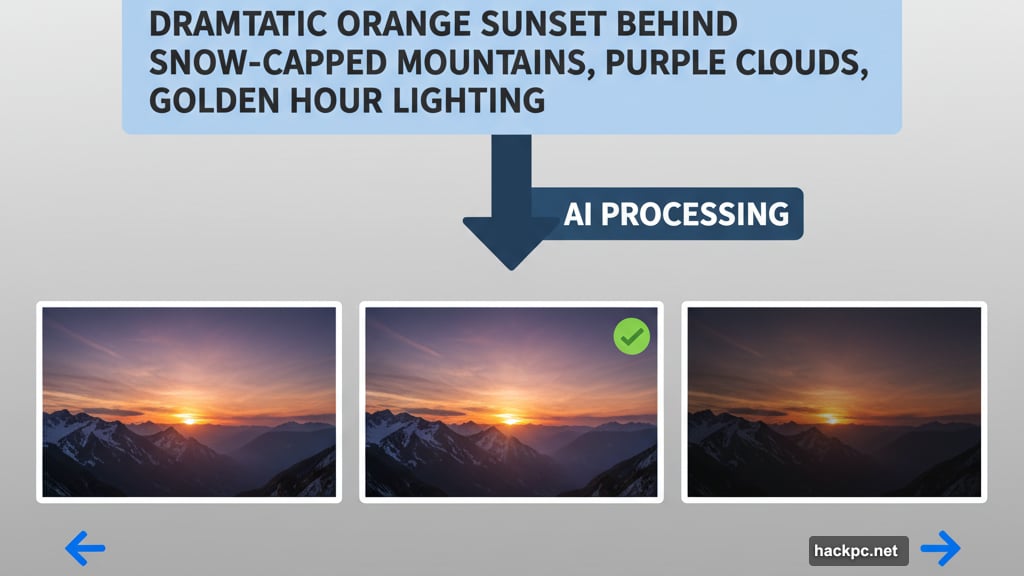

Writing Better Prompts Gets Better Results

Generic prompts produce generic images. Be specific. Put the most important elements at the beginning of your prompt.

For instance, “sunset over mountains” is weak. Try “dramatic orange sunset behind snow-capped mountains, purple clouds, golden hour lighting” instead. More detail gives the AI more direction.

If you’re not happy with the results, start over with a new prompt. Don’t waste time endlessly tweaking and regenerating. I learned this the hard way after spending 30 minutes trying to perfect one image.

Reference images help too. Upload a photo that captures the mood or composition you want. The AI uses it as a guide.

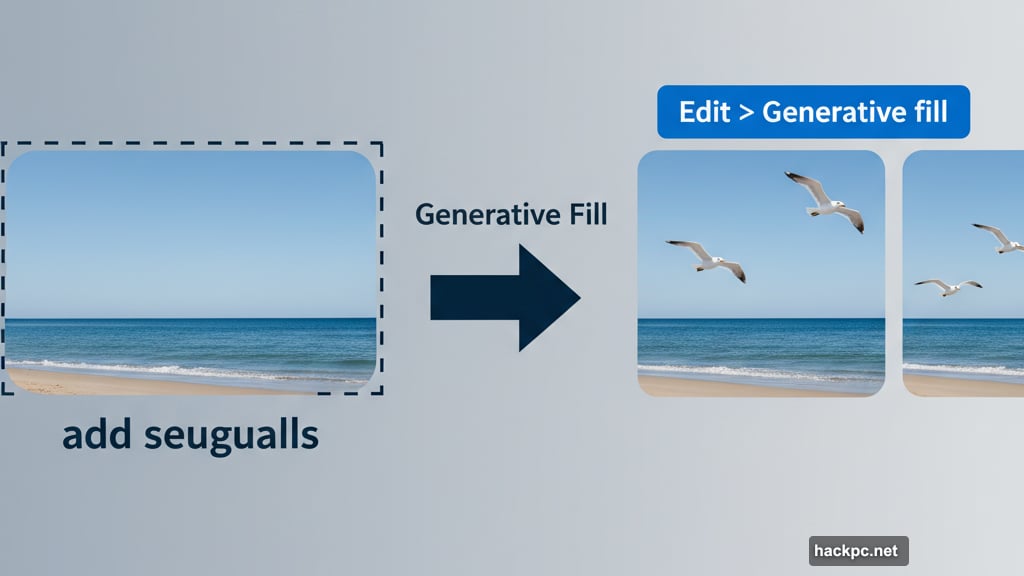

Generative Fill Actually Saves Time

This one’s legit. Generative fill lets you select part of your image, describe what you want there, and the AI fills it in.

Use the selection brush (or any selection tool) to mark where you want new elements. Then choose Edit > Generative Fill, type your prompt, and click Generate.

I used this to add seagulls to a beach photo. The AI matched the lighting and perspective perfectly. Way faster than hunting for stock photos or drawing them manually.

But generative fill works best for natural elements like clouds, plants, or simple objects. It struggles with complex technical subjects or text.

Expand Your Canvas Intelligently

Generative expand solved a problem I hit constantly. Sometimes you need a wider or taller image, and cropping it to that shape would cut off important elements.

Select the Crop tool and drag its edges past your original canvas. Then click Generate. The AI fills the new space by extending your existing image seamlessly.

You can guide it with prompts. I added more sky and sand to a beach shot by typing “blue sky and sandy beach.” The AI blended it naturally with the original photo.

This beats manually cloning and patching edges. Plus it maintains consistent lighting and texture throughout the expanded areas.

Remove Objects Like They Were Never There

Generative remove is an AI-powered eraser on steroids. It deletes objects while intelligently filling the gap.

There are two ways to use it. Select an object with the Object Selection tool, click Generative Fill, and type “remove.” Or use the Remove tool to brush over whatever you want gone.

I removed a trash can from a landscape photo. The AI reconstructed the grass and rocks behind it perfectly. No obvious patches or clone stamp artifacts.

However, it occasionally creates weird textures when removing large, complex objects. It works best on smaller distractions like power lines, signs, or photobombers.

Sky Replacement Adds Drama Instantly

This tool swaps boring skies for dramatic ones. Navigate to Edit > Sky Replacement and pick from presets like sunsets, blue skies, or “spectacular” options.

I replaced a plain white sky in a stadium photo with vivid Carolina blue. The AI automatically adjusted the lighting on the building to match the new sky.

But here’s the limitation. The effect looks best on landscapes and architecture. It can appear fake on photos with complex foreground elements or reflections.

After choosing a preset, you can manually tweak brightness and other settings. Spend a minute adjusting to make it look natural.

Generate Backgrounds for Product Shots

This feature works great for product photography. Open your image, click “remove background” in the contextual taskbar, then hit “generate background.”

I used this for mock product photos. The AI created clean, professional-looking backgrounds in seconds. Much faster than shooting multiple setups.

Solid colors and simple patterns came out fantastic. However, AI-generated cityscapes or complex scenes looked obviously fake.

For e-commerce or quick mockups, this tool delivers real value. For high-end commercial work, you’ll probably still want to shoot backgrounds properly.

Neural Filters and Other Specialty Tools

Photoshop also includes more specialized tools for specific tasks. Neural Filters (under Filter > Neural Filters) handle detailed photo editing like skin smoothing or style transfers.

The Curvature Pen tool isn’t generative AI, but it helps designers draw smooth, consistent arcs. It’s subtle but genuinely improves precision for logo work or technical illustrations.

I didn’t use these as much as the generative tools. But if your work involves frequent portrait retouching or precise vector shapes, they’re worth exploring.

Adobe keeps adding AI tools throughout the year, so expect this list to grow as new features roll out.

Which Tools Actually Matter

Not every AI feature deserves your attention. Here’s what I’d focus on first.

Generative fill and remove are the most useful for everyday work. They genuinely speed up common tasks like adding elements or cleaning up photos.

Generative expand solves a specific problem elegantly. If you frequently need to resize or extend images, this tool pays for itself quickly.

Sky replacement and generate background work well for their intended purposes. Just understand their limitations and use them appropriately.

The Firefly image generator built into Photoshop is convenient but not essential. You can access the same model through Adobe’s separate Firefly app.

The Honest Assessment

Photoshop’s AI tools performed better than I expected. Adobe clearly put real engineering work into making them useful, not just slapping “AI-powered” labels on mediocre features.

That said, these tools work best when you understand their specific use cases. Generative expand excels at resizing. Generative remove handles small distractions. Sky replacement adds drama to landscapes.

Try to use them for everything and you’ll be disappointed. Use them strategically for the right tasks and they’ll genuinely improve your workflow.

I won’t use AI for every Photoshop project. But for certain jobs, these tools now live in my regular toolkit. That’s more than I can say for most “AI-powered” software gimmicks.

The key is knowing when AI helps and when traditional tools work better. Test these features on your actual projects. You’ll quickly figure out where they fit in your process.
