Adobe just wrapped its annual Max conference. And this year’s announcements hit different.

Instead of flashy demos that never ship, Adobe launched real tools creators can use today, alongside a few previews of what's coming next. We're talking AI assistants that edit entire projects, audio generators that score videos automatically, and third-party AI models integrated directly into Photoshop. Plus, Adobe's finally building the kind of AI agents that might actually save time instead of creating more work.

Let’s break down what matters and what’s just noise.

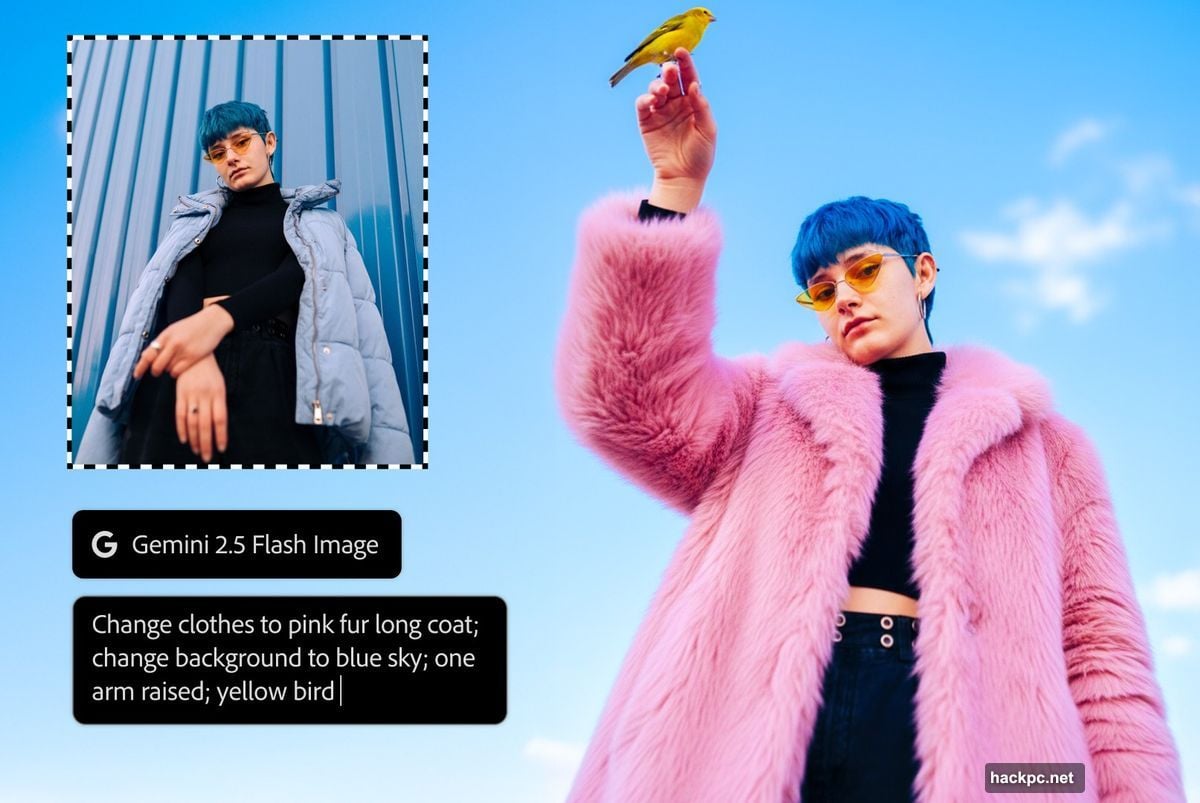

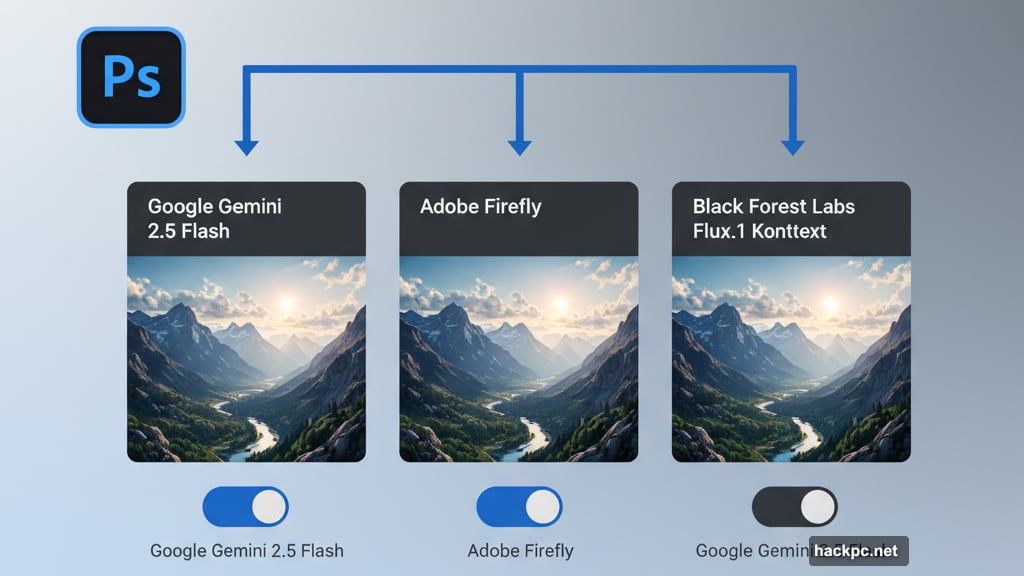

Photoshop Now Lets You Pick Your AI Model

Here’s something genuinely useful. Photoshop’s Generative Fill feature can now tap into Google’s Gemini 2.5 Flash and Black Forest Labs’ Flux.1 Kontext models.

Why does this matter? Different AI models excel at different tasks. Maybe Firefly nails landscapes but struggles with faces. Now you can run the same prompt through Adobe's own Firefly model and the two new options, then pick the best result. That's actual flexibility instead of being locked into one company's AI.

The workflow stays simple. Select an area, describe what you want, then toggle between models. Each generates different variations. So you get more options without learning new tools or switching apps.

Adobe's betting that creators want choice over simplicity. And honestly, they're probably right. Anyone serious about their work will take three decent options over a single attempt that might miss.

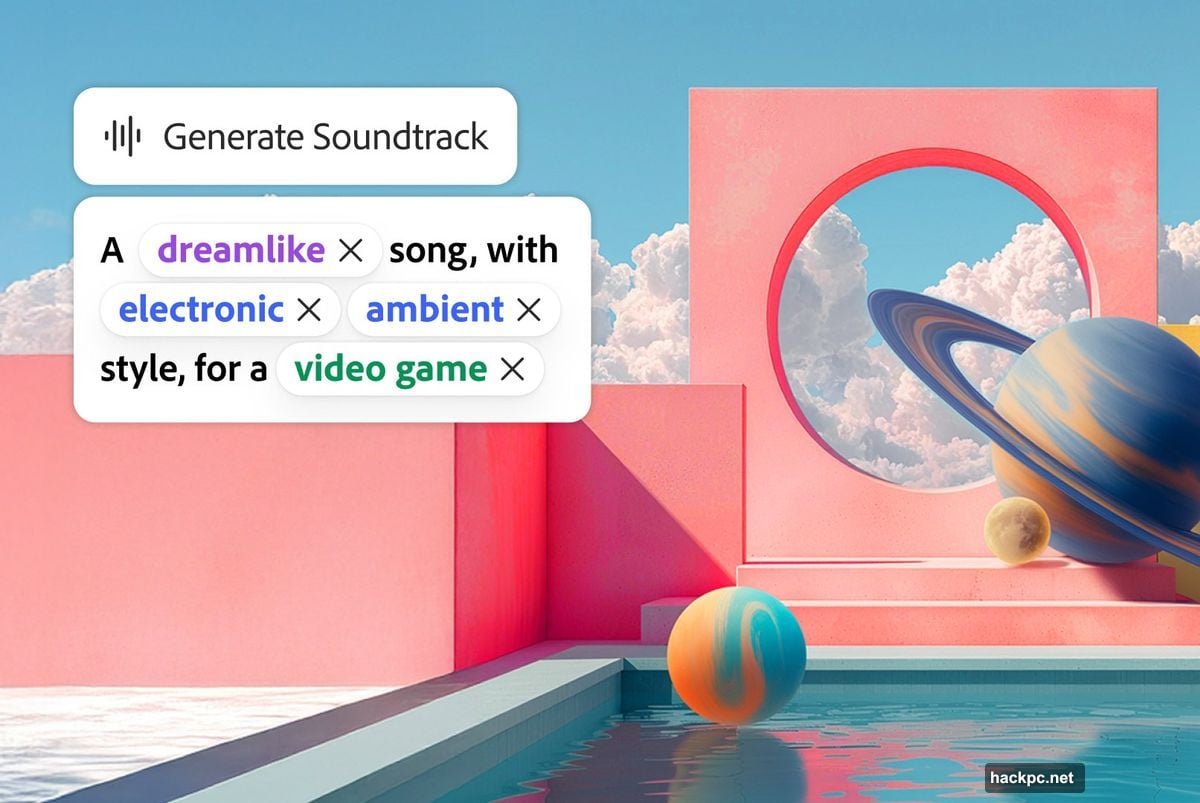

AI Audio Tools That Actually Work With Video

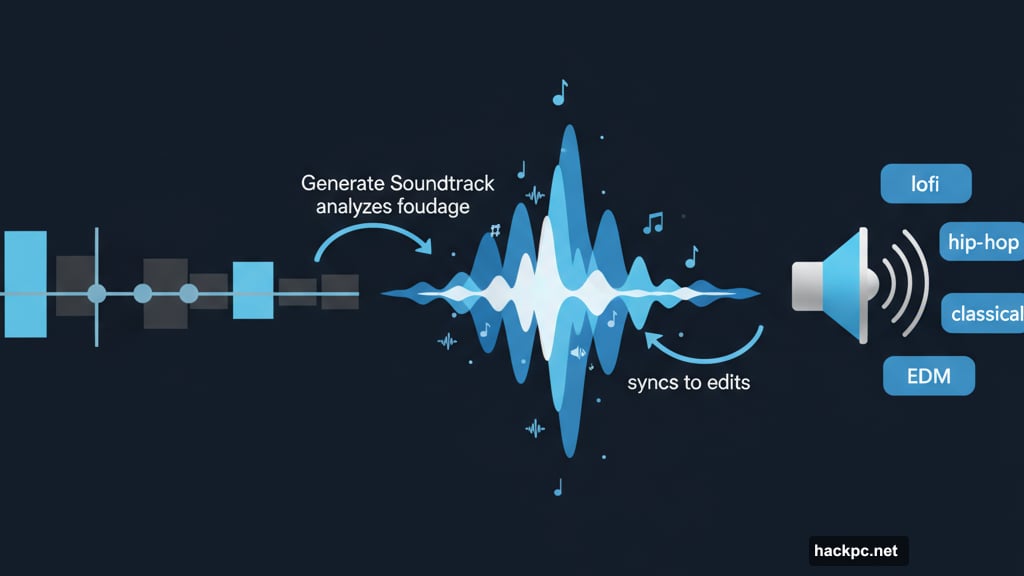

Adobe launched two audio generators at Max 2025. Generate Soundtrack and Generate Speech handle the tedious parts of video production.

Generate Soundtrack analyzes your footage and creates instrumental backing tracks that sync to your edits. You pick a style preset (lofi, hip-hop, classical, EDM) or describe the vibe in plain English. The AI analyzes your video and generates music that matches its mood and pacing.

Meanwhile, Generate Speech creates voiceovers without recording studios or expensive equipment. Provide your script, and the tool generates narration that fits your project. Both features launched in public beta inside the redesigned Firefly app.

For video creators grinding out content daily, these tools solve real problems. Scoring videos takes hours. Finding royalty-free music that actually fits is harder. So automated soundtracks that sync to your footage? That’s legitimately helpful.

Plus, the quality matters less than you’d think. Background music for social content doesn’t need Grammy-level production. It just needs to not distract from the video. And AI can absolutely clear that bar.

Adobe Express Gets an AI Assistant That Understands Design

Adobe Express now has a conversational AI assistant that edits projects through natural language. Toggle it on, describe what you want, and the AI makes the design changes without you having to find and use specific tools.

The interface replaces Express's usual homepage with a chatbot. Ask for a "fall-themed wedding invitation" or a "retro-inspired poster for a school science fair" and you get curated presets to start from. Then refine everything with plain English prompts.

During the live demo, things got weird. The presenter asked the AI to add a vampire costume to a raccoon photo. The result? A cartoon version nobody requested. She laughed it off. But that kind of unpredictability shows how far these tools still need to go.

Still, the concept makes sense. Most people don’t know design terminology or software shortcuts. They just want to make something that looks decent. An AI that translates “make this more Halloween-y” into actual design changes? That lowers the barrier significantly.

Project Moonlight Wants to Manage Your Entire Social Presence

Adobe showed off Project Moonlight, an AI agent that acts as a creative director for social media campaigns. This one's still in development but signals where Adobe's headed.

The tool integrates with your existing social channels and Adobe’s creative apps. You describe campaign ideas to the chatbot. Then Moonlight processes those concepts through Adobe’s other AI tools to generate images, videos, and social posts that match your brand voice.

Think of it as a centralized AI coordinator. Instead of jumping between apps and manually styling each asset, you tell one AI agent what you need. It handles the execution across multiple tools while maintaining consistency.

Whether this actually works remains to be seen. Managing brand voice and visual identity across platforms is genuinely hard. AI that can do it reliably would save hours of work. But AI that gets it wrong would create more problems than it solves.

Adobe's clearly betting big on AI agents that operate across its entire ecosystem. Project Moonlight represents that vision. Now we wait to see if the execution matches the promise.

The Premiere Update Nobody's Talking About

Hidden in the announcements was news about Premiere editing tools coming to YouTube’s platform. Adobe’s building a Create for YouTube Shorts hub that works inside both the new Premiere mobile app and YouTube itself.

This matters more than it sounds. Most creators edit shorts directly on their phones using basic tools. Bringing professional Premiere features to mobile and integrating them into YouTube’s platform makes those capabilities accessible where creators actually work.

YouTube Shorts is huge for reaching audiences. But editing on mobile often means compromising on quality or spending hours tweaking details. Professional tools that work natively in that workflow could genuinely improve output without adding friction.

Adobe didn’t share a launch timeline. But this partnership signals how creative software companies are adapting to where content gets made today. And spoiler: it’s mostly not on desktop workstations anymore.

What This Actually Means for Creators

Adobe's Max announcements show a company trying to make AI genuinely useful instead of just impressive. The tools that shipped at Max target real workflow problems rather than showing off technical capabilities nobody asked for.

Multi-model Generative Fill gives creators options. Automated audio tools handle time-consuming busy work. AI assistants lower barriers for people without formal training. And cross-platform integrations meet creators where they actually work.

But the real question is whether these features work consistently enough to trust. AI tools that nail it 80% of the time still require constant supervision. That’s not actually time saved. It’s just different work.

So the next few months will reveal if Adobe’s latest AI push delivers on its practical promises or joins the pile of half-baked features nobody uses. Based on what we’ve seen, there’s reason for cautious optimism. These tools target real problems. Now we’ll see if the execution holds up under actual use.
