YouTube just launched a tool to fight AI deepfakes. Sounds great. Except it only protects your face, not your voice.

The platform’s new likeness detection feature started rolling out to YouTube Partner Program members this week. It scans uploaded videos for unauthorized AI-generated copies of people’s faces. But voice cloning? That’s not covered yet.

So if someone uses AI to make you say something you never said, you’re out of luck. The tool won’t catch it.

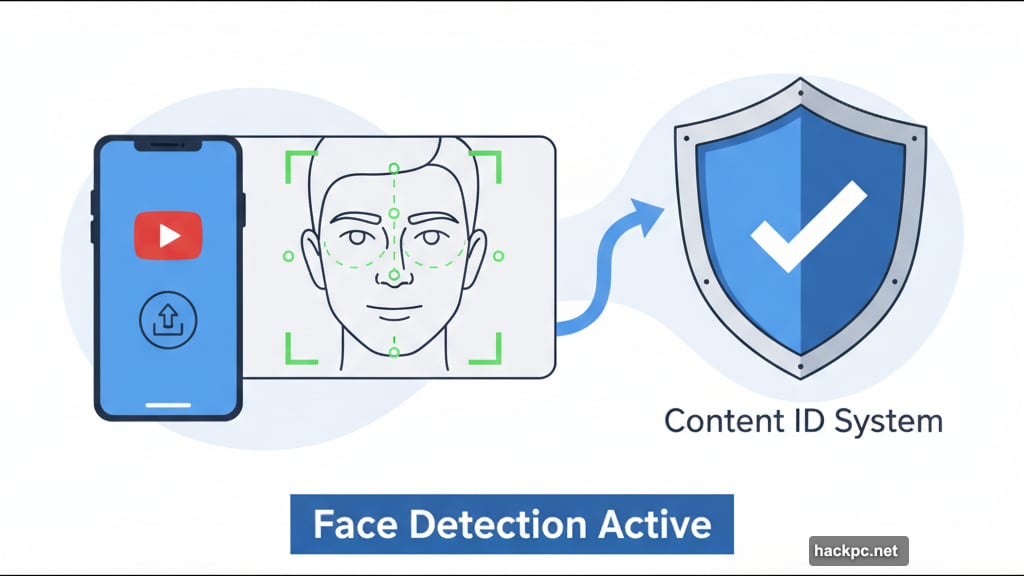

How Face Detection Actually Works

YouTube’s system works much like Content ID, its copyright protection tool. You submit a government ID and record a brief video selfie. This proves your identity and gives the system reference material to match against.

Then the tool monitors new uploads across the platform. When it spots a potential match, you get an alert. You review the flagged video and decide whether to request removal.

It’s essentially facial recognition meets content moderation. The technology scans frames looking for faces that match your biometric data. Pretty straightforward in concept.
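YouTube hasn’t published how its matcher works internally. One common way to build this kind of system, and purely an assumption here, is to convert each detected face into a numeric embedding vector and compare it with the enrolled reference using cosine similarity. This Python sketch assumes the embeddings already exist (produced by some face-embedding model that isn’t shown). `scan_upload`, `Flag`, and the 0.8 threshold are hypothetical names and values for illustration, not YouTube’s API.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff; a real system would tune this to balance
# missed deepfakes against false alarms.
MATCH_THRESHOLD = 0.8


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector can't match anything
    return dot / (norm_a * norm_b)


@dataclass
class Flag:
    """A potential match sent to the creator for review (hypothetical)."""
    video_id: str
    frame_index: int
    score: float


def scan_upload(
    video_id: str,
    frame_embeddings: list[list[float]],
    reference_embedding: list[float],
    threshold: float = MATCH_THRESHOLD,
) -> Optional[Flag]:
    """Return the best-matching frame at or above the threshold, or None.

    The function only flags; deciding whether to request removal is
    left to the person who enrolled, mirroring the review step above.
    """
    best: Optional[Flag] = None
    for i, emb in enumerate(frame_embeddings):
        score = cosine_similarity(emb, reference_embedding)
        if score >= threshold and (best is None or score > best.score):
            best = Flag(video_id, i, score)
    return best
```

For example, with a reference of `[1.0, 0.0, 0.0]` and frames `[[0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]`, only the second frame clears the threshold, so `scan_upload` flags frame 1 for review. Keeping a human in the loop matters because any fixed threshold will occasionally flag lookalikes.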

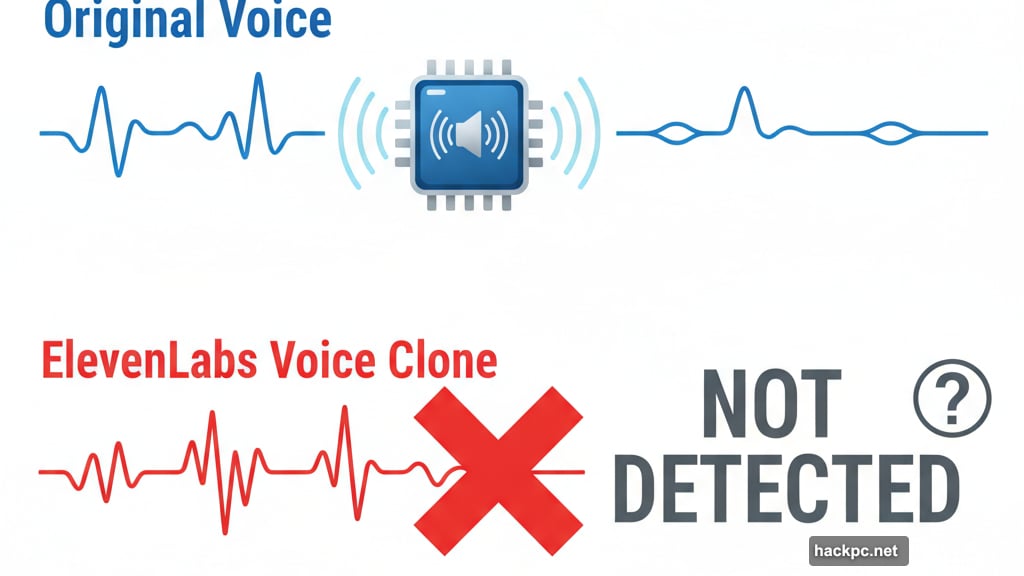

The Voice Problem Nobody’s Solving

Here’s where things get messy. Voice cloning has exploded in the past year. Tools like ElevenLabs can replicate someone’s voice from a short audio clip, sometimes just a few seconds long.

Plus, voice deepfakes can do even more damage than face swaps. Someone could make you endorse products you hate, spread misinformation in your voice, or stage fake customer service calls that sound like you.

YouTube acknowledges this limitation. They specifically note the tool “may not be able to catch” videos where only voice has been modified by AI. That’s a huge gap in protection.

Detecting voice manipulation is also technically harder than catching face swaps. Faces have consistent geometric features, while the same voice can sound quite different depending on microphone quality, compression, and recording environment.

Who Gets Protection First

Only YouTube Partner Program members can access likeness detection right now. Qualifying for the program typically requires at least 1,000 subscribers plus either 4,000 public watch hours in the past 12 months or 10 million Shorts views in the past 90 days.

What about everyone else? Too bad. If you’re not in the partner program, you can’t use this tool yet. YouTube hasn’t announced plans to expand access.

This creates a two-tier system. Established creators get deepfake protection, while smaller channels and ordinary people remain vulnerable. That seems backwards, since less prominent people arguably face more risk and have fewer resources to fight back.

The Verification Burden

Using this feature requires submitting a government ID and video selfie. That makes sense for preventing abuse. But it also creates friction.

Some people won’t feel comfortable uploading government documents to YouTube. Privacy concerns are legitimate. Plus, people in certain countries may lack the specific ID types YouTube accepts.

The video selfie requirement adds another hurdle. You’re essentially giving YouTube biometric data. That data needs secure storage and handling. One breach could expose facial recognition profiles for thousands of creators.

Still, verification seems necessary. Without it, bad actors could claim ownership of other people’s faces and abuse the takedown system. It’s a tough balance.

How This Compares to Nothing

Before this tool existed, creators had little recourse against deepfakes. You could manually report videos, or send a DMCA takedown if you could prove copyright infringement. But no automated detection existed.

So this represents progress. Imperfect progress, but progress nonetheless. Having facial deepfake detection beats having nothing at all.

However, YouTube’s rolling this out three years after ChatGPT launched and AI tools went mainstream. They’re playing catch-up. Deepfake technology advanced faster than platform protections.

And with tools like Sora making video generation even easier, the arms race continues. YouTube adds detection. Bad actors find ways around it. The cycle repeats.

What Actually Needs to Happen

Voice detection needs to launch fast. Face-only protection solves maybe half the deepfake problem. Audio manipulation often causes equal or greater harm.

Plus, YouTube should expand access beyond partner program members. Deepfakes don’t discriminate by subscriber count. Everyone deserves protection.

Finally, the platform needs proactive detection, not just reactive takedowns. Scanning uploaded videos catches deepfakes after they’re public. Better to flag suspicious AI-generated content before it goes live.

Other platforms face similar challenges. TikTok, Instagram, and X (formerly Twitter) all struggle with AI-generated impersonation. YouTube’s solution might inform industry standards. Or it might prove inadequate and force better alternatives.

YouTube took a step forward. But they’re still playing defense in a game where offense keeps getting more powerful. Creators gained a tool. Whether it’s enough remains to be seen.

The deepfake problem isn’t going away. It’s accelerating. Platform tools need to accelerate faster.
