Roblox just dropped a bombshell on its youngest users. Soon, every child who wants to chat with friends will need to either upload an ID or scan their face with a video selfie.

No exceptions. No workarounds. And for a platform built on social interaction, that’s a massive change affecting tens of millions of children worldwide.

The rollout starts in Australia, New Zealand, and the Netherlands this December. But by early next year, every Roblox user globally faces the same requirement. On top of that, the company’s betting everything on face-scanning technology that’s never been tested at this scale on children.

Face Scans Replace Traditional Age Verification

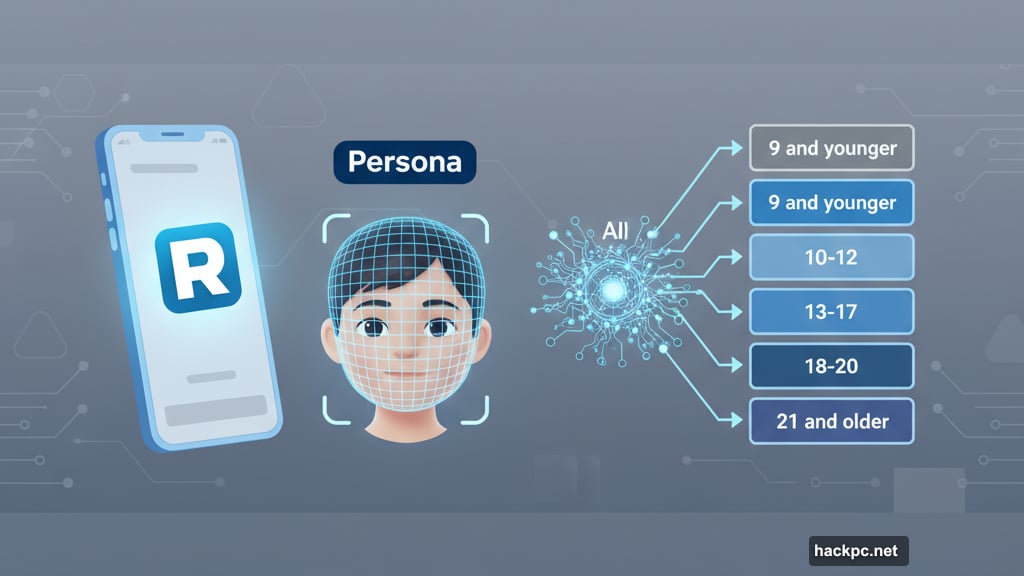

Roblox partnered with identity verification company Persona to implement what they call “age estimation” technology. The system works through video selfies captured directly in the Roblox app.

Here’s how it functions. Users open the app and point their camera at their face. The AI analyzes facial features to guess their age within a two-year range. Then the system assigns them to one of six age groups, from “9 and younger” to “21 and older.”

Roblox claims it deletes face scan images immediately after processing. But that’s a tough sell to parents already nervous about AI and data privacy. Accuracy is a separate question: Chief Safety Officer Matt Kaufman said the technology works best for users between 5 and 25 years old, calling it “pretty accurate” within one or two years.

That margin of error matters. A 12-year-old incorrectly classified as 14 gets different chat permissions. Meanwhile, a 9-year-old tagged as 11 accesses content designed for older kids.

Age-Based Chat Locks Kids Into Narrow Groups

Beyond verification, Roblox introduced “age-based chat” restrictions that fundamentally change how young users interact on the platform.

Once the system assigns an age group, it limits who kids can talk to in-game. Teens and children can only chat with peers in their specific age bracket or adjacent groups. So a 10-year-old can’t message a 15-year-old, even if they’re siblings or friends in real life.

This approach differs dramatically from other platforms. Most social media requires users to be 13 or older. But Roblox actively welcomes younger children, creating unique safety challenges that age gating attempts to solve.

Moreover, the restrictions will extend beyond chat next year. Roblox plans to limit access to external social media links and participation in Roblox Studio based on verified ages. That effectively walls off younger users from significant chunks of the platform’s functionality.

Lawsuits Paint a Different Picture Than PR Statements

Roblox frames these changes as proactive safety measures. The reality looks messier.

Three states—Texas, Louisiana, and Kentucky—filed lawsuits against Roblox this year. The accusations? Failure to prevent adults from targeting minors on the platform. Those aren’t isolated complaints. They represent a pattern of alleged safety lapses despite years of announced improvements.

The company has released numerous child safety updates recently. Yet the legal actions suggest those efforts haven’t adequately protected users. So this mandatory verification push feels less like innovation and more like damage control.

Furthermore, forcing face scans on millions of children raises questions Roblox hasn’t fully addressed. What happens when the AI gets ages wrong? How do families without IDs or smartphones comply? What about privacy concerns in regions with strict data protection laws?

The Privacy Trade-Off Nobody Requested

Here’s what bothers me most. Roblox built an empire by letting kids create, play, and socialize freely. Now it’s demanding biometric data from its youngest users to maintain that access.

Yes, child safety matters enormously. But there’s something deeply uncomfortable about normalizing facial recognition scans for 7-year-olds. We’re teaching an entire generation that surrendering biometric data is the price of digital participation.

The company promises immediate deletion of face scan images. Yet once that data enters their systems, even briefly, risks emerge. Data breaches happen. AI models trained on children’s faces raise ethical concerns. And the precedent this sets feels dangerous.

Other platforms will watch Roblox’s rollout closely. If this works without major backlash, expect similar requirements elsewhere. Soon, scanning your child’s face might become standard practice for accessing any online service.

That future arrives in January for most users. Families in Australia, New Zealand, and the Netherlands face it next month. So millions of parents now need to decide whether their kids’ social connections are worth the biometric trade.
