Generative AI promised to kill image editing software. Two years in, I’m still opening Photoshop every single day.

That’s not because I’m a Photoshop wizard. Far from it. My skills barely scratch the surface of what Adobe’s flagship software can do. Yet even my basic manual edits consistently beat what AI generates.

The gap between AI hype and AI reality remains shockingly wide. Let me show you why.

AI Excels at Throwaway Work

Generative AI does shine in specific scenarios. Quick concept art for tabletop RPG sessions? Perfect. Prototype card layouts for game design? Sure. Digital props that nobody will scrutinize closely? Absolutely.

I’ve used AI to create fake sci-fi terminal overlays for my Alien RPG campaign. Players get phony corporate messages displayed on imaginary tablets. Does the text look slightly off? Do the fingers have weird proportions? Nobody cares. It’s a fun prop that lasts five seconds of screen time.

Photoshop’s Generative Fill works great as a quick healing brush replacement too. Need to extend an image background by a few inches? Fill it in. Want to remove a distracting element from a photo? Done in seconds.

But that’s where AI’s usefulness stops for me. These tools work for disposable content where precision doesn’t matter. The moment I need accuracy, AI falls apart completely.

The Finger Problem Exposed Everything

One tabletop character portrait nearly broke me. I needed a corporate sci-fi guy making a specific hand gesture. Two fingers splayed on one hand forming a “W” shape. Three fingers on the other forming a “Y.” Simple request, right?

Twenty minutes of prompt engineering later, AI still couldn’t grasp the concept. It generated hands facing the wrong direction. Wrong number of fingers. Fingers in completely different positions. Every single variation missed the mark.

So I gave up on AI entirely. Grabbed one generated image as reference. Cloned a finger in Photoshop. Moved it to the correct position. Adjusted lighting. Blended layers. Five minutes of manual work delivered exactly what I needed.

The AI gave me a starting point. But that starting point was no better than grabbing any random stock photo online. It failed the core task completely.

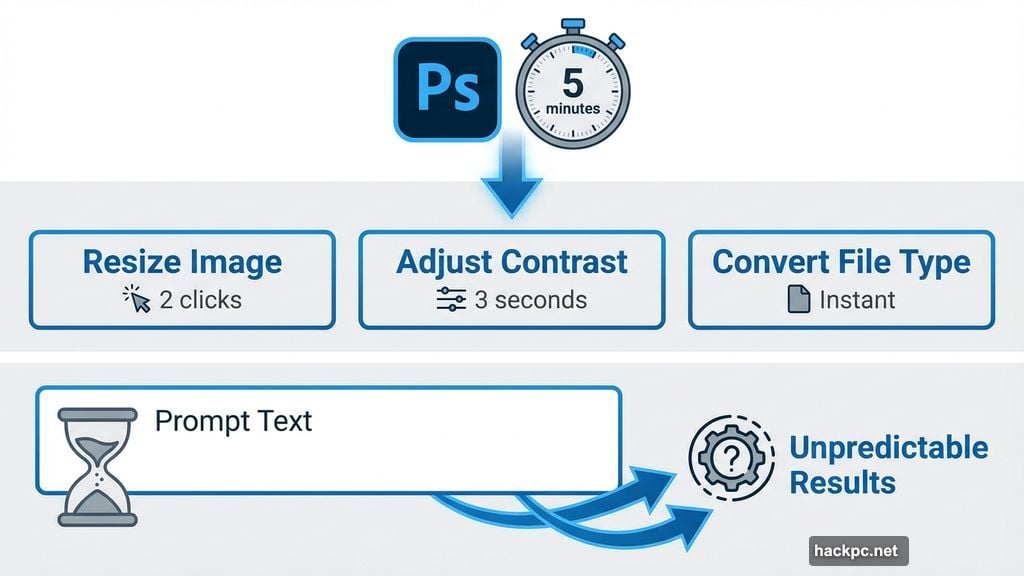

Speed Still Favors Manual Work

Daily Photoshop tasks take seconds. Resizing images? Two clicks. Adjusting contrast? Three seconds. Converting file types? Instant. Reframing compositions? Maybe 10 seconds if I’m being precise.

Why would I route these tasks through AI? The time spent crafting prompts exceeds the time I’d spend just doing the work myself. Plus, AI might randomly change aspects I didn’t ask it to touch.

That Coca-Cola Christmas ad disaster proved this at scale. The company spent more money on AI generation than traditional animation would’ve cost. Then they hired actual artists anyway to fix all the AI mistakes. The final product still looked terrible.

If Coca-Cola’s massive budget couldn’t make AI work for polished output, what hope do individual creators have?

Environmental Costs Nobody Mentions

Every AI image generation burns GPU cycles. That means electricity consumption. Server cooling requirements. Water usage for data center cooling systems.

Photoshop runs locally on my machine. The power draw stays minimal. No cloud computing overhead. No massive data centers spinning up to process my request.

When I can accomplish the same task faster, better, and with a fraction of the environmental impact, the choice becomes obvious. AI generation for trivial tasks feels wasteful beyond justification.

Generative Models Lack Understanding

Here’s the fundamental problem. AI doesn’t understand anything. It can’t parse instructions the way a human can. It pattern-matches based on training data and generates statistically probable outputs.

That’s why AI struggles with specificity. Ask for three fingers splayed in a particular way, and it generates what “hand gestures” typically look like in its training data. Your actual instruction gets lost in translation.

Traditional software follows explicit commands. Photoshop’s transform tool moves objects exactly where I tell them to go. The clone stamp copies precisely what I select. No interpretation. No hallucination. Just deterministic results.
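To make that contrast concrete, here is a toy sketch in Python. It is not how Photoshop or any real image model works internally. The `translate` and `sample_finger_count` functions are hypothetical names I made up for illustration, and the probability weights are invented. The point is only that an explicit command returns the same result every time, while a sampled output can differ from run to run.

```python
import random


def translate(pixels, dx, dy, fill=0):
    """Shift a 2D grid of pixel values by (dx, dy); vacated cells get `fill`."""
    height, width = len(pixels), len(pixels[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nx, ny = x + dx, y + dy
            # Pixels pushed past the edge are dropped.
            if 0 <= nx < width and 0 <= ny < height:
                out[ny][nx] = pixels[y][x]
    return out


def sample_finger_count(rng):
    """Toy stand-in for a generative model: draw from an invented distribution."""
    counts = [3, 4, 5, 6]
    weights = [0.1, 0.2, 0.6, 0.1]  # made-up numbers, purely illustrative
    return rng.choices(counts, weights=weights, k=1)[0]


# A 3x3 "image" with a single bright pixel in the center.
image = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]

# Explicit command: move everything one pixel to the right. Same input, same output.
moved_once = translate(image, 1, 0)
moved_again = translate(image, 1, 0)

# Sampled output: the most likely value usually wins, but nothing guarantees it.
rng = random.Random()
samples = [sample_finger_count(rng) for _ in range(10)]
```

Calling `translate` twice with the same arguments always yields identical grids. The `samples` list will usually be mostly fives, but it can contain a three or a six on any given run, which is roughly what prompting for a specific hand gesture feels like.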

Where AI Actually Helps

I’m not anti-AI. The technology does solve real problems in specific contexts.

Placeholder art for game prototypes? AI saves me hours of searching stock photo sites. Quick character portraits for RPG sessions? Sure, that works. Fun memes between friends? Absolutely.

But critical work? Anything requiring precision? Tasks where mistakes have consequences? I handle those manually every time.

AI can’t match the reliability, control, and speed of traditional tools for professional work. Maybe that changes in a decade. Maybe generative models evolve into something fundamentally different. But today’s generative AI isn’t replacing Photoshop for anyone who needs consistent, accurate results.

The Real Value Proposition

AI works best as an ideation tool. It generates starting points for concepts I’ll refine manually later. Sometimes seeing 10 AI variations sparks ideas I wouldn’t have considered otherwise.

That’s genuinely useful. But it’s not revolutionary. It’s not replacing skilled work. It’s just another tool in the creative process, alongside mood boards, reference photos, and sketch thumbnails.

The hype suggested AI would eliminate image editing entirely. Reality delivered something far more modest. A sometimes-helpful assistant that still requires human judgment, skill, and manual cleanup for anything important.

I’ll keep using both. AI for quick throwaway content. Photoshop for everything that actually matters. That balance works, and I don’t see it changing anytime soon.
