AI video generators finally broke free from the cloud. And it happened faster than anyone expected.

Lightricks unveiled LTX-2, a new AI video model built with Nvidia that runs entirely on your device. No cloud servers. No waiting for remote computers to process your clips. Just pure, local AI video generation that doesn’t compromise quality.

This isn’t just a technical achievement. It’s a fundamental shift in who controls AI-generated content and how creators work.

Why Local AI Video Matters More Than You Think

Most AI video tools force you into a waiting game. You type a prompt, submit it to the cloud, and hope the servers aren’t overloaded. Then you wait 1-2 minutes for results.

Meanwhile, your prompt and video data flow through company servers. Those companies can analyze your work, train their models on your ideas, or store your content indefinitely. Creators lose control the moment they hit “generate.”

LTX-2 changes that equation completely. With Nvidia’s RTX chips handling the processing, your laptop becomes a self-contained AI video studio. Nothing leaves your machine. Your intellectual property stays yours.

For big entertainment studios diving into generative AI, that’s not just convenient. It’s essential. Studios can’t risk their unreleased projects or proprietary techniques leaking through cloud services. Local processing solves that problem entirely.

The Technical Specs That Make This Possible

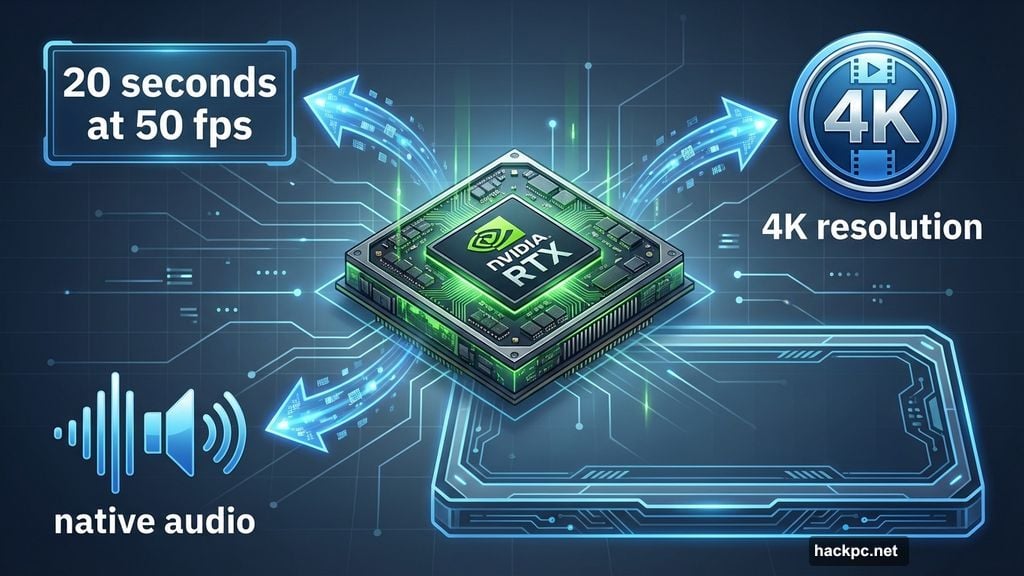

LTX-2 generates clips up to 20 seconds long at 50 frames per second. That puts it on the longer end of current AI video capabilities. Plus, it outputs in 4K resolution with native audio included.

Those specs matter for professional work. Most AI video tools produce clips that look decent on social media but fall apart on larger screens. LTX-2 targets the quality bar that filmmakers and studios actually need.
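To put those numbers in perspective, here is a rough back-of-the-envelope sketch of what one maximum-length clip contains. The 20 seconds and 50 frames per second come from the specs above. The frame size of 3840×2160 and the 3 bytes per pixel are assumptions, since the announcement says only “4K,” and real output would be compressed to a small fraction of the raw figure.

```python
# Back-of-the-envelope arithmetic for one maximum-length clip.
# From the article: 20 seconds at 50 frames per second.
# Assumed (not stated in the article): "4K" means 3840x2160, 8-bit RGB.

CLIP_SECONDS = 20
FPS = 50
WIDTH, HEIGHT = 3840, 2160   # assumption: UHD 4K frame size
BYTES_PER_PIXEL = 3          # assumption: 8-bit RGB, uncompressed


def clip_stats(seconds=CLIP_SECONDS, fps=FPS, width=WIDTH, height=HEIGHT,
               bytes_per_pixel=BYTES_PER_PIXEL):
    """Return frame count, pixels per frame, and raw size in bytes."""
    frames = seconds * fps
    pixels_per_frame = width * height
    raw_bytes = frames * pixels_per_frame * bytes_per_pixel
    return {
        "frames": frames,
        "pixels_per_frame": pixels_per_frame,
        "raw_bytes": raw_bytes,
    }


if __name__ == "__main__":
    stats = clip_stats()
    print(f"Frames per clip:   {stats['frames']:,}")
    print(f"Pixels per frame:  {stats['pixels_per_frame']:,}")
    print(f"Uncompressed size: {stats['raw_bytes'] / 1e9:.1f} GB")
```

Under those assumptions a single clip is 1,000 frames of roughly 8.3 million pixels each, which is why generating it has traditionally been a data center job.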

The model is also open-weight, meaning developers can see the architecture and adapt it for specific use cases. You won’t find that transparency with Google’s Veo 3 or OpenAI’s Sora. Those remain closed black boxes where you input prompts and hope for the best.

Open-weight models don’t reveal everything. Think of them as a recipe that lists the ingredients but not the exact measurements. Even so, they give developers enough information to build custom tools and improvements, which matters for companies that want AI video generation tailored to their specific workflows.

How Nvidia Made Local Generation Feasible

AI video generation consumes enormous computing power. Creating even 10-second clips normally requires data center resources. That’s why every major AI video tool runs in the cloud.

Nvidia’s RTX chips changed the math. These GPUs pack enough processing power to handle the intensive calculations locally. Combined with LTX-2’s optimized architecture, devices running RTX chips can match cloud-based quality without the latency.

The speed difference shows up immediately. Cloud-based tools take at least 1-2 minutes per clip. Local generation eliminates network transfer time and server queues. For creators generating dozens of iterations, those minutes add up to hours saved.

Moreover, you’re not competing with other users for server access. Cloud services slow down when usage spikes. Your local GPU only serves you. That consistency matters when you’re working against deadlines.
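The “minutes add up to hours” claim is easy to sanity-check. The sketch below totals only the cloud-side wait, using the 1-2 minutes per clip cited above. The iteration count is a hypothetical placeholder, and no local generation time is assumed because the announcement does not give one.

```python
# Cumulative cloud wait across many iterations.
# From the article: cloud tools take roughly 1-2 minutes per clip.
# Hypothetical: the iteration count below is only an illustration.


def total_wait_minutes(iterations, minutes_per_clip):
    """Total time spent waiting if every clip takes minutes_per_clip."""
    return iterations * minutes_per_clip


def cloud_wait_range(iterations, low=1.0, high=2.0):
    """Best-case and worst-case total wait, in minutes."""
    return (
        total_wait_minutes(iterations, low),
        total_wait_minutes(iterations, high),
    )


if __name__ == "__main__":
    iterations = 60  # hypothetical: a busy day of prompt tweaking
    low, high = cloud_wait_range(iterations)
    print(f"{iterations} iterations: {low / 60:.1f} to {high / 60:.1f} hours of waiting")
```

At 60 iterations, the wait alone comes to between one and two hours, before counting queue delays during usage spikes.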

Open-Weight Models vs Closed Systems

The AI industry loves talking about “open” models while keeping everything proprietary. True open-source requires disclosing training data, code, and architecture. Almost no AI video model meets that standard.

Open-weight models split the difference. Developers can access the model weights and architecture but not the training data or exact training process. It’s like getting the blueprint for a building without knowing the construction techniques.

LTX-2 is available now on Hugging Face and through ComfyUI. Developers can download it, modify it, and build tools on top of it. That creates an ecosystem of custom implementations rather than forcing everyone through one company’s interface.

Compare that to Sora or Veo. Those tools only work through their respective company platforms. You get what they built, how they built it, with no customization options. For many creators, that’s fine. But studios and professional workflows need flexibility that closed systems can’t provide.

The Catch Nobody Mentions

Running LTX-2 locally requires serious hardware. You need an Nvidia RTX GPU, and not just any RTX chip. Higher-end models will perform better and generate faster.
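If you are not sure what is inside your machine, a quick check like the one below can tell you. It is a minimal sketch that shells out to Nvidia’s `nvidia-smi` utility and looks for “RTX” in the reported GPU name. The helper names are made up for illustration, the name match is only a heuristic, and no minimum memory figure is checked because the announcement does not state one.

```python
# List installed Nvidia GPUs and check whether any is an RTX model.
# Helper names are illustrative; the "RTX" name match is a heuristic.
import shutil
import subprocess


def parse_gpu_lines(output):
    """Parse 'name, memory' CSV lines into (name, memory) tuples."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        name, _, memory = line.partition(",")
        gpus.append((name.strip(), memory.strip()))
    return gpus


def list_nvidia_gpus():
    """Return detected GPUs, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


def has_rtx(gpus):
    """True if any detected GPU name contains 'RTX'."""
    return any("RTX" in name.upper() for name, _ in gpus)


if __name__ == "__main__":
    found = list_nvidia_gpus()
    for name, memory in found:
        print(f"{name} ({memory})")
    print("RTX GPU detected" if has_rtx(found) else "No RTX GPU detected")
```

On a machine without Nvidia drivers the script simply reports that no RTX GPU was detected.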

That hardware is a significant investment for individual creators. A capable RTX-powered laptop starts around $1,500. Desktop setups with high-end RTX cards can easily run $2,000 to $3,000 or more. Cloud-based tools let you avoid that upfront cost.

However, cloud services charge per use. Generate enough videos, and you’ll eventually spend more than the hardware would cost. Plus, you’re renting access rather than owning the capability. If the service shuts down or raises prices, you’re stuck.

So the calculation depends on your usage. Casual creators probably benefit from cloud tools. Professionals generating videos daily will recover hardware costs quickly while gaining speed, privacy, and control benefits.
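To see where that tipping point sits for your own workload, you can run the numbers. The sketch below uses the $1,500 laptop figure from above. The per-clip cloud price and the daily clip count are hypothetical placeholders, not quotes from any real service, and the math ignores electricity and hardware depreciation.

```python
# Break-even point between buying hardware and paying per clip.
# From the article: a capable RTX laptop starts around $1,500.
# Hypothetical: cloud price per clip and clips per day are placeholders.
import math


def break_even_clips(hardware_cost, cloud_price_per_clip):
    """Number of clips after which cloud spend matches the hardware cost."""
    if cloud_price_per_clip <= 0:
        raise ValueError("cloud_price_per_clip must be positive")
    return math.ceil(hardware_cost / cloud_price_per_clip)


def days_to_break_even(hardware_cost, cloud_price_per_clip, clips_per_day):
    """Working days needed to reach the break-even clip count."""
    if clips_per_day <= 0:
        raise ValueError("clips_per_day must be positive")
    clips = break_even_clips(hardware_cost, cloud_price_per_clip)
    return math.ceil(clips / clips_per_day)


if __name__ == "__main__":
    hardware_cost = 1500.0   # from the article
    price_per_clip = 0.50    # hypothetical cloud price
    clips_per_day = 20       # hypothetical professional workload
    print(break_even_clips(hardware_cost, price_per_clip), "clips to break even")
    print(days_to_break_even(hardware_cost, price_per_clip, clips_per_day), "days at that pace")
```

With those placeholder values the laptop pays for itself after 3,000 clips, or about 150 days of daily use. Halve the workload and the payback period doubles, which is why casual creators come out ahead on cloud tools.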

Professional Studios Get Their Biggest Win Yet

Entertainment studios face unique challenges with AI tools. They work with unreleased content worth millions. They develop proprietary techniques they can’t afford to leak. They need performance that doesn’t bottleneck production schedules.

Cloud-based AI video fails on all three counts. Studios must trust third-party services with sensitive material. They compete for server resources with millions of other users. They risk their techniques being absorbed into model training.

Local AI video generation addresses all three problems. Studios keep complete control over their content and workflows. They scale by adding more RTX-powered workstations rather than hoping cloud providers upgrade capacity. They protect intellectual property by keeping everything in-house.

That’s why Lightricks explicitly targeted professional creators with LTX-2. The 4K output, 20-second clips, and native audio support all aim at production-grade work rather than social media clips.

What This Means for the AI Video Industry

Every major AI video tool currently runs in the cloud. Google, OpenAI, Runway, Pika Labs—they all rely on centralized servers processing user requests. That centralization gives them control over access, pricing, and features.

LTX-2 proves local generation works at professional quality levels. That puts pressure on competitors to offer local options or explain why they won’t. Studios and professional creators now have a benchmark for what’s possible.

The shift mirrors what happened with AI image generation. Initially, all tools ran in the cloud. Then Stable Diffusion proved local generation worked. Now both cloud and local options coexist, serving different use cases.

AI video will likely follow the same path. Cloud tools won’t disappear—they’ll remain convenient for casual use. But local generation will become the standard for professional work that demands privacy, speed, and control.

The companies that adapt fastest will dominate the next phase of AI video. Those that cling to cloud-only models risk losing the most valuable customers: professional creators who generate content at scale.

Lightricks and Nvidia just forced everyone’s hand. Local AI video generation is no longer a distant possibility. It’s here, it works, and it’s already better than cloud alternatives for serious creators.
