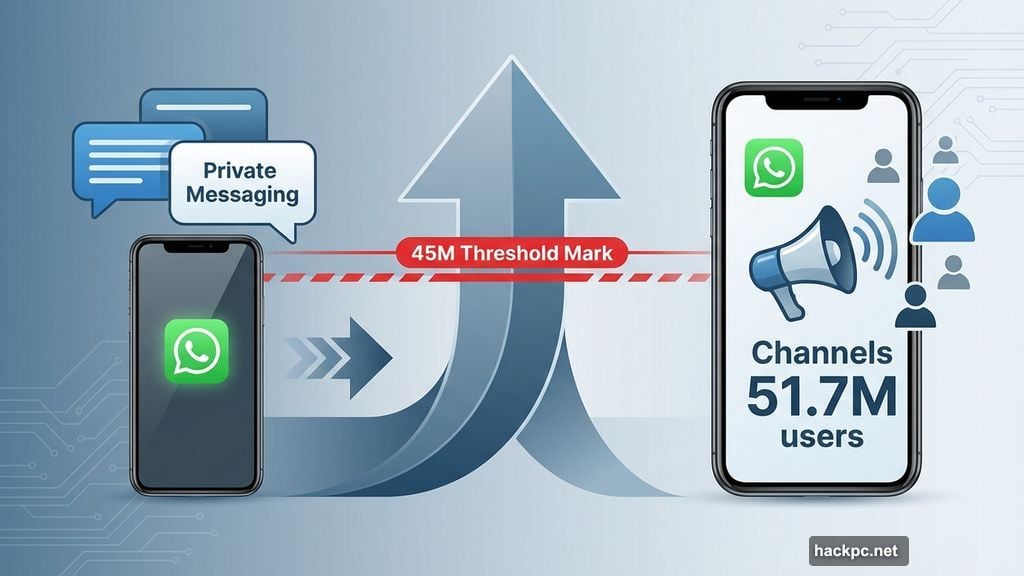

WhatsApp just triggered a regulatory tripwire in Europe. The app’s broadcasting feature, Channels, averaged 51.7 million monthly users across the EU in the first half of 2025. That matters because it crossed the 45 million threshold that activates much stricter oversight.

Now Meta faces potential fines up to 6% of global revenue if regulators decide WhatsApp violates content moderation rules. Plus, the company already deals with multiple EU enforcement actions across its platforms.

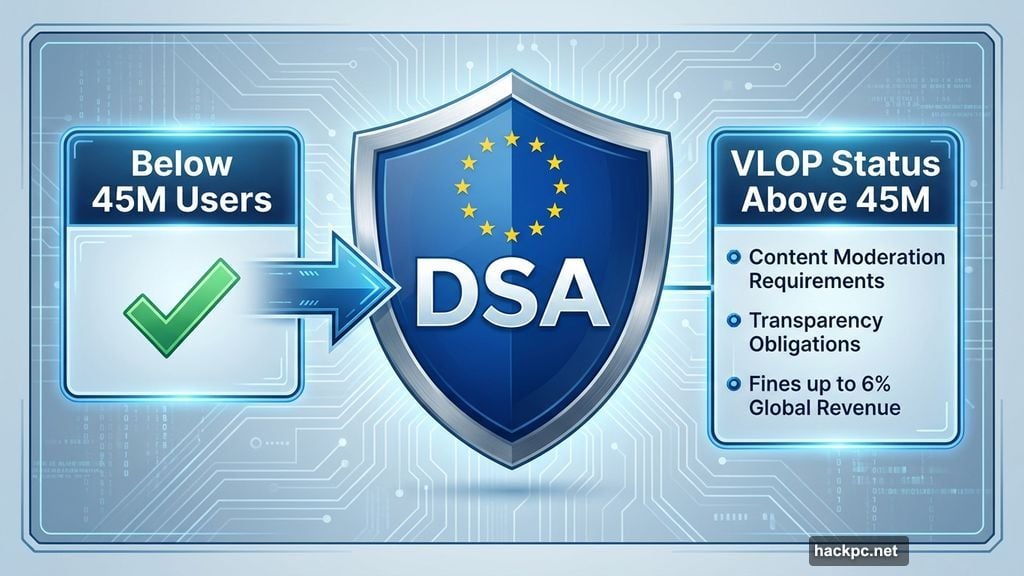

What Makes 45 Million Users So Important

The Digital Services Act draws a hard line at 45 million monthly active users in the European Union. Cross that number and your platform becomes a “very large online platform” or VLOP in regulatory speak.

But VLOP status isn’t just a label. It brings mandatory content moderation requirements, transparency obligations, and serious financial penalties for violations. In fact, companies can be fined up to 6% of worldwide annual revenue for breaking DSA rules.

WhatsApp Channels hit 51.7 million average monthly users in the EU during the first half of 2025. So the feature clearly passed the threshold. Moreover, a European Commission spokesperson confirmed they’re “actively looking into” designating WhatsApp under the DSA.

Here’s the quote from a Commission daily briefing: “So here we would indeed designate potentially WhatsApp for WhatsApp Channels and I can confirm that the Commission is actively looking into it and I wouldn’t exclude a future designation.”
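The threshold test itself is simple arithmetic. Here is a minimal sketch using the two figures quoted above. The function and variable names are illustrative, and crossing the line only makes a platform eligible: the Commission still has to issue a formal designation.

```python
# Figures quoted in this article; all names here are illustrative only.
VLOP_THRESHOLD = 45_000_000          # DSA threshold: average monthly active EU users
CHANNELS_EU_USERS = 51_700_000       # WhatsApp Channels, first half of 2025


def exceeds_vlop_threshold(avg_monthly_eu_users, threshold=VLOP_THRESHOLD):
    """Return True when a service meets or exceeds the user threshold.

    This only models the numeric test. Actual VLOP status requires a
    designation decision by the European Commission.
    """
    return avg_monthly_eu_users >= threshold


margin = CHANNELS_EU_USERS - VLOP_THRESHOLD
percent_over = margin / VLOP_THRESHOLD * 100

print(exceeds_vlop_threshold(CHANNELS_EU_USERS))
print(f"{margin / 1e6:.1f} million users over the line ({percent_over:.1f}%)")
```

On these numbers, Channels sits about 6.7 million users, or roughly 15%, above the threshold.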

Why Channels Changed Everything

Traditional WhatsApp operates as private messaging between individuals and groups. Nobody regulates your personal text conversations. But WhatsApp Channels works differently.

The feature lets users broadcast one-sided posts to unlimited followers. Anyone can follow a channel and see updates. That architecture looks suspiciously similar to Meta’s other social platforms like Facebook and Instagram.

So regulators see Channels as a broadcasting tool, not private communication. And broadcast platforms face content moderation requirements that messaging apps avoid. This distinction matters enormously for regulatory purposes.

Meta launched WhatsApp Channels globally in September 2023. Growth accelerated through 2024 and into 2025. Now that growth has triggered consequences Meta probably anticipated but hoped to delay.

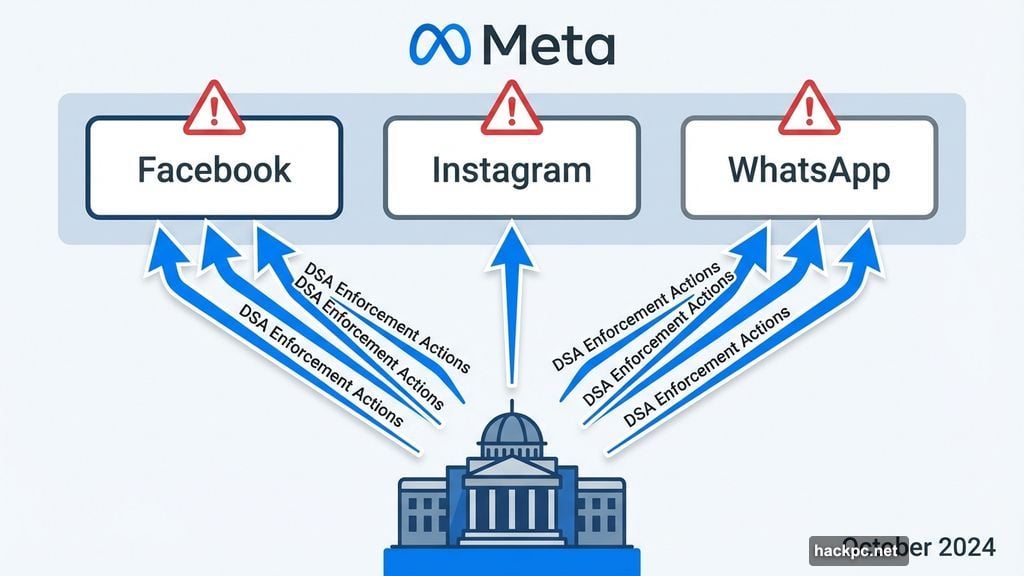

Meta’s Ongoing EU Regulatory Problems

WhatsApp’s possible designation adds to Meta’s existing European headaches. The company already faces multiple DSA-related enforcement actions across its platforms.

In October 2025, the European Commission issued preliminary findings that Meta breached the DSA in how Facebook and Instagram handle illegal content reports. Regulators argued the reporting process didn’t meet transparency standards. That case continues.

Also in October 2025, a Dutch court ordered Meta to change timeline presentation on Facebook and Instagram for Netherlands users. The court ruled people couldn’t “make free and autonomous choices about the use of profiled recommendation systems.” Meta must now offer clearer opt-outs for algorithmic feeds.

These cases established a pattern. European regulators scrutinize how Meta designs user interfaces, presents choices, and handles content moderation. So a WhatsApp Channels designation fits an existing enforcement strategy.

Moreover, speculation about WhatsApp’s regulatory status started back in November 2024. Reuters first reported the 45 million user threshold issue then. But official Commission comments confirm active investigation now.

What Designation Actually Means for WhatsApp

If the Commission officially designates WhatsApp as a VLOP, Meta faces immediate compliance obligations. The company must implement content moderation systems that identify and remove illegal material quickly.

Plus, Meta needs to provide transparency reports about content removal, appeals processes, and automated moderation accuracy. Users must get clear explanations when their content gets removed. And the company can’t use “dark patterns” that manipulate user decisions.

But here’s the practical challenge. WhatsApp encrypts personal messages end-to-end by default. Messages between users remain private. So how does Meta moderate Channels content while maintaining encryption promises?

The answer probably involves client-side scanning or user reporting mechanisms. Meta can scan content before encryption or rely on community reports. But both approaches face privacy criticism from digital rights advocates.

Furthermore, Meta must decide whether to change how Channels works in the EU specifically. The company could disable features, limit reach, or add friction to channel creation. Each option brings user experience tradeoffs.

The Revenue Risk Meta Can’t Ignore

Six percent of global revenue sounds abstract until you calculate actual numbers. Meta reported $134.9 billion revenue in 2023. Six percent equals roughly $8.1 billion.
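For readers who want to check the math, here is a quick sketch of that calculation. It uses only the 6% ceiling and the 2023 revenue figure quoted above. The function name is illustrative, and the result is a theoretical maximum, not a prediction of any actual fine.

```python
# Figures quoted in this article; the function name is illustrative only.
DSA_MAX_FINE_RATE = 0.06             # ceiling: 6% of worldwide annual revenue
META_REVENUE_2023_USD = 134.9e9      # Meta's reported 2023 revenue


def max_dsa_fine(annual_revenue_usd, rate=DSA_MAX_FINE_RATE):
    """Return the upper bound on a DSA fine for a given annual revenue."""
    return annual_revenue_usd * rate


ceiling = max_dsa_fine(META_REVENUE_2023_USD)
print(f"Maximum fine: ${ceiling / 1e9:.1f} billion")
```

The same function shows how the ceiling scales: every extra $10 billion in annual revenue raises the maximum exposure by $600 million.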

That’s not a rounding error. It’s a business-altering penalty that would impact shareholder value, employee compensation, and strategic planning. So Meta takes DSA compliance seriously even when publicly contesting specific requirements.

However, the Commission rarely issues maximum penalties immediately. Initial fines typically start lower to encourage compliance. But repeat violations or deliberate non-compliance can trigger escalating penalties that approach the 6% ceiling.

Meta also faces reputational costs beyond fines. Each regulatory action generates negative press coverage and user trust concerns. Privacy advocates cite these cases when arguing against Meta’s platforms. Competitors use regulatory problems in marketing comparisons.

Still, Meta keeps growing despite European regulatory pressure. WhatsApp has 2 billion users globally. Facebook and Instagram maintain massive audiences. So regulatory fines hurt but don’t existentially threaten the business.

What Happens Next

The European Commission will likely issue a formal designation decision within months. Meta can contest the designation through EU courts, but the 51.7 million user count seems straightforward.

After designation, Meta gets a compliance deadline to implement required systems. The Commission will audit those systems and issue findings. If regulators find gaps, they’ll demand corrections before considering penalties.

Meanwhile, Meta’s lawyers will argue WhatsApp Channels differs from Facebook or Instagram. The company might claim encryption constraints prevent certain moderation approaches. And Meta will emphasize existing content policies already cover illegal material.

But the Commission established precedent with previous Meta cases. Regulators rejected similar arguments about user choice and content moderation. So Meta faces an uphill battle proving WhatsApp deserves different treatment.

For users, changes might appear subtle at first. Slightly different reporting interfaces, more explicit content policies, maybe restrictions on channel reach or discovery. But the fundamental Channels experience probably continues.

The bigger question is whether other messaging apps with broadcasting features face similar scrutiny. Telegram operates channels. Signal has “stories” features. Discord runs community servers. Each could theoretically trigger VLOP designation if EU user counts grow high enough.

So WhatsApp’s situation creates precedent beyond Meta. Every platform with social features and European users now watches this case closely. The outcome shapes how they design, launch, and grow broadcasting tools in the EU market.

Meta hasn’t commented publicly on the possible designation yet. Standard corporate practice suggests lawyers are crafting carefully worded responses that admit nothing while appearing cooperative. Either way, the silence suggests Meta is taking this seriously.
