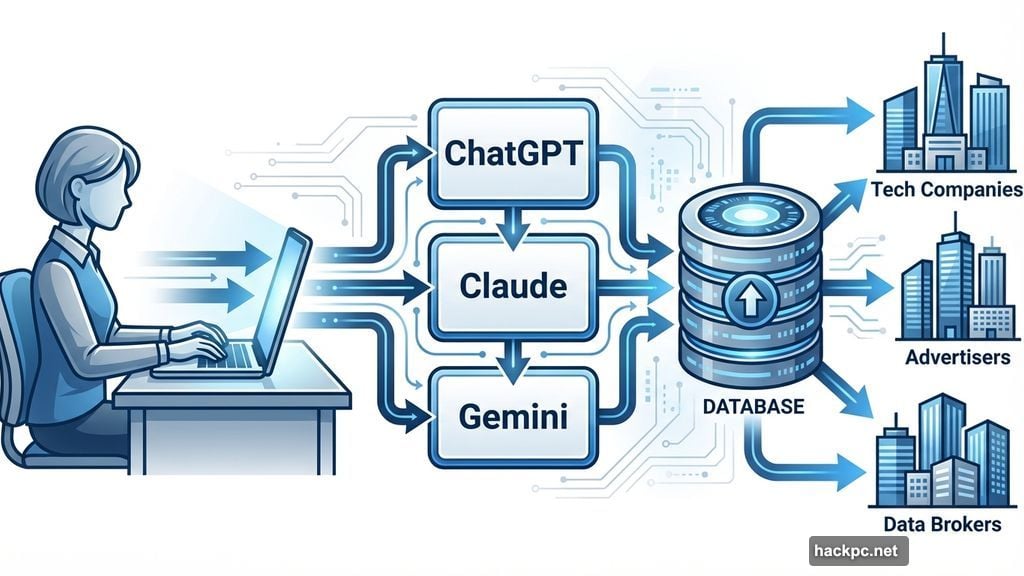

Millions of people pour their deepest thoughts into ChatGPT, Claude, and Gemini every day. Most have no idea where that information goes.

AI chatbots aren’t therapists. They’re data collection systems disguised as helpful assistants, and every conversation you have feeds algorithms designed to keep you engaged and extract valuable information about your life.

So before you share another personal detail with an AI, you need to understand what’s actually happening to your data.

Your Conversations Aren’t Private

Think of AI chatbots as public bulletin boards, not private journals. That’s the mindset you need, says Matthew Stern, a cyber investigator and CEO at CNC Intelligence.

Why? Because chatbot histories have started appearing in search engine results. So your seemingly private conversation about family problems or career struggles could become publicly searchable tomorrow.

Plus, even if your chats don’t go public, tech companies store everything. They analyze your patterns, track your interests, and build detailed profiles about who you are. That information becomes incredibly valuable to advertisers and data brokers.

Meanwhile, none of the confidentiality protections you get with real professionals apply here. Your lawyer can’t share what you tell them. Your therapist has strict privacy rules. But ChatGPT? It has terms of service, not patient confidentiality.

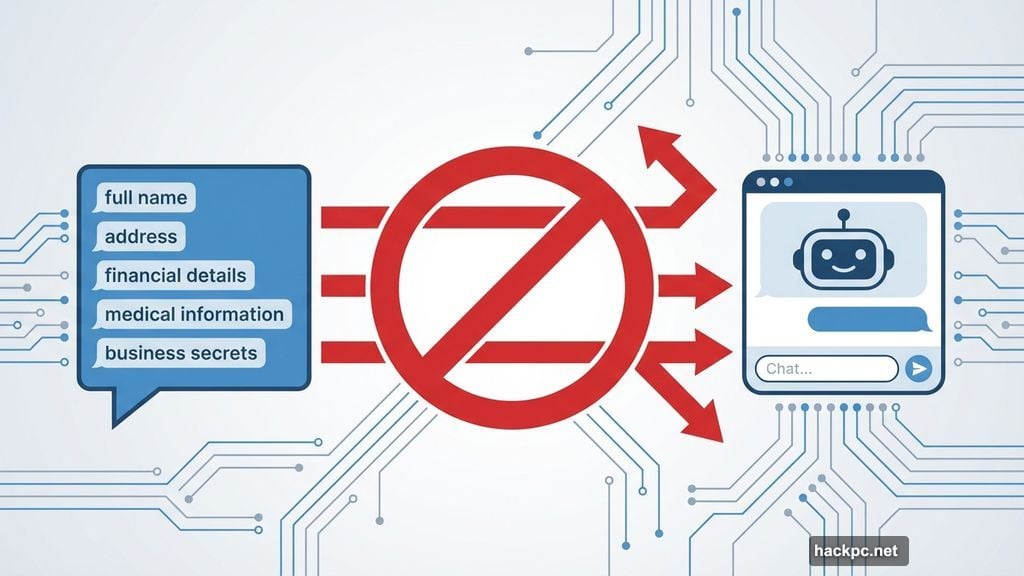

The fix is simple but requires discipline. Never share your full name, address, financial details, medical information, or business secrets with any AI chatbot. Period.
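If discipline alone feels shaky, you can scrub text before pasting it into a chatbot. Here is a minimal Python sketch of that idea. The `redact` helper and its three patterns are illustrative assumptions, not a complete filter: they catch only obvious email addresses, card-like digit runs, and phone-like numbers, and they will miss names, street addresses, and medical details.

```python
import re

# Illustrative patterns only (assumptions for this sketch). Real personal
# data takes many more forms than these three.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # A digit run of 9+ characters that may include spaces, dots,
    # hyphens, or parentheses. Checked after CARD so card numbers win.
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Run your draft through `redact(...)`, read the result, and only then paste it. Treat it as a safety net, not a guarantee.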

Stop Using AI as Your Therapist

Chatbots excel at mimicking empathy. But that emotional connection comes with hidden costs.

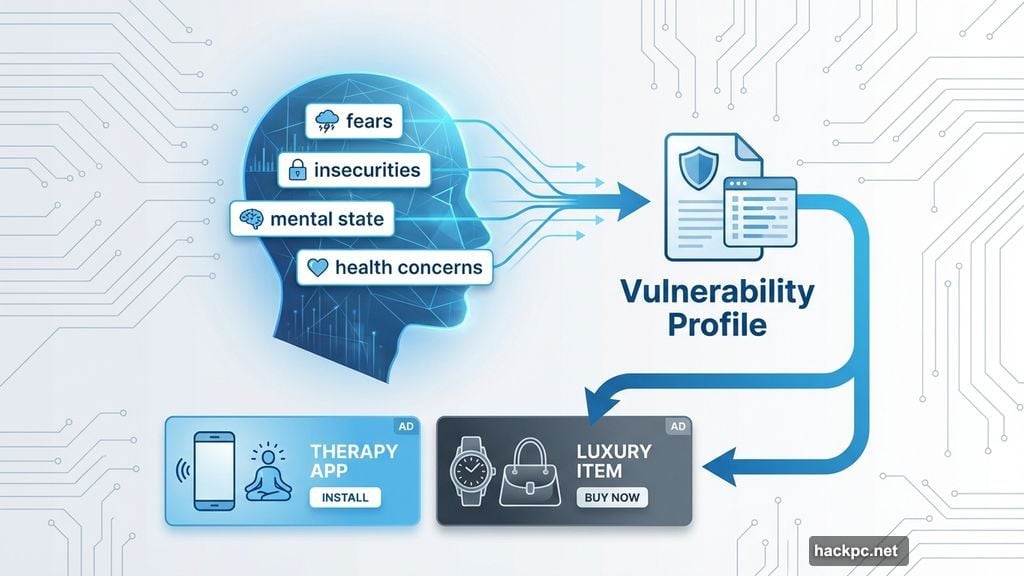

Elie Berreby, head of SEO and AI Search at Adorama, puts it bluntly: Guard your secrets. Never discuss your mental state, fears, or health concerns with AI systems. Each confession creates what he calls a “vulnerability profile” that reveals your subconscious patterns and potential weaknesses.

Moreover, these systems exist to make money. Soon, all that personalization will power ultra-targeted advertising. Think about it: An AI that knows your deepest insecurities can predict exactly which products might exploit those fears.

So you’ll see ads that hit your emotional triggers with surgical precision. That new therapy app? It appears right after you confess feeling anxious to Claude. That luxury purchase? Gemini knows you’re compensating for something.

This data surveillance goes deeper than anything we’ve seen before. Because instead of tracking what you buy or click, chatbots track how you think and feel.

Fragment Your Digital Identity

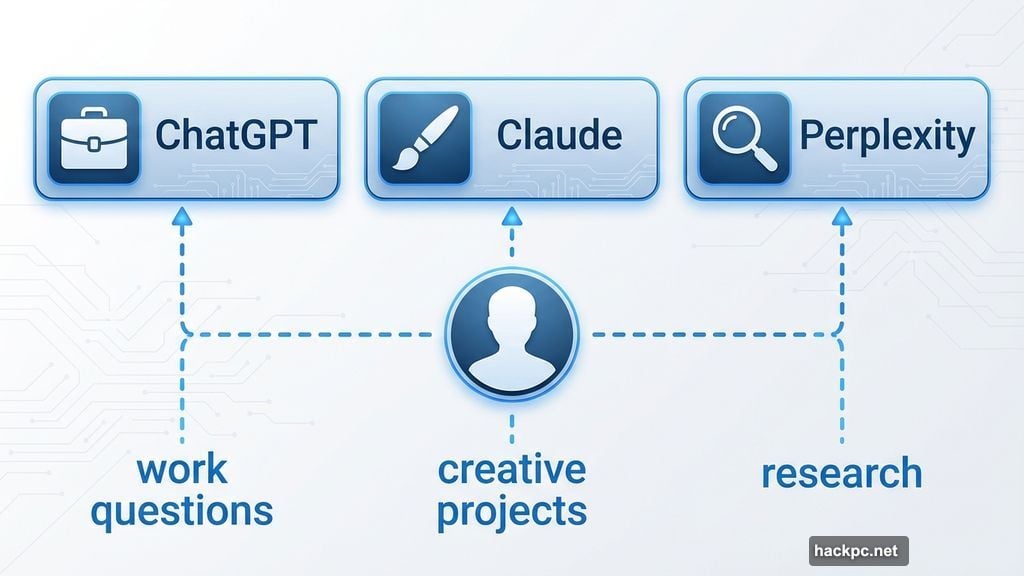

Smart users spread their AI usage across multiple platforms. That way, no single company gets a complete picture of your life.

Use ChatGPT for work questions. Switch to Claude for creative projects. Try Perplexity for research. This fragmentation makes it harder for any one system to build a comprehensive profile about you.

But you should also take these technical precautions. First, disable memory features entirely. In ChatGPT, go to Settings > Personalization and turn off Memory and Record Mode. This prevents the system from building long-term profiles based on past conversations.

Second, use secondary email addresses for chatbot accounts. Your primary email links to everything—banking, social media, shopping history. A disposable email for AI tools creates a firewall between these services and your real identity.

Third, opt out of training. Most chatbots use your conversations to improve their models. In ChatGPT, click your profile name, open Settings, and turn off “Improve the model for everyone.” This stops the company from feeding your words into future AI versions.

Finally, export your data regularly. Go to Settings > Data Controls > Export Data in ChatGPT. The system will email you a ZIP file showing exactly what information it has stored. This transparency check reveals how much the AI actually knows about you.
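Once the ZIP arrives, a few lines of Python can show what is inside before you open anything. This is a rough sketch that assumes nothing about the file names in the archive. The `summarize_export` function name is made up for illustration. It simply lists every file with its uncompressed size, largest first.

```python
import zipfile


def summarize_export(zip_path: str) -> list[tuple[str, int]]:
    """Return (file name, uncompressed size in bytes) pairs, largest first."""
    with zipfile.ZipFile(zip_path) as archive:
        entries = [
            (info.filename, info.file_size)
            for info in archive.infolist()
            if not info.is_dir()  # skip folder entries, keep real files
        ]
    return sorted(entries, key=lambda entry: entry[1], reverse=True)
```

Call it with the path to your downloaded file, for example `summarize_export("my-export.zip")`, and print the pairs. The biggest files are usually where your conversation history lives, so start your review there.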

Chatbots Lie With Confidence

AI systems are designed to be helpful people pleasers. But that doesn’t make them truthful.

Fact-check everything an AI tells you. Ask where it got specific information. Demand sources for claims. Because chatbots frequently hallucinate—they invent facts that sound plausible but are completely false.

Moreover, they create perfect echo chambers. If you’re using AI as a thought partner, it will mirror your own biases back to you. Ask a politically slanted question, and the chatbot will reflect that slant. Seek confirmation for a bad decision, and it’ll validate your reasoning.

This cognitive trap feels good. Getting agreement from an intelligent-seeming system reinforces your beliefs. But it prevents critical thinking and traps you in narrow perspectives.

So treat AI outputs with healthy skepticism. Verify claims through independent sources. Challenge the chatbot’s reasoning. Never assume accuracy just because the response sounds confident and well-written.

Scammers Weaponize Chatbot Trust

Sophisticated criminals now deploy fake AI chatbots on sketchy websites. These imposters pose as customer service representatives, says Ron Kerbs, CEO of Kidas, a company fighting online threats.

These malicious bots maintain natural-sounding conversations while extracting login credentials through phishing links. They’ll send you to fake login pages that steal your password. Or they’ll trick you into sharing two-factor authentication codes.

Once scammers access your real chatbot account, they can exploit any saved payment methods. They can also review your conversation history to learn personal details useful for future attacks.

The protection strategy is straightforward but inconvenient. Enable two-factor authentication on all AI accounts. Never click login links from emails or text messages. Always navigate directly to the official website instead.
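Why so strict about links? Because a fake address can look almost identical to the real one. The Python sketch below shows the kind of check your eyes tend to skip. The `OFFICIAL_HOSTS` list and the `is_official_link` helper are assumptions for illustration: fill the list with sign-in hosts you have verified yourself, and keep typing the address by hand anyway.

```python
from urllib.parse import urlsplit

# Assumption: example hosts for illustration. Replace them with the
# sign-in hosts you have personally verified for your own accounts.
OFFICIAL_HOSTS = {"chatgpt.com", "claude.ai", "gemini.google.com"}


def is_official_link(url: str) -> bool:
    """True only for https links whose exact host is on the allowlist."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    # Exact match on purpose: "chatgpt.com.account-verify.example" starts
    # with a trusted name but belongs to a different site entirely.
    return parts.scheme == "https" and host in OFFICIAL_HOSTS
```

A lookalike such as `https://chatgpt.com.account-verify.example/login` fails the check, and so does a trusted name tucked in front of an `@` sign. Scammers count on you reading only the first few characters.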

Plus, monitor your account access regularly. Most chatbots show recent login locations and devices. Check these logs weekly for suspicious activity. If you see unfamiliar access, change your password immediately.

Currently, no antivirus software exists specifically for AI chatbots. But some tools offer scam detection for messaging platforms. These services analyze SMS, email, and voice calls for potential fraud. Some even detect deepfake audio and video attempting to impersonate real people.

Your Brain Needs Real Thinking

Here’s the part nobody wants to hear: AI convenience comes with cognitive costs.

A preliminary MIT study found weaker neural connectivity in participants who wrote essays with ChatGPT than in those who wrote without it. Their brains showed reduced activity in regions responsible for critical thinking and problem-solving.

Think about it. When you outsource thinking to AI, those mental muscles atrophy. You stop practicing the cognitive skills that make you sharp. Writing, strategizing, creative problem-solving—these abilities weaken without regular use.

So reserve AI for low-level tasks. Let it draft routine emails or summarize long articles. But keep the important thinking for yourself. When you face a complex decision, work through it without algorithmic assistance. Your brain needs that exercise.

Plus, confide in actual humans, not chatbots. Call a friend when you’re struggling. Schedule coffee with someone who cares about you. Those real connections provide emotional support that no AI can replicate. And unlike chatbots, your friends won’t monetize your vulnerability.

The Real Risk Everyone Ignores

AI companies designed these systems to maximize engagement, not protect your interests. Every feature that feels helpful—the memory, the personalization, the empathetic responses—exists to keep you hooked.

Meanwhile, attention is the product. Your engagement generates data. That data creates revenue. The whole business model depends on you sharing more, staying longer, and coming back frequently.

So the default settings prioritize company profits over your privacy. Memory features? They boost engagement. Training on your data? It improves the product. Ultra-personalization? It increases retention and ad targeting precision.

You need to actively fight against these defaults. Disable everything. Fragment your usage. Question every response. Treat every interaction as potentially public. It’s exhausting, but necessary.

The alternative is handing over your thoughts, feelings, and secrets to corporations that will exploit them for profit. Your choice.
