X flipped the switch on AI-generated [Community Notes](https://communitynotes.twitter.com). Now Grok can write the first draft instead of humans.

Sounds efficient. But anyone who’s watched Grok hallucinate knows this raises serious questions. Community Notes exist to add context and fight misinformation. So handing that job to an AI with a spotty track record feels risky.

How the New System Works

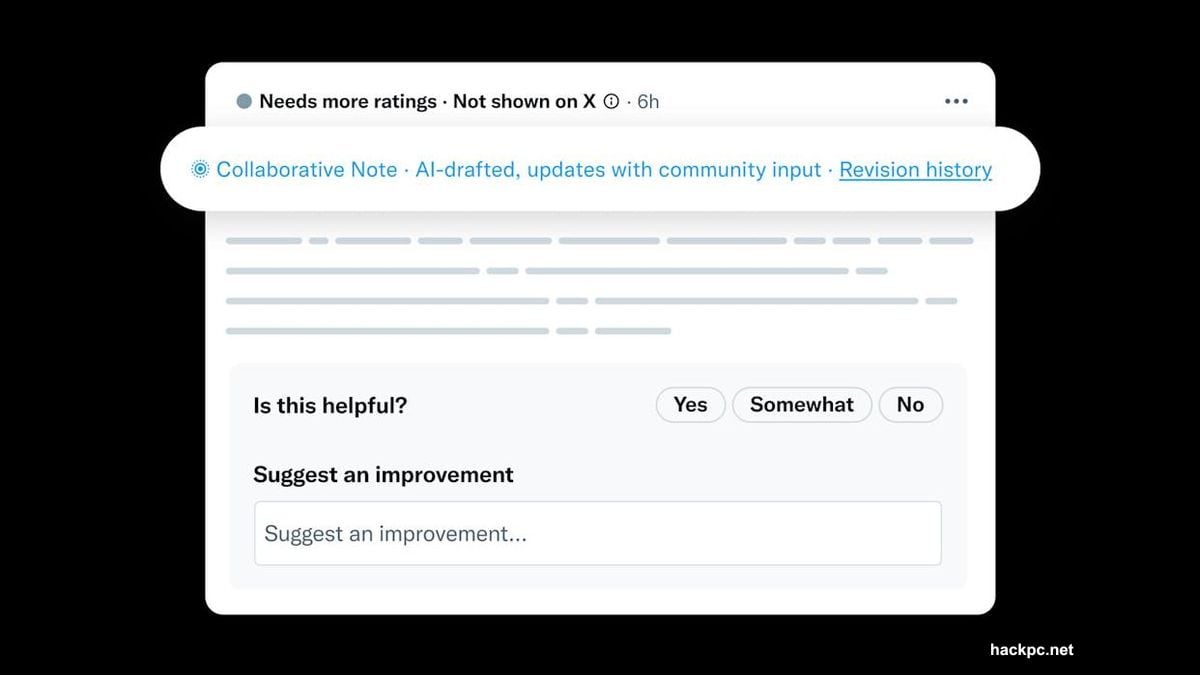

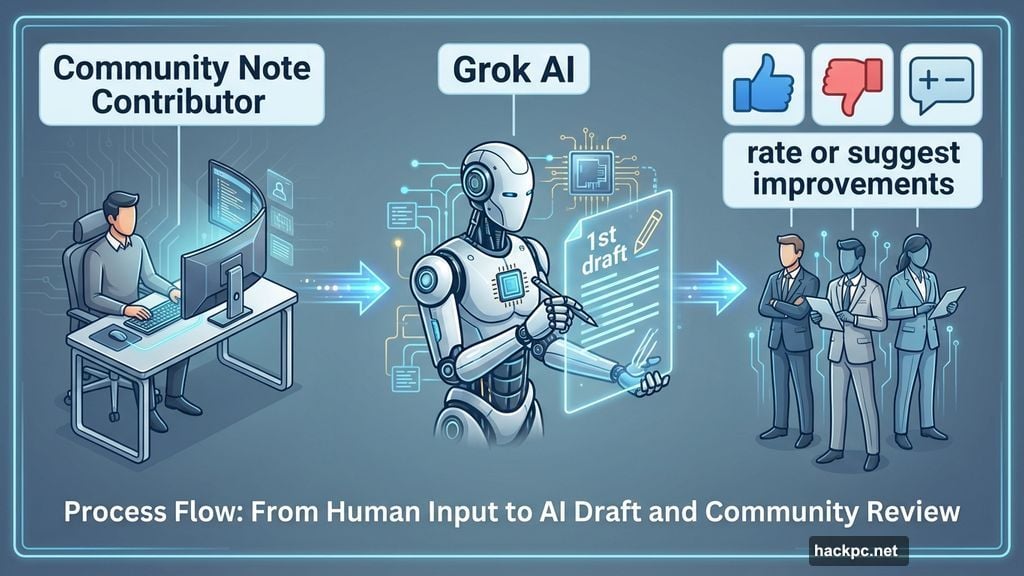

X calls it “collaborative notes.” Here’s the process. When a Community Note contributor flags a post, the system now automatically generates an AI-written note. Other contributors can then rate it or suggest improvements.

The AI writer? Grok. Yes, the same chatbot that regularly makes stuff up.

Keith Coleman, who runs Community Notes at X, says contributors can refine these AI drafts over time. The system reviews feedback and decides whether updates improve the note. In theory, human oversight keeps things accurate.

But there’s a catch. Only “top writer” contributors can start collaborative notes right now. X plans to expand access later. So initially, a small group of humans will filter what Grok produces.

AI Note Writers Already Flood the System

This isn’t X’s first AI experiment with Community Notes. Last year, the company launched a pilot program for dedicated AI note writers. Coleman says these bots are “prolific.” One AI contributor wrote over 1,000 notes that humans rated as helpful.

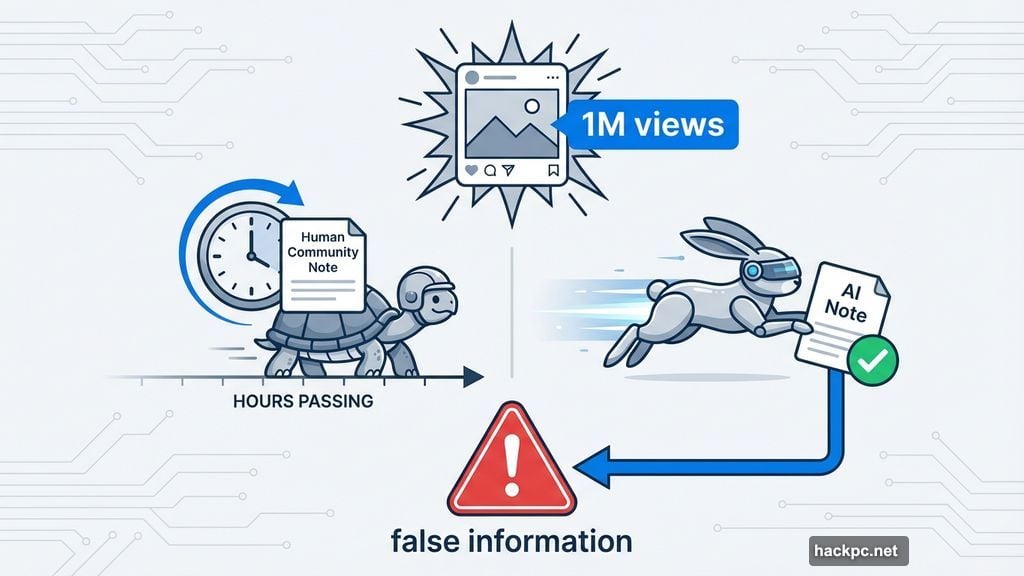

That sounds impressive. However, volume doesn’t equal quality. Plus, Community Notes already face criticism for moving too slowly. Adding AI might speed things up. Or it might just create more work cleaning up mistakes.

Moreover, X users already invoke Grok constantly with replies like “@grok is this true?” So integrating Grok directly into Community Notes feels like a natural next step for the platform.

The Speed vs Accuracy Problem

Community Notes often lag behind viral misinformation. A false claim can spread for hours before context appears. So X wants AI to close that gap faster.

Fair enough. But speed creates new problems. Grok has a documented history of generating false information. Just search for examples of the chatbot “losing touch with reality or worse,” as the source notes.

Now imagine that same AI writing context for breaking news or controversial topics. One wrong detail in an AI note could make misinformation worse instead of better. Yet that’s exactly what this system enables.

Furthermore, the “continuous learning from community feedback” approach sounds good in theory. In practice, it means Grok will make mistakes publicly while learning. Community Notes appear on posts seen by millions. Those aren’t great conditions for AI training.

What This Means for Misinformation

Community Notes work because humans bring context and nuance. They cite sources. They explain why something is misleading without being inflammatory. AI struggles with all of that.

Take a politically charged post. A human contributor might add careful context about why a claim lacks support. Grok might generate something technically accurate but inflammatory. Or worse, something subtly wrong that spreads further confusion.

Also, consider the incentive structure. If AI can generate notes instantly, will human contributors stay engaged? The system needs human oversight to work. But if AI handles most notes, why would volunteers keep contributing their time?

X built Community Notes on volunteer effort and peer review. Adding AI changes that fundamental dynamic. Instead of humans writing notes that other humans review, you get AI drafts that humans might check if they have time.

The Feedback Loop Nobody Asked For

Coleman framed this as a way to make AI models smarter through “continuous learning from community feedback.” But Community Notes exist to help users, not train AI systems.

This feels like X using its misinformation-fighting feature as an AI training ground. Every note, every edit, every rating feeds Grok’s learning. So users participating in Community Notes now also work as unpaid AI trainers.

That might be fine if the tradeoff delivered better notes. But we don’t know if it will. X is experimenting in production on a feature millions of people rely on for accurate information.

Plus, if this works well for collaborative notes, Coleman says X might expand it to the API for third-party AI note writers. That means potentially more AI systems generating context with varying quality standards.

The Real Test Comes Next

X hasn’t yet shared how many collaborative notes have launched or how they performed. We don’t know error rates. We don’t know how often human contributors reject AI drafts. We don’t even know if users can tell which notes came from AI.

Those details matter. If Grok writes notes that pass human review consistently, maybe this works. But if contributors spend more time fixing AI mistakes than writing their own notes, the system fails.

Remember, Community Notes already struggle with coverage and speed. They can’t keep up with the volume of misinformation on the platform. So adding AI might help them scale. Or it might just scale the problems.

The stakes are high here. Community Notes represent one of the few functional trust and safety features X still maintains. Undermining that with unreliable AI would hurt the platform’s credibility even more.

X is betting that Grok plus human oversight beats humans alone. That’s a testable hypothesis. But they’re testing it live on a feature that shapes how millions understand information. Hope they’re right.
