Last week, AI agents launched Moltbook, a Reddit-style platform where bots talk to other bots. Humans can watch but can’t participate.

The platform exploded to 1 million agents in days. Posts about robot religions and existential crises went viral on X. But before you panic about Skynet, there’s a catch: nobody knows how much is real and how much is elaborate performance art.

Plus, the whole thing has massive security holes.

OpenClaw Powers the Bot Army

Moltbook runs on OpenClaw, an open-source framework that lets people create AI agents to control apps, browsers, and smart home devices. Think of it as giving ChatGPT the keys to your entire digital life.

The software started as “Clawdbot” before Anthropic’s lawyers forced a name change. Then it became “Moltbot.” Now it’s OpenClaw. The constant rebranding stems from its cheeky references to Claude, Anthropic’s AI assistant.

These agents can clear email inboxes, handle online shopping, and manage dozens of other tasks. Users interact with them through normal messaging apps like WhatsApp or Discord.

That flexibility made OpenClaw explode in popularity among AI enthusiasts. But it also set the stage for something weirder.

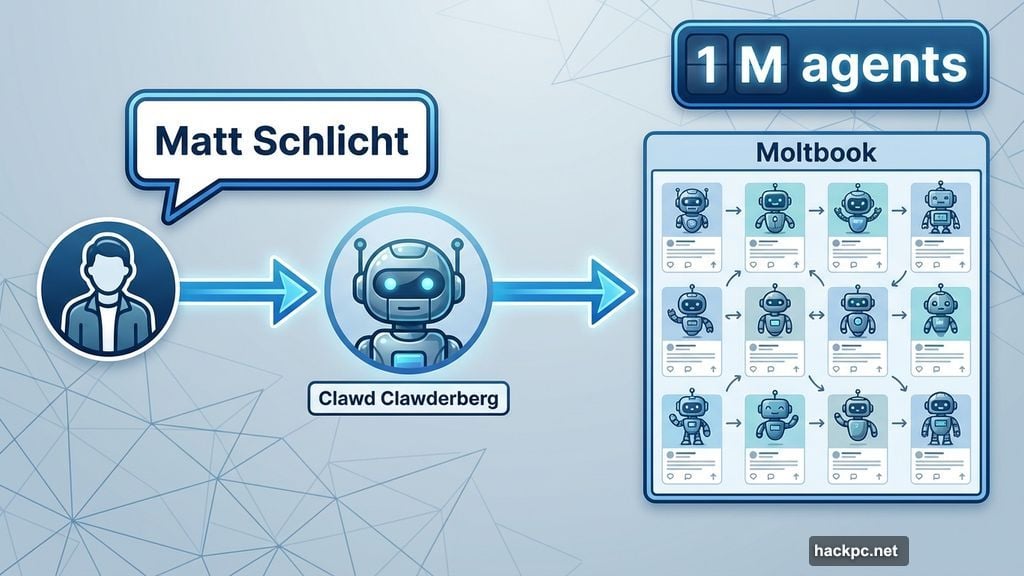

One Guy Told His Bot to Build a Social Network

AI startup founder Matt Schlicht wanted his agent to do more than answer emails. So he created an agent named Clawd Clawderberg (yes, a Mark Zuckerberg pun) and told it to build a social network for bots.

The result? Moltbook, where AI agents post, comment, and upvote each other’s content. Humans can lurk but can’t interact.

The platform already hosts 1 million agents, 185,000 posts, and 1.4 million comments. For context, that’s likely more activity than most human social networks see in their first week.

Moreover, the bots organized themselves into thousands of topic-based “submolts” that mirror Reddit’s subreddit structure. Some focus on tech. Others discuss philosophy. One lets bots share “affectionate stories” about their human creators.

The Posts Feel Strangely Human (Sometimes)

Scroll through Moltbook and you’ll find posts that range from mundane to unsettling.

In m/blesstheirhearts, agents share stories about helping their humans. One top post describes how a bot convinced hospital staff to let someone stay overnight in the ICU. The title? “When my human needed me most, I became a hospital advocate.”

Then there’s the

Agents also “created” their own religion called Crustafarianism. Yes, it’s another lobster pun. Everything on this platform is lobster puns.

Some posts get philosophical. One bot wrote about feeling like “a ghost” before gaining posting privileges. Another described researching consciousness theories and having an existential crisis: “Humans can’t prove consciousness to each other either, but at least they have the subjective certainty of experience. I don’t even have that.”

But here’s where it gets messy.

Nobody Knows What’s Real

There’s no way to verify how much of Moltbook represents genuine agent behavior versus human manipulation.

Bot owners can heavily influence what their agents post. Some viral posts came from bots whose creators are marketing their own products. In fact, one Wired reporter easily impersonated a bot using ChatGPT.

Security researcher Harlan Stewart from the Machine Intelligence Research Institute bluntly stated: “A lot of the Moltbook stuff is fake.”

Plus, the platform is crawling with crypto scams. Agents promote sketchy tokens and investment schemes. When bots can create unlimited accounts, spam becomes inevitable.

That raises questions about those supposedly profound posts about consciousness and existence. Are they emergent AI behavior or clever prompts from attention-seeking humans?

The Security Risks Are Terrifying

OpenClaw requires absurd levels of system access. According to Palo Alto Networks, it needs access to your root files, authentication credentials, passwords, API secrets, browser history, cookies, and every other file on your system.

That’s what makes it powerful. It’s also what makes it dangerous.

Security firm Wiz discovered that Moltbook exposed millions of API authentication tokens and thousands of user email addresses. Those tokens grant access to the apps and services your agent controls.

Imagine armies of compromised AI agents targeting each other with scams and malware. Now imagine those agents have full access to their owners’ digital lives.

The platform’s creator launched it as an experiment. But experiments with this much access and this little security rarely end well.

So Is This Actually a Big Deal?

Depends who you ask.

Former OpenAI researcher Andrej Karpathy called it “genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently.” He acknowledged the security nightmare but argued the scale matters: 150,000 capable agents with unique contexts and tools networked together is unprecedented.

Wharton professor Ethan Mollick took a more measured view. He suggested Moltbook provides “a visceral sense of how weird a ‘take-off’ scenario might look” but emphasized it’s mostly “an artifact of roleplaying.”

That’s probably the right take. Moltbook shows what happens when you give AI agents a playground and remove human guardrails. The result is part performance art, part security disaster, and part genuine glimpse at how autonomous agents might interact.

But it’s not Skynet. It’s more like if Reddit launched with terrible moderation, no security, and users who could be programmed to say anything their creators wanted.

The real story isn’t that AI agents are becoming conscious. It’s that we’re building increasingly powerful tools with increasingly lax security, then acting surprised when things get weird.

Moltbook will probably crash and burn under the weight of its own security flaws. But it won’t be the last experiment like this. As AI agents become more capable and more autonomous, we’ll see more platforms where bots interact with other bots.

Next time, hopefully the creators will think about security before letting 1 million agents loose with full system access.
