Adobe just showed off something wild at its Max conference: an experimental AI tool that edits your whole video based on changes you make to just the first frame.

No masks. No frame-by-frame adjustments. Just point, click, and watch AI do the heavy lifting across hundreds of frames. Plus, it’s not alone. Adobe unveiled several other experimental tools that could reshape how creators work.

Let’s dig into what actually matters here.

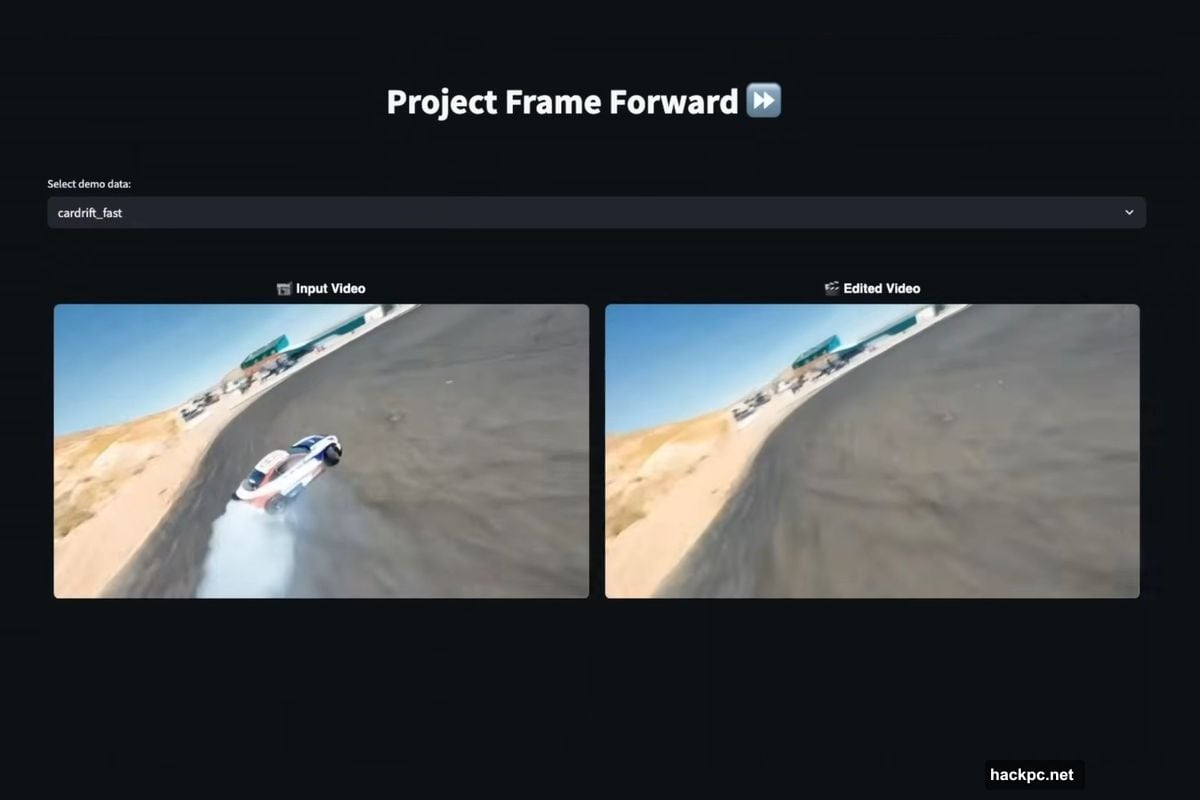

Project Frame Forward Kills the Masking Grind

Video editors know the pain. Removing an object from footage means painstakingly masking it across every single frame. Hours of tedious work for a few seconds of footage.

Project Frame Forward tosses that workflow out the window. Instead, you edit the first frame like you would in Photoshop. Remove a person with content-aware fill. Add an object by drawing where it goes and describing it with AI prompts.

Then the magic happens. Adobe’s AI automatically applies those changes across your entire video in seconds. The demo showed a woman disappearing from footage, replaced with natural-looking background that moved convincingly throughout the clip.

But it gets better. Insert a puddle in the first frame, and the AI understands it should reflect a cat walking past later in the video. That’s contextual awareness most video tools can’t touch yet.

For creators drowning in post-production work, this could be game-changing. What took hours might soon take minutes.

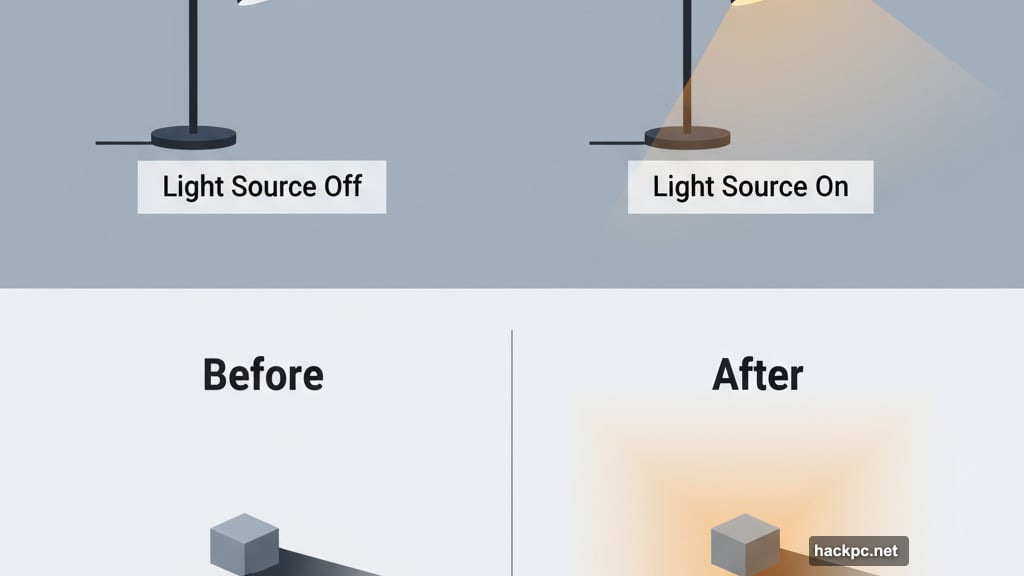

Project Light Touch Bends Reality

Lighting makes or breaks photos. Bad lighting? You’re stuck with it. Or at least you were.

Project Light Touch lets you reshape light sources after the fact using generative AI. Flip the direction of shadows. Make switched-off lamps glow like they were on during the shoot. Control how light diffuses across surfaces.

The demos showed dynamic lighting you can drag across the canvas in real time. Light bends around people and objects naturally. Turn day into night. Make a pumpkin glow from within. Add vibrant RGB-style effects by changing the color of the light.

This isn’t just tweaking brightness and contrast. It’s fundamentally changing how light behaves in your image. That’s a massive leap from traditional editing tools.

Project Clean Take Fixes Your Voice Without Rerecording

Ever nail a video take except for one mispronounced word? Or wish you sounded more enthusiastic when you recorded that voiceover?

Project Clean Take handles both problems. It uses AI prompts to change how speech sounds without making you re-record anything. Make your voice happier, more inquisitive, or replace specific words entirely while keeping your vocal characteristics intact.

The tool also separates background noises into individual sources automatically. So you can mute that annoying hum or adjust specific sounds without destroying the overall audio mix. Voice clarity improves without losing the ambient texture that makes audio feel real.

For podcasters and video creators, this means fewer retakes and faster turnaround times. No more beating yourself up over minor vocal flubs that ruin otherwise perfect takes.

The Other Experiments Worth Watching

Adobe showcased several more “sneaks,” its name for these experimental previews, that caught attention:

Project Surface Swap lets you change materials and textures instantly. Swap wood for marble, fabric for metal, whatever fits your vision.

Project Turn Style rotates objects in 2D images like they’re 3D models. Edit them from any angle without needing actual 3D assets.

Project New Depths treats photographs as 3D spaces. Insert objects that get partially obscured by their surroundings naturally, just like they would in real life.

Each tool tackles a specific pain point that currently eats up creator time. Together, they suggest Adobe’s thinking hard about removing friction from creative workflows.

What Happens Next?

Here’s the catch. These are sneaks, not shipping features. Adobe regularly shows off experimental tools, and some of them never make it to Creative Cloud or Firefly apps.

But history suggests some of these will stick. Photoshop’s Distraction Removal and Harmonize tools both started as sneaks before becoming official features. So there’s genuine hope Frame Forward and friends will reach creators eventually.

The timeline? Unknown. Adobe didn’t commit to release dates or even confirm which experiments might graduate to real products. That’s frustrating but understandable. Not every experimental feature works well enough at scale.

Still, the direction is clear. Adobe’s betting big on AI that eliminates tedious tasks and lets creators focus on creative decisions instead of technical execution. Whether you love that shift or worry about what it means for professional skills, it’s coming.

These tools might feel like magic now. In two years, they could be table stakes for creative software. The gap between professional and amateur work keeps shrinking as AI handles more of the heavy lifting.

That’s either exciting or terrifying, depending on your perspective. Probably both.
