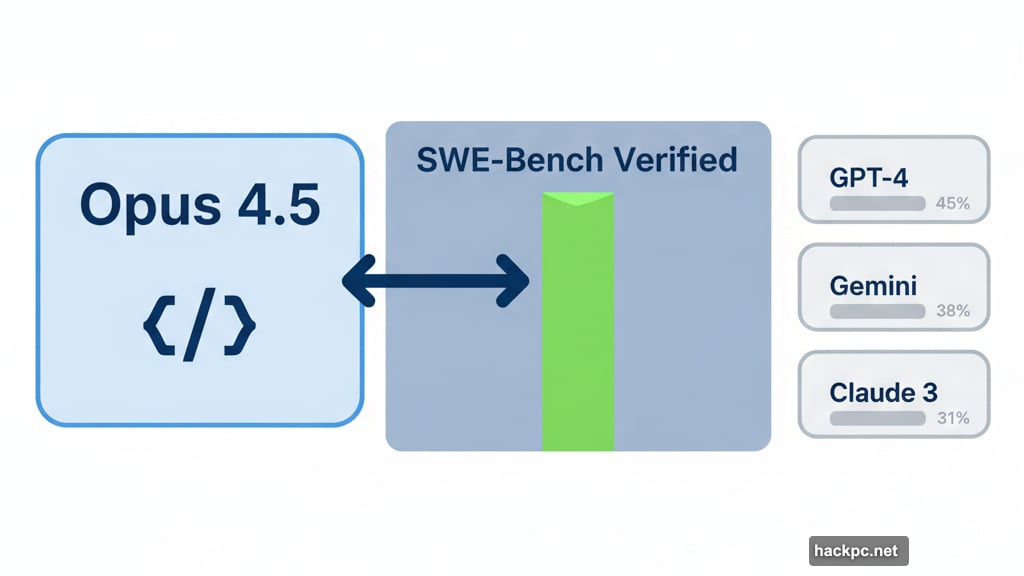

Anthropic just dropped Opus 4.5, and the numbers are wild. This model became the first AI to crack 80% on SWE-bench Verified, a coding benchmark where no earlier model had reached that mark.

The release completes Anthropic’s 4.5 series rollout. Sonnet 4.5 shipped in September. Haiku 4.5 followed in October. Now Opus 4.5 brings the power.

But here’s what makes this launch different. Anthropic isn’t just releasing a model. They’re shipping real integrations people can use today.

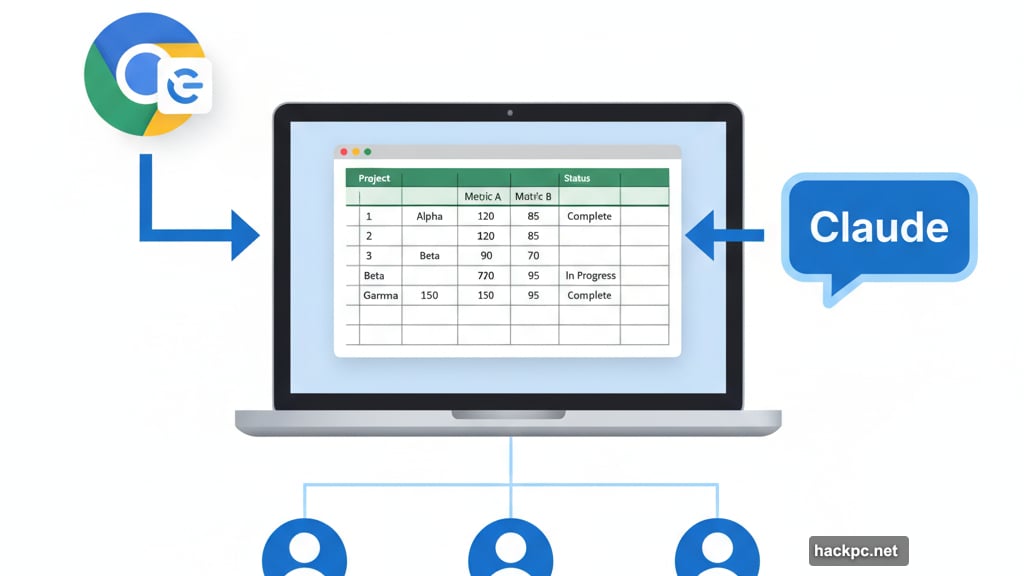

Claude Moves Into Your Browser

Chrome users get Claude built directly into their browser. No more tab switching to ask questions or analyze code.

The Chrome extension launches for all Max subscribers immediately. It runs locally in your browser, giving Claude direct access to what you’re viewing. That means instant context on any webpage without copying and pasting.

Plus, Anthropic opened up Claude for Excel to Max, Team, and Enterprise users. The spreadsheet integration handles complex data analysis inside Excel itself. No export required.

These aren’t pilot programs anymore. They’re production features available right now.

Memory Got Serious Upgrades

Context windows alone don’t solve everything. Anthropic learned that the hard way.

So Opus 4.5 includes major memory improvements. The model now compresses context intelligently instead of just storing everything. It remembers the right details, not just more details.

“Knowing the right details to remember is really important in complement to just having a longer context window,” Dianne Penn, Anthropic’s head of product management for research, told TechCrunch.

That upgrade enabled “endless chat” for paid users. Your conversations won’t stop when you hit the context limit. Instead, Claude compresses its memory seamlessly. You’ll never see an interruption message again.
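To make the idea concrete, here is a toy sketch of context compaction. It is not Anthropic’s implementation, which hasn’t been published in this detail. The `Conversation` class, the word budget, and the crude "first five words" summarizer are all hypothetical stand-ins; a real system would count tokens and ask a model to write the summary.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Conversation:
    """Toy chat history that compacts old turns instead of hitting a hard stop."""

    max_words: int = 50      # hypothetical budget, standing in for a token limit
    keep_recent: int = 2     # the newest turns always stay verbatim
    summary: str = ""        # compressed memory of everything older
    turns: List[str] = field(default_factory=list)

    def size(self) -> int:
        # Word count of the summary plus all verbatim turns.
        return len(self.summary.split()) + sum(len(t.split()) for t in self.turns)

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        # Over budget: fold the oldest turns into the summary, one at a time,
        # until we fit or only the protected recent turns remain.
        while self.size() > self.max_words and len(self.turns) > self.keep_recent:
            self._compact_oldest()

    def _compact_oldest(self) -> None:
        oldest = self.turns.pop(0)
        # Placeholder summarizer: keep only the first five words of the turn.
        gist = " ".join(oldest.split()[:5])
        self.summary = f"{self.summary} | {gist}" if self.summary else gist
```

The point of the sketch is the shape of the loop: the conversation never refuses a new message, it just trades old detail for a shorter summary.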

Agents Need Better Working Memory

Many Opus 4.5 improvements target agentic workflows. Think lead agents directing teams of Haiku-powered sub-agents.

Those scenarios demand rock-solid working memory. An agent exploring large codebases needs to track what it checked, what worked, and when to backtrack. That’s where the memory upgrades really matter.

“Claude needs to be able to explore code bases and large documents, and also know when to backtrack and recheck something,” Penn explained.

The model now handles multi-agent coordination naturally. One Opus agent manages several Haiku agents without losing track of progress or context.
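Here is a minimal sketch of what "working memory" means for an agent exploring a codebase. Everything in it is an assumption made for illustration: the `WorkingMemory` class, the `explore` function, and the `inspect` callback (which stands in for handing a file to a sub-agent) are hypothetical names, not part of any Anthropic API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class WorkingMemory:
    """What a lead agent tracks while it explores."""

    checked: Set[str] = field(default_factory=set)           # files already visited
    findings: Dict[str, str] = field(default_factory=dict)   # what each visit returned


def explore(
    codebase: Dict[str, List[str]],
    start: str,
    inspect: Callable[[str], str],
) -> WorkingMemory:
    """Depth-first walk over a file -> imports map, delegating each file once.

    `inspect` is a hypothetical stand-in for dispatching work to a sub-agent.
    """
    memory = WorkingMemory()
    stack = [start]
    while stack:
        path = stack.pop()
        if path in memory.checked:
            continue  # already handled; don't pay for the same file twice
        memory.checked.add(path)
        memory.findings[path] = inspect(path)
        # Queue unvisited imports. When a branch runs out, popping the stack
        # returns to the most recent unexplored file, which is the backtrack.
        pending = [dep for dep in codebase.get(path, []) if dep not in memory.checked]
        stack.extend(reversed(pending))
    return memory
```

Even in this toy version, the memory is what keeps the lead agent from re-inspecting files when imports loop back on each other.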

Benchmark Performance Tells the Story

Opus 4.5 dominates across major benchmarks. It scored over 80% on SWE-bench Verified, beating every previous model. That’s huge for developers who need AI that actually writes working code.

But the wins go beyond coding. Tool-use benchmarks (tau2-bench and MCP Atlas) show strong performance. General problem-solving tests (ARC-AGI-2, GPQA Diamond) confirm broad capabilities.

Terminal-Bench results suggest Opus 4.5 handles command-line environments well. That matters for DevOps workflows and system administration tasks.

The benchmark sweep suggests Anthropic focused on practical capabilities over narrow optimization. Every improvement serves real-world use cases.

Competition Heats Up Fast

Opus 4.5 faces tough rivals. OpenAI released GPT-5.1 on November 12. Google shipped Gemini 3 on November 18.

So three frontier models launched within two weeks. That’s an unusually crowded stretch, even for this industry. Each company claims state-of-the-art performance on different benchmarks.

What separates them? Integration depth matters more than raw scores. Anthropic bet on Chrome and Excel because that’s where people actually work. OpenAI focuses on ChatGPT improvements. Google pushes Workspace integration.

The winner depends on your workflow. Choose the model that integrates with tools you already use daily.

Real Integration Beats Raw Power

Here’s my take after tracking AI releases for years. Model capabilities matter less than integration quality.

Opus 4.5 might benchmark higher than competitors. But that advantage disappears if using it requires constant context switching. Anthropic gets this.

Chrome and Excel integrations put Claude where people work. No API calls. No separate apps. Just AI assistance inside familiar tools.

That’s smarter than chasing benchmark scores. Build where users already spend their time. Make AI invisible infrastructure instead of another app to manage.

The endless chat feature shows similar thinking. A technical solution (better memory compression) enables a seamless experience (uninterrupted conversations). That’s product design that respects user workflow.
