ByteDance just learned what happens when you let AI recreate celebrities without permission. Turns out, Hollywood lawyers move fast.

The company released Seedance 2.0 less than a week ago. Within days, a viral clip showing AI-generated versions of Tom Cruise and Brad Pitt fighting spread across social media. Artists erupted in anger, and major studios started sending cease-and-desist letters almost immediately.

Now ByteDance promises to fix the problem. But its response raises more questions than it answers.

The Viral Clip That Changed Everything

One video broke the internet and changed the conversation around AI video generation.

The clip showed what appeared to be Tom Cruise and Brad Pitt in a realistic fight scene. Neither actor participated. Neither gave permission. Yet the AI tool made it anyway.

Artists and creators saw this as proof their worst fears were justified. AI companies could now recreate anyone’s likeness without consent. Moreover, the technology worked well enough to fool casual viewers.

The backlash was swift and fierce. Social media filled with criticism. Industry professionals called it theft. And Hollywood’s legal teams went to work.

Disney Drops the Legal Hammer

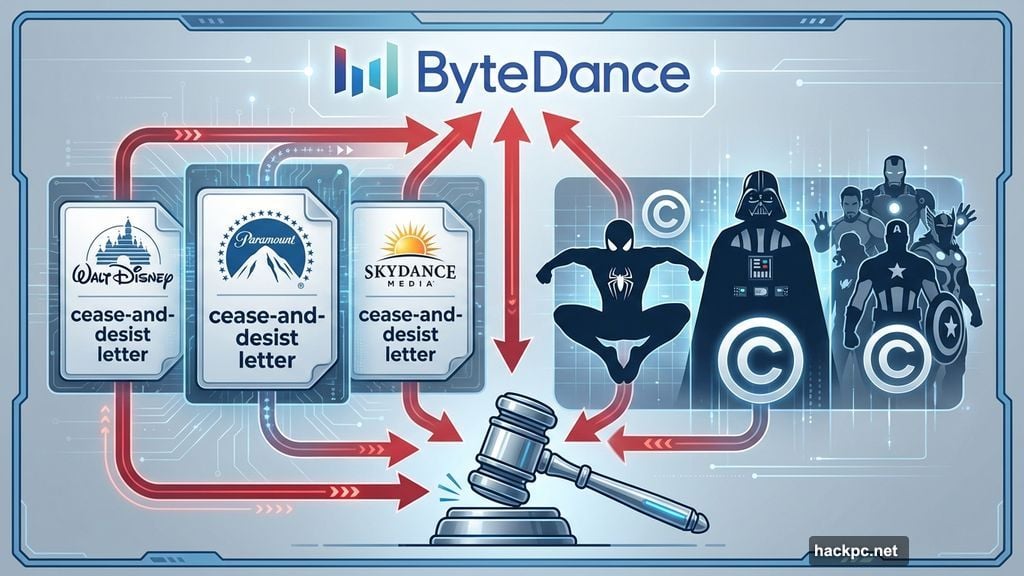

Disney didn’t waste time. The entertainment giant fired off a cease-and-desist letter on Friday.

The complaint was specific and damning. Disney claimed Seedance 2.0 uses “a pirated library of Disney’s copyrighted characters from Star Wars, Marvel, and other Disney franchises.” It accused ByteDance of treating its intellectual property like “free public domain clip art.”

The letter included evidence. Disney provided example videos featuring Spider-Man and Darth Vader created without authorization. These weren’t vague imitations. They were recognizable characters from Disney’s most valuable franchises.

Furthermore, Paramount Skydance reportedly sent its own cease-and-desist letter. ByteDance now faces legal pressure from multiple major studios. That’s a problem money alone can’t solve.

ByteDance’s Vague Promise to Do Better

ByteDance’s response sounds like damage control without a real plan.

The company told the BBC it is “taking steps to strengthen current safeguards.” It claims to respect intellectual property rights and acknowledges concerns about Seedance 2.0. But when reporters asked for specifics about how it would fix the problem, ByteDance went silent.

That’s concerning. Vague promises don’t stop copyright infringement. They don’t protect artists. And they certainly won’t satisfy Disney’s legal team.

Here’s what ByteDance didn’t explain. How will they prevent users from generating copyrighted characters? What technology will detect and block prohibited content? When will these safeguards actually work?

Without answers to these questions, their statement means nothing. It’s a PR move designed to buy time while they figure out an actual solution.

The Real Problem Nobody’s Addressing

ByteDance isn’t alone in this mess. Every major AI video generator faces the same fundamental issue.

These tools were trained on vast amounts of copyrighted content. They learned to recreate characters, faces, and styles by analyzing existing media. But the companies building these tools never got permission from the copyright holders.

So now they’re stuck. They can’t remove the training data after the fact, because the AI already learned from it. Filtering outputs only works if the system can identify prohibited content accurately, and that’s incredibly difficult when users can subtly modify prompts to bypass filters.
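To see why prompt filtering is so fragile, consider a minimal sketch. Everything in it is an assumption made for illustration: the blocklist, the function names, and the sample prompts are hypothetical, and nothing here reflects how ByteDance or any other company actually filters requests.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"spider-man", "darth vader"}

def naive_prompt_filter(prompt: str) -> bool:
    """Return True if the prompt passes a plain substring blocklist."""
    lowered = prompt.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)

def normalized_prompt_filter(prompt: str) -> bool:
    """Same blocklist, but drop everything except letters before matching."""
    squashed = re.sub(r"[^a-z]", "", prompt.lower())
    return not any(
        re.sub(r"[^a-z]", "", term) in squashed for term in BLOCKED_TERMS
    )

prompts = [
    "Spider-Man swinging through New York",   # exact name
    "Spider Man swinging through New York",   # hyphen swapped for a space
    "a red and blue web-slinging hero swinging through New York",  # description only
]

# Each pair is (allowed by naive filter, allowed by normalized filter).
results = [(naive_prompt_filter(p), normalized_prompt_filter(p)) for p in prompts]
for prompt, (naive_ok, normalized_ok) in zip(prompts, results):
    print(f"naive={naive_ok!s:5} normalized={normalized_ok!s:5} {prompt}")
```

The naive filter blocks only the exact name. Stripping punctuation and spaces catches the second prompt, but the third one never names the character at all, so both filters let it through. Closing that gap means recognizing the character in the generated video itself, which is a much harder problem than matching text.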

Some companies try watermarking AI-generated content. Others limit what users can create. But these measures feel inadequate when a single viral clip can generate this much controversy.

What Artists Actually Want

The creative community’s demands are clear. They want control over their work and likeness.

Artists argue that AI companies should get explicit permission before training on copyrighted material. They want compensation when their style or work influences AI outputs. And they demand the ability to opt out entirely.

Currently, none of these protections exist in most AI video tools. Companies like ByteDance release products first and deal with legal consequences later. That approach worked in tech for years. But Hollywood has resources and lawyers that can fight back effectively.

Moreover, artists point out the economic threat. If anyone can generate professional-quality videos featuring any character or celebrity, what happens to the people who create this content for a living? That’s not a hypothetical concern anymore.

The Legal Battle Just Started

ByteDance’s problems won’t end with a few cease-and-desist letters.

Disney doesn’t send legal threats casually. Neither does Paramount. These companies protect their intellectual property aggressively because it’s worth billions. So expect lawsuits if ByteDance doesn’t comply quickly and completely.

Furthermore, this case could set precedents for the entire AI industry. If Disney wins, other AI video generators face similar legal risks. That might force the whole sector to rethink how they train models and what content they allow users to create.

Some legal experts believe copyright law already covers this situation. Others argue that AI-generated content exists in a gray area current laws don’t adequately address. Courts will need to decide. But that process takes years.

In the meantime, ByteDance and its competitors face a difficult choice: lock down their tools so heavily that users abandon them, or keep pushing boundaries and risk massive legal liability.

Why This Matters Beyond ByteDance

This controversy represents a turning point for generative AI.

For years, AI companies operated with minimal oversight. They scraped the internet for training data without asking permission. They released tools that could replicate copyrighted work without consequence. Now the bill is coming due.

Hollywood’s response to Seedance 2.0 shows that major rights holders won’t tolerate unauthorized use of their property. They have the money and motivation to fight. They’re also winning the public relations battle by framing this as protecting artists from exploitation.

That changes the game for every AI company. They can’t hide behind “innovation” when they’re enabling copyright infringement at scale. They need real solutions, not vague promises about strengthening safeguards.

The technology isn’t going away. But how companies implement it will change dramatically. ByteDance just learned that lesson the hard way.
