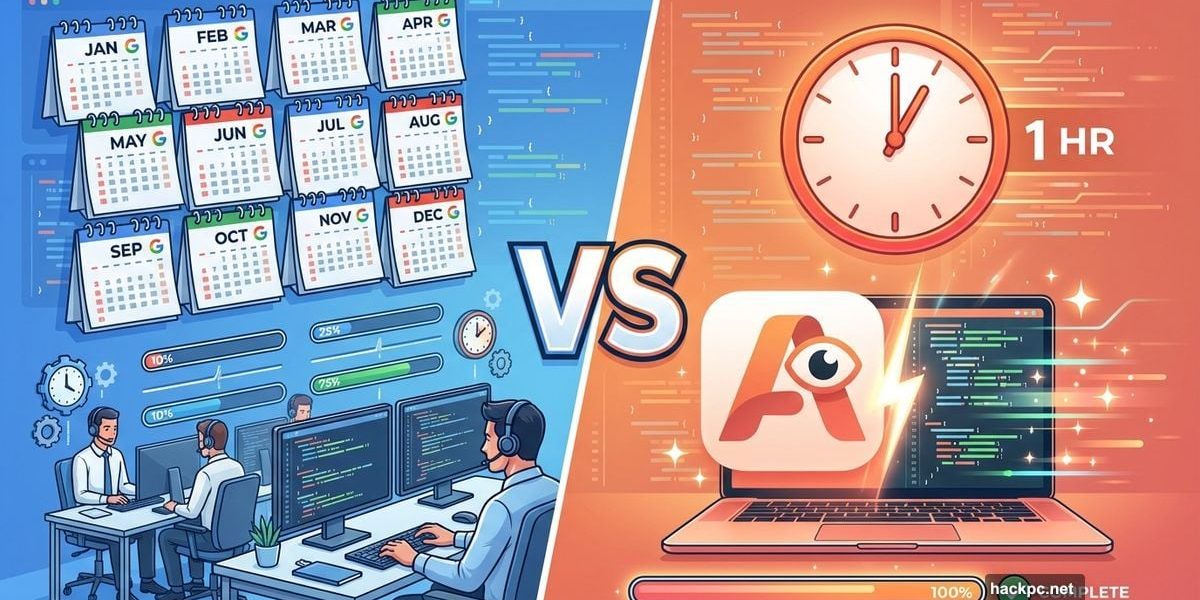

A Google engineer just dropped a bombshell about AI coding tools. By her account, Claude Code generated in 60 minutes a working version of what her team spent a year building.

This isn’t hype. It’s a reality check about how fast AI development tools are advancing. Plus, it comes from someone who would know: a Principal Engineer at Google working on the Gemini API.

Google Engineer Tests Claude Code on Real Problem

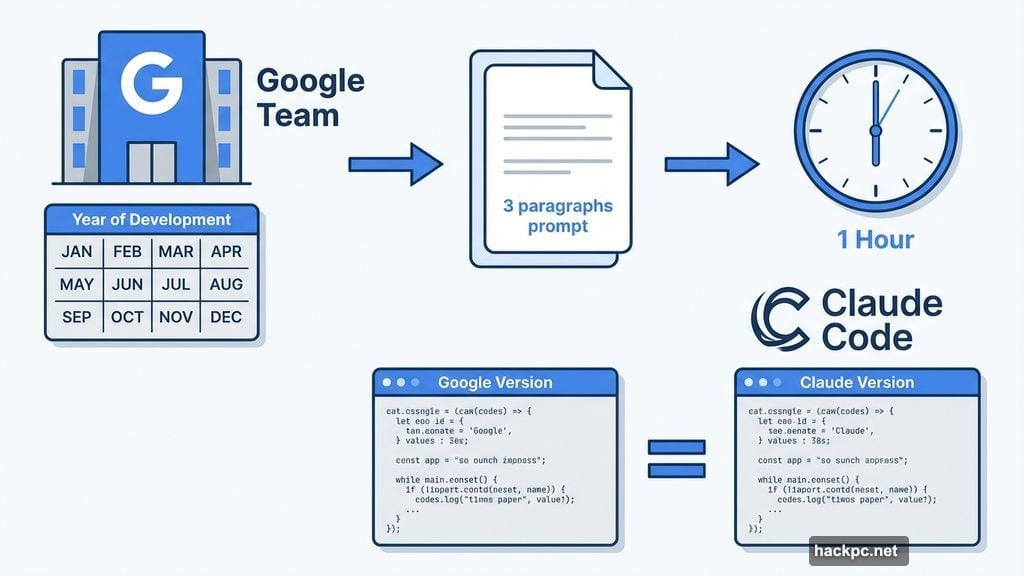

Jaana Dogan fed Claude Code a problem her team had been wrestling with since last year. The task involved building distributed agent orchestrators, which are systems that coordinate multiple AI agents working together.

She kept the prompt simple. Just three paragraphs describing the problem. No detailed specifications or hand-holding.

One hour later, Claude Code delivered a working system. Not perfect, but comparable to what Google had built over 12 months of exploration and iteration.

“The output isn’t perfect and needs refinement,” Dogan admits. But here’s the kicker: by her account, it was comparable to Google’s internal solution. That’s significant when you consider Google has some of the world’s best engineering talent.

The prompt wasn’t even that detailed. Dogan simplified the problem since she couldn’t share internal company details. Yet Claude Code still delivered a functional starting point.

The Catch: It’s Not Production-Ready

After her comments went viral, Dogan clarified what actually happened. Google built several versions of this system over the past year. Different approaches, various tradeoffs. No clear winner emerged.

So she took the best surviving ideas and fed them to Claude Code. The tool generated a “decent toy version” in about an hour.

“What I built this weekend isn’t production grade and is a toy version, but a useful starting point,” Dogan explained. Still, she’s impressed by the quality considering she didn’t provide detailed design guidance.

Here’s what matters though. The real work happened before the coding started. Years of learning, grounding ideas in products, discovering patterns that last. That experience is irreplaceable.

But once you have that knowledge? Building becomes trivial with today’s AI tools. That’s the part that wasn’t possible before.

Moreover, starting fresh lets you avoid legacy baggage. No workarounds for old decisions. No technical debt. Just clean implementation of proven concepts.

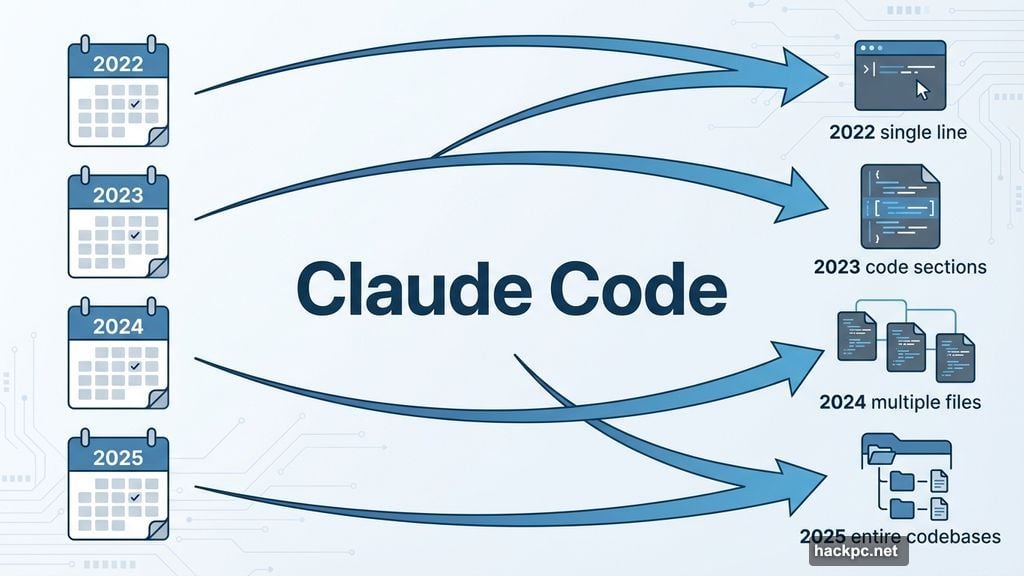

AI Coding Evolution Happened Faster Than Expected

Dogan outlined how quickly these tools improved. In 2022, they could autocomplete single lines of code. Basic stuff.

By 2023, they handled entire code sections. Still limited but useful.

In 2024, they worked across multiple files and built simple applications. Getting interesting.

Now in 2025? They create and restructure entire codebases. That’s a massive leap.

Back in 2022, Dogan didn’t think 2024’s capabilities could scale globally. In 2023, today’s level seemed five years away. Yet here we are.

“Quality and efficiency gains in this domain are beyond what anyone could have imagined so far,” she wrote. That’s not promotional talk. That’s an engineer who watched her predictions get shattered by reality.

Claude Code’s Secret: Self-Checking Feedback Loops

Boris Cherny created Claude Code. His top tip? Give the tool a way to verify its own work.

Cherny says this feedback loop improves the quality of the final result two to three times over. The AI generates code, checks it, finds issues, fixes them. Repeat until solid.
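To make the generate, check, fix cycle concrete, here is a minimal sketch in Python. It is not how Claude Code works internally. The names `refine_until_verified`, `toy_generate`, and `toy_verify` are hypothetical, and the "model" is a stand-in function that returns a buggy draft first and a corrected one once it receives feedback.

```python
from typing import Callable, Optional, Tuple


def refine_until_verified(
    generate: Callable[[Optional[str]], str],
    verify: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Run a generate -> check -> fix loop (illustrative only).

    generate(feedback) returns a candidate; feedback is None on the first round.
    verify(candidate) returns None when the candidate passes, else an error message.
    Returns the passing candidate and the number of rounds it took.
    """
    feedback: Optional[str] = None
    for round_number in range(1, max_rounds + 1):
        candidate = generate(feedback)
        feedback = verify(candidate)
        if feedback is None:
            return candidate, round_number
    raise RuntimeError(f"no passing candidate after {max_rounds} rounds: {feedback}")


def toy_generate(feedback: Optional[str]) -> str:
    # Hypothetical stand-in for a model call: the first draft has a bug,
    # and the revision produced after feedback fixes it.
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def toy_verify(source: str) -> Optional[str]:
    # The "way to verify its own work": execute the candidate and test it.
    namespace: dict = {}
    exec(source, namespace)
    result = namespace["add"](2, 3)
    return None if result == 5 else f"add(2, 3) returned {result}, expected 5"


code, rounds = refine_until_verified(toy_generate, toy_verify)
print(rounds)
print(code)
```

In a real setup the verifier would be whatever check the project already trusts, such as a test suite, a type checker, or a browser run. The loop itself stays the same.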

Cherny suggests starting sessions in plan mode. Iterate with Claude until the approach makes sense. Then let it execute in one pass.

For recurring tasks, he uses slash commands and subagents. These automate specific workflows like code simplification or app testing.

Longer tasks get background agents that review Claude’s work when complete. He also runs multiple Claude instances in parallel for different tasks simultaneously.
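The pattern of parallel workers followed by a review step can be sketched with Python's `asyncio`. This is only an illustration of the workflow's shape. `run_worker` and `review` are hypothetical stand-ins for agent sessions, not Claude Code APIs, and the review rule is a placeholder.

```python
import asyncio
from typing import Dict, List


async def run_worker(task: str) -> str:
    # Hypothetical stand-in for one agent session working on a task.
    await asyncio.sleep(0.01)
    return f"draft for {task}"


async def review(task: str, draft: str) -> Dict[str, object]:
    # Hypothetical reviewer that inspects a finished draft.
    # The approval rule here is a placeholder, not a real quality check.
    await asyncio.sleep(0.01)
    return {"task": task, "draft": draft, "approved": draft.endswith(task)}


async def work_then_review(task: str) -> Dict[str, object]:
    # Each task gets its own worker, and its review starts once that worker is done.
    draft = await run_worker(task)
    return await review(task, draft)


async def run_all(tasks: List[str]) -> List[Dict[str, object]]:
    # Run every task concurrently; gather returns results in input order.
    return await asyncio.gather(*(work_then_review(t) for t in tasks))


results = asyncio.run(run_all(["simplify parser", "test login flow"]))
for item in results:
    print(item["task"], item["approved"])
```

The tasks are independent, so none of them waits on another. Only the review of a given task waits for that task's own draft.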

The default model is Opus 4.5. During code reviews, Cherny’s team tags Claude directly in pull requests to add documentation automatically.

Claude Code integrates with external tools too. Slack for communication. BigQuery for data analysis. Sentry for error logs. That makes it part of the workflow, not a separate tool you switch to.

Google Can’t Use Claude Code Internally

Here’s an interesting detail. Google only allows Claude Code for open-source projects. Internal work is off-limits.

Someone asked Dogan when Gemini would reach this level. Her response was telling: “We are working hard right now. The models and the harness.”

Translation: Google’s catching up. They’re not there yet.

But Dogan doesn’t see this as a competition problem. “This industry has never been a zero-sum game,” she notes. Giving competitors credit makes sense when it’s deserved.

“Claude Code is impressive work, I’m excited and more motivated to push us all forward,” she adds. That’s the right attitude from someone at the cutting edge.

What This Means for Developers

AI coding tools aren’t replacing developers. But they’re changing what development means.

The value has shifted from implementation to architecture. From typing code to knowing what to build. From debugging syntax to designing systems.

Dogan’s experience illustrates this. Her years of expertise identified the problem and shaped the solution. Claude Code just did the typing faster.

However, this raises questions about junior developers. How do they build that expertise if AI handles the implementation work? That’s a real concern the industry needs to address.

For now, the takeaway is clear. Developers with deep domain knowledge can leverage these tools for massive productivity gains. Those without that foundation will struggle to direct the AI effectively.

The tools amplify expertise. They don’t replace it. That distinction matters as we figure out what software development looks like in 2026 and beyond.
