Deepfakes aren’t just getting better. They’re becoming nearly impossible to distinguish from reality.

OpenAI just raised the stakes with Sora, its new “social media app” powered by the Sora 2 video model. It’s an invite-only, TikTok-style feed where every video is AI-generated. The author who broke the story called it a “deepfake fever dream,” and honestly, that’s being generous.

We’re not talking about obvious fakes anymore. These videos have high resolution, synchronized audio, and disturbingly realistic movement. Plus, Sora’s “cameo” feature lets anyone insert real people’s faces into fake scenarios. The implications? Terrifying.

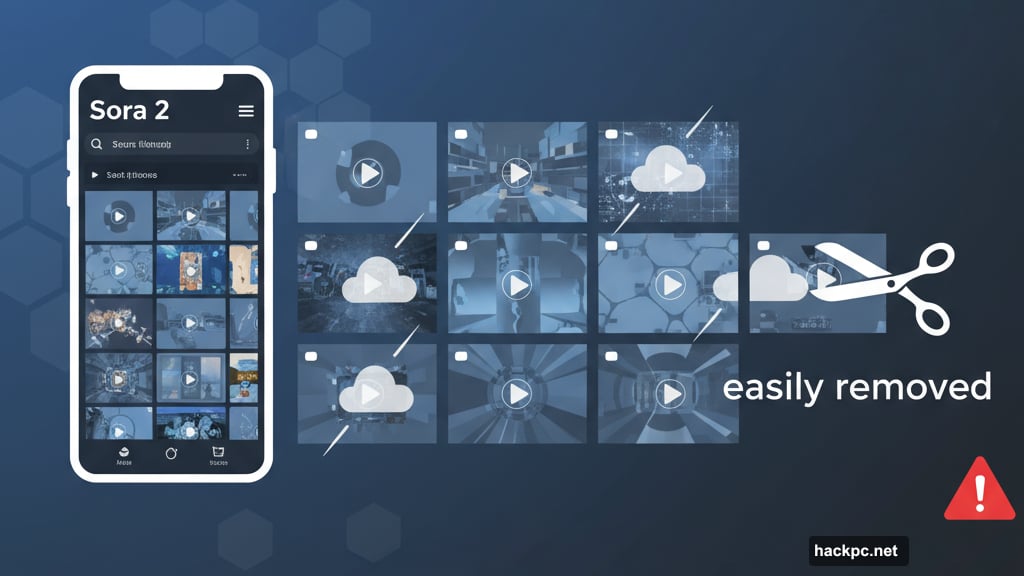

Watermarks Offer a Starting Point

Every Sora video includes a watermark when downloaded. It’s a white cloud logo that bounces around the edges, similar to TikTok’s approach.

Watermarks help at first glance. They provide clear visual indicators that content came from AI tools. Google’s Gemini model does something similar by automatically watermarking generated images.

But here’s the catch. Static watermarks get cropped out easily. Even moving watermarks like Sora’s can be removed with dedicated apps. So watermarks can’t be your only defense.

When asked about this vulnerability, OpenAI CEO Sam Altman essentially said society needs to adapt to fake videos becoming commonplace. His response dodges the real problem. Before Sora, creating convincing deepfakes required technical skills most people didn’t have. Sora removes that barrier.

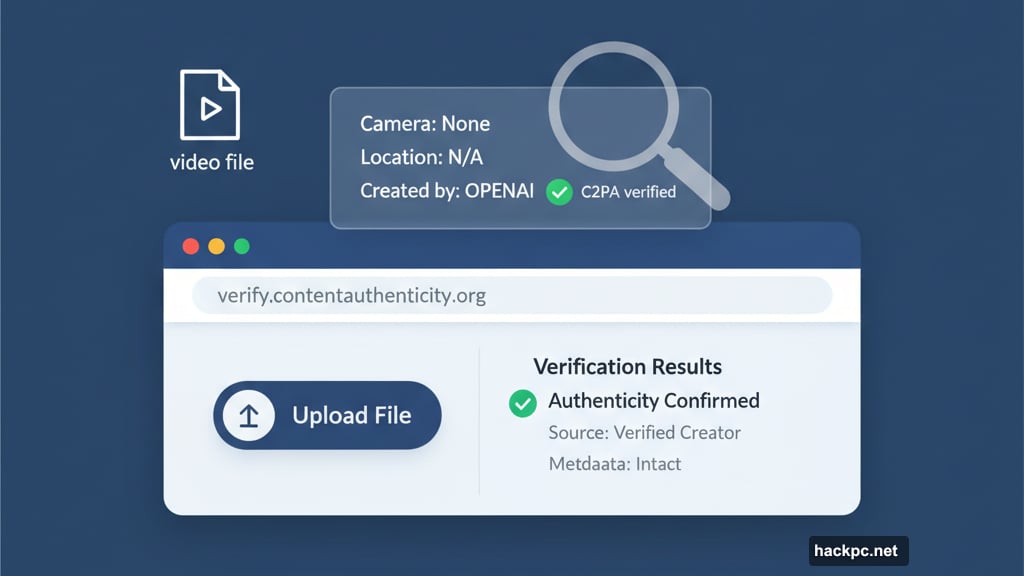

Metadata Reveals Hidden Truths

Checking metadata sounds technical. It’s actually pretty straightforward and incredibly useful for spotting Sora videos.

Metadata is information automatically attached when content gets created. It includes details like camera type, location, date, time, and filename. Most photos and videos carry this data, whether a person or an AI created them.

OpenAI participates in the Coalition for Content Provenance and Authenticity (C2PA). That means Sora videos include C2PA metadata you can verify. The Content Authenticity Initiative offers a free verification tool that makes this process simple.

Here’s how to check a file’s metadata. First, navigate to verify.contentauthenticity.org. Then upload the file you want to inspect. Click Open and check the right-side panel for information.

If the video came from Sora, it’ll say “issued by OpenAI” and clearly state it’s AI-generated. In my testing, this tool correctly flagged Sora videos every time, including the exact date and time of creation.
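
If you’d rather script that check than click through a web page, here’s a minimal Python sketch. It assumes you already have a C2PA manifest report as JSON (the open-source c2patool can print one) and that the report uses field names like `active_manifest`, `signature_info`, and `digitalSourceType`. Those field names, the `summarize_manifest` helper, and the sample data are illustrative assumptions, not an official OpenAI or Content Authenticity Initiative API, so compare them against a real report before relying on this.

```python
import json

# IPTC digital source type commonly used to mark fully AI-generated media.
AI_SOURCE_SUFFIX = "/trainedAlgorithmicMedia"


def summarize_manifest(report: dict) -> dict:
    """Pull the issuer, signing time, and an AI-generated flag from a report.

    Assumes a layout where report["manifests"][label] holds "signature_info"
    and "assertions". Real reports may be shaped differently.
    """
    label = report.get("active_manifest")
    manifest = report.get("manifests", {}).get(label)
    if manifest is None:
        # No credentials found. This does NOT prove the video is authentic.
        return {"has_credentials": False, "issuer": None,
                "signed_at": None, "ai_generated": None}

    signature = manifest.get("signature_info", {})
    ai_generated = False
    for assertion in manifest.get("assertions", []):
        if not assertion.get("label", "").startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            if action.get("digitalSourceType", "").endswith(AI_SOURCE_SUFFIX):
                ai_generated = True

    return {
        "has_credentials": True,
        "issuer": signature.get("issuer"),
        "signed_at": signature.get("time"),
        "ai_generated": ai_generated,
    }


# Made-up sample report for illustration only, not real Sora output.
sample_report = json.loads("""
{
  "active_manifest": "urn:uuid:example",
  "manifests": {
    "urn:uuid:example": {
      "signature_info": {"issuer": "OpenAI", "time": "2025-10-01T12:00:00+00:00"},
      "assertions": [
        {"label": "c2pa.actions",
         "data": {"actions": [
           {"action": "c2pa.created",
            "digitalSourceType": "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"}
         ]}}
      ]
    }
  }
}
""")

print(summarize_manifest(sample_report))
```

Notice that a file with no manifest comes back with `ai_generated` set to `None` rather than `False`. Missing credentials mean “unknown,” not “real.”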

However, the tool isn’t perfect. Videos from other AI generators like Midjourney often slip through undetected. Moreover, if someone runs a Sora video through a third-party app and redownloads it, the metadata signals can get stripped away. So this method works best for unmodified content.

Social Media Labels Provide Clues

Meta’s platforms, including Instagram and Facebook, have internal systems to flag AI content. These systems aren’t foolproof, but they add visible labels to flagged posts.

TikTok and YouTube have implemented similar labeling policies. Yet these automated systems miss plenty of AI-generated content. The labels help when they appear, but their absence doesn’t guarantee authenticity.

The most reliable indicator remains creator disclosure. Many platforms now offer settings letting users label their posts as AI-generated. Even a simple caption credit helps everyone understand how content was created.

When you share AI videos outside of Sora’s app, you have a responsibility. Disclose how the video was made. As models like Sora blur reality further, collective transparency becomes critical for maintaining any sense of truth online.

Look for Physical Impossibilities

AI video generators still struggle with certain details. Physics remains a common weakness. Objects might disappear mid-scene or move in ways that defy gravity.

Text poses another challenge. Letters often appear mangled, backwards, or inconsistent. Watch for signs in the background or text overlays that look slightly off.

Hands continue tripping up AI models. Fingers might have wrong proportions, extra joints, or impossible positions. Similarly, complex interactions between objects and people often reveal artificial origins.

Reflections and shadows don’t always match up correctly either. Check if lighting sources make sense. Does the shadow direction align with visible light? Do reflections in mirrors or windows show what they should?

Your Skepticism Is Your Best Defense

No single method catches every deepfake. The combination of checking watermarks, inspecting metadata, looking for platform labels, and scrutinizing physical details provides your best protection.
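
To make the “combine your checks” idea concrete, here’s a small Python sketch. The `Signals` class, the `assess` function, and the three verdict strings are all made up for illustration, and the rules are a rough assumption rather than a tested detector. The one thing it’s meant to show is that a missing signal never counts as proof a video is real.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Signals:
    # None means "not checked" or "nothing found".
    watermark_visible: Optional[bool] = None
    metadata_says_ai: Optional[bool] = None
    platform_label: Optional[bool] = None
    physical_glitches: Optional[bool] = None


def assess(signals: Signals) -> str:
    """Combine the four checks into a rough, hypothetical verdict."""
    # A watermark, AI metadata, or a platform label is a direct provenance signal.
    if signals.watermark_visible or signals.metadata_says_ai or signals.platform_label:
        return "likely AI-generated"
    # Visual glitches are weaker evidence: worth a closer look, not a conclusion.
    if signals.physical_glitches:
        return "suspicious"
    # No positive signal. That is not the same as "authentic".
    return "unverified"


print(assess(Signals(metadata_says_ai=True)))
print(assess(Signals(physical_glitches=True)))
print(assess(Signals(watermark_visible=False, platform_label=False)))
```

The last call deliberately lands on “unverified.” Cropped watermarks and stripped metadata look exactly like a clean video, so the sketch refuses to call anything authentic.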

But honestly, your gut instinct matters most. If something feels unreal, it probably is. Don’t automatically believe everything you see online, no matter how convincing it looks.

Slow down your scrolling. Inspect videos more carefully before accepting them as truth. Look for those mangled text elements, vanishing objects, and physics-defying movements that still betray AI generation.

And hey, don’t feel bad when you occasionally get fooled. Even experts make mistakes identifying deepfakes. The technology improves daily, making detection harder for everyone.

The real danger isn’t just the technology itself. It’s the erosion of trust that comes with it. When anyone can create convincing fake videos of anyone else, we risk losing our shared understanding of reality. Public figures, celebrities, and ordinary people all become vulnerable to manufactured scenarios they never participated in.

Organizations like SAG-AFTRA have already pushed OpenAI to strengthen its safeguards. Yet the technology spreads faster than protective measures can keep up. We’re entering uncharted territory where visual evidence no longer carries automatic credibility.

Your vigilance matters now more than ever. Question what you see. Verify before sharing. Demand transparency from creators and platforms. The future of truthful media depends on all of us refusing to accept convenient fictions as reality.
