AI-generated videos aren’t just getting better. They’re becoming nearly impossible to distinguish from reality.

Scroll through Instagram, TikTok, or YouTube today. That cute puppy video? Might be AI. The celebrity endorsement? Probably fake. The breaking news clip? Could be completely fabricated.

We’ve entered an era where anyone with a smartphone can create convincing fake videos of anyone doing anything. Tools like OpenAI’s Sora and Google’s Veo 3 have erased many of the obvious telltale signs that once made deepfakes easy to spot.

So how do you protect yourself from being fooled? Let’s break down the practical methods that actually work.

Why Modern AI Videos Fool Everyone

Sora and similar tools produce videos that look shockingly real. High-resolution video paired with synchronized audio creates content that passes the eye test.

Sora’s “cameo” feature makes things worse. It lets users insert people’s faces into AI-generated scenes. That means your favorite celebrity could appear to endorse products they’ve never heard of. Public figures and regular people alike become unwilling stars in fabricated content.

Plus, these tools require zero technical skills. Download an app, upload a photo, describe what you want. The AI handles everything else. That accessibility has democratized fake video creation in ways experts had warned about for years.

Tech companies rushed to release competing models throughout 2025. Each new release pushed capabilities further. What seemed impossible last year became routine this year.

Check for the Watermark First

Every Sora video includes a visible watermark when downloaded: a white cloud icon that moves around the edges of the frame. It works much like TikTok’s watermark.

Watermarks provide the clearest visual indicator of AI content. Google’s Veo 3 automatically watermarks its videos too. When you spot that mark, you know AI created what you’re watching.

But watermarks aren’t foolproof. Static watermarks are easy to crop out, and even moving ones can be removed with apps built specifically for that purpose.

So while watermarks help, don’t rely on them exclusively. Creators determined to deceive will remove them before sharing.

Dive Into the Metadata

Metadata sounds technical and boring. But checking it takes less than a minute and can reveal how content was created.

Nearly every photo and video file contains metadata: details like creation date, location, camera type, and filename. AI-generated content often includes specific markers identifying its artificial origins.

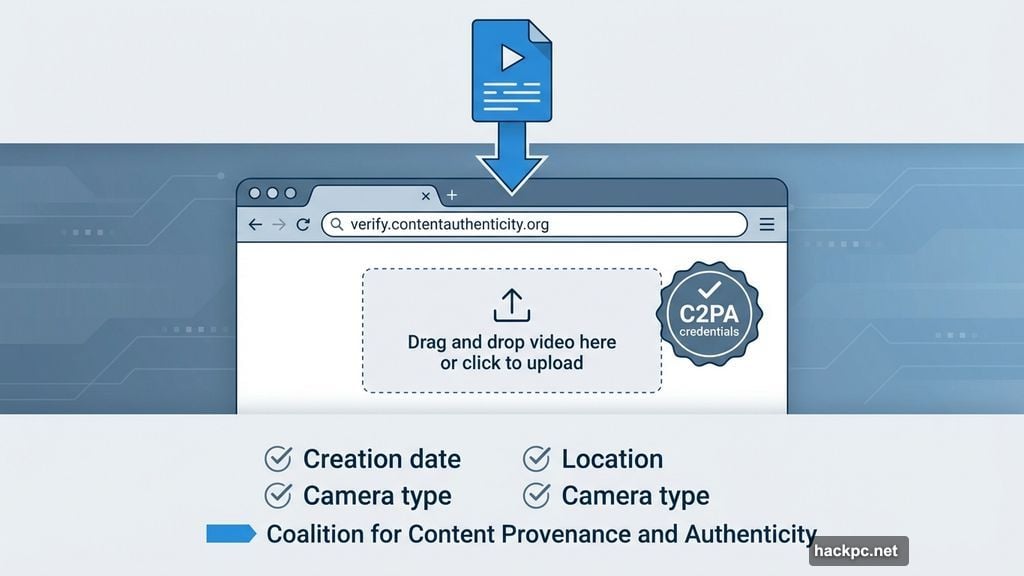

OpenAI participates in the Coalition for Content Provenance and Authenticity (C2PA). That means Sora videos carry C2PA credentials identifying them as AI-generated.

How to Verify Video Metadata

The Content Authenticity Initiative offers a free verification tool. Here’s how to use it:

First, navigate to verify.contentauthenticity.org in your browser. Then upload the suspicious video file. Finally, check the right panel for content summary information.

Sora videos will show “issued by OpenAI” in their metadata. The tool also displays the creation date and confirms AI generation.

However, this method has limitations. Videos edited through third-party apps might strip metadata. Some AI tools don’t include C2PA credentials at all. I tested Midjourney videos and they didn’t get flagged.

Still, checking metadata takes 30 seconds. It’s worth trying before trusting suspicious content.
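For readers comfortable with a little scripting, here is a rough look at where this metadata lives inside a file. It is a minimal sketch, assuming an MP4 or MOV file (the ISO BMFF container format) and using only Python’s standard library. The function names are hypothetical, and the C2PA check is a crude byte search for the “jumb” and “c2pa” labels that C2PA manifest stores use, not a cryptographic verification. It does not replace the Content Authenticity Initiative tool.

```python
import struct
from pathlib import Path


def list_top_level_boxes(data: bytes) -> list[str]:
    """Return the four-character types of top-level ISO BMFF (MP4/MOV) boxes."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        # Each box starts with a 32-bit big-endian size and a 4-byte type.
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        if size == 1:
            # A 64-bit "largesize" follows the type field.
            if offset + 16 > len(data):
                break
            size = struct.unpack(">Q", data[offset + 8:offset + 16])[0]
        elif size == 0:
            # Size 0 means the box runs to the end of the file.
            size = len(data) - offset
        if size < 8:
            break  # Malformed size; stop instead of looping forever.
        boxes.append(box_type.decode("latin-1"))
        offset += size
    return boxes


def looks_like_c2pa(data: bytes) -> bool:
    """Heuristic only: C2PA manifest stores are JUMBF boxes labelled 'c2pa'."""
    return b"jumb" in data and b"c2pa" in data


def scan_file(path: str) -> None:
    """Print the top-level box layout and whether C2PA labels appear anywhere."""
    data = Path(path).read_bytes()
    print("Top-level boxes:", list_top_level_boxes(data))
    print("Possible C2PA manifest:", looks_like_c2pa(data))
```

Calling `scan_file("suspicious.mp4")` on a downloaded clip prints its box layout (C2PA data in MP4 files typically sits in a top-level `uuid` box) and a yes/no hint. A positive result only means the labels appear somewhere in the file. A negative result proves nothing, since re-encoding or editing can strip this data entirely.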

Watch for Platform Labels

Meta’s platforms, such as Instagram and Facebook, automatically flag some AI content. Their systems aren’t perfect, but labeled posts clearly identify AI origins.

TikTok and YouTube have implemented similar labeling policies. When these platforms detect AI content, they add visible markers to posts.

These automated systems catch some AI videos but miss plenty. Platform detection improves constantly, yet determined creators find workarounds.

The most reliable method remains creator disclosure. Responsible users label their AI content voluntarily, either through platform tools or simple captions.

We all share responsibility here. If you create AI content, disclose it. If you share someone else’s AI content, mention its origins. Small actions prevent massive confusion.

Train Your Eye for Visual Glitches

AI video generators still make mistakes. Text often appears mangled or backwards. Objects sometimes disappear between frames. Physics breaks down in subtle ways.

Watch hands carefully. AI struggles with fingers, often adding extra digits or creating unnatural positions. Check reflections and shadows too. They frequently don’t match the scene lighting.

Motion can reveal AI origins as well. Objects might float unnaturally or move in impossible ways. Water and fire effects often look slightly off, lacking the random chaos of real elements.

These tells become less obvious as models improve. What worked to spot fakes six months ago might not work today. That’s why you need multiple verification methods working together.

Stay Skeptical About Everything

No single method catches every deepfake. Watermarks get removed. Metadata gets stripped. Platform labels miss content. Visual tells become harder to spot.

Your best defense? Healthy skepticism about everything you see online.

If something seems too perfect, question it. If a video shows someone acting completely out of character, investigate further. When breaking news appears only on social media without mainstream coverage, wait for confirmation.

Even experts get fooled sometimes. Don’t feel bad if you fall for convincing fakes. Just commit to verifying before sharing.

The Bigger Problem Nobody’s Solving

Tech companies keep releasing more powerful AI video tools. Meanwhile, detection methods lag behind generation capabilities.

OpenAI CEO Sam Altman suggested society needs to adapt to fake videos being everywhere. That’s true but insufficient. We need better tools, clearer standards, and stronger accountability.

Until then, we’re all playing defense against an ever-growing flood of fabricated content. The responsibility falls on creators to label their work, platforms to detect and flag AI content, and viewers to verify before trusting.

It’s exhausting. But it’s necessary. The alternative is a world where we can’t trust anything we see. We’re not quite there yet, but we’re getting dangerously close.

Stay vigilant. Question everything. Verify before sharing. That’s the reality of consuming media in 2025.
