AI image and video generators aren’t all the same anymore. Each one has its own style, quirks, and strengths.

Professional creators now treat AI model selection like choosing camera lenses or brushes. The tool shapes the final result. So picking the right one isn’t just technical anymore. It’s part of the creative process itself.

This shift reveals how far generative AI has come in 2025. We’ve moved from “can this even work?” to “which one works best for this specific task?”

Every AI Model Has Its Own Style

Creators often describe AI models as having “personalities.” That’s not literal, obviously. These tools aren’t human.

But the term fits. Each model handles specific tasks differently. Some excel at photorealism. Others lean cinematic or surreal. And users notice these tendencies quickly.

Tiffany Kyazze runs AI Flow Club, a community teaching people to use AI tools. She sees creators humanize their software constantly. They call models “the creative one” or “the detailed one.” That’s not random. It reflects real differences in how these tools behave.

Dave Clark directs films using AI at Promise AI. He thinks of each model as interpreting the world differently. Some lean cinematic. Others feel dreamlike. So understanding each model’s tendencies helps him translate creative vision into effective prompts.

This personality concept didn’t exist two years ago. Back then, we had fewer choices. Now? The market is crowded with options from Google, OpenAI, Adobe, Runway, Pika, and Luma.

Training Data Shapes Model Behavior

Why do these personalities emerge? Part of it comes from training data.

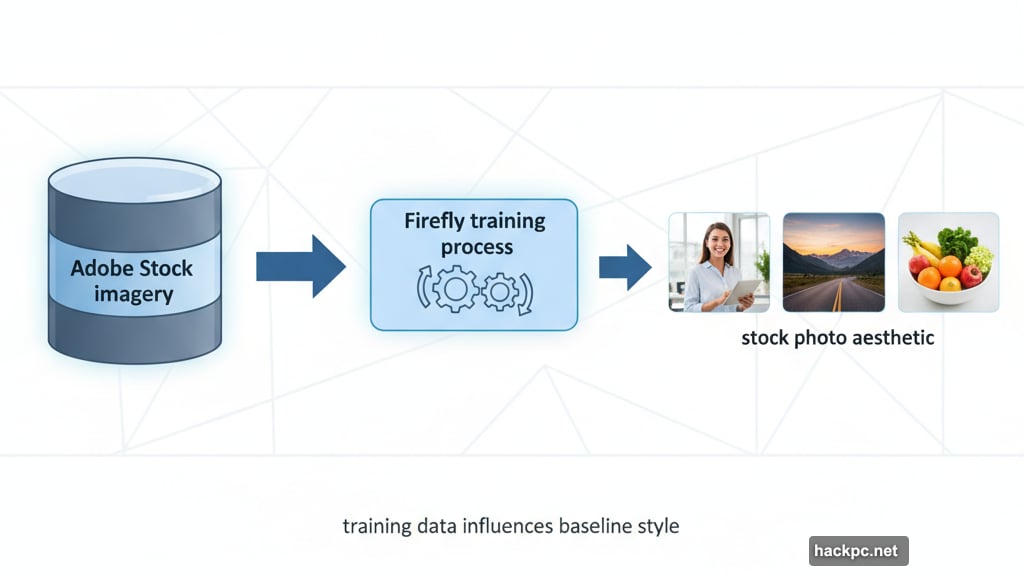

Alexandru Costin leads generative AI at Adobe. He explains that models can be “taught” specific styles during late-stage training. Some companies create opinionated models that favor certain aesthetics. Others try staying neutral.

Plus, the source material matters. Adobe’s Firefly models were trained on licensed Adobe Stock imagery, which is a big reason Firefly outputs often look like stock photos. Adobe is working to fix that, but it shows how training data shapes a model’s baseline style.

Different companies make different choices about what data they use and how they fine-tune models. Those decisions create the distinct personalities creators notice.

So it’s not just marketing hype. Real technical differences create these tendencies.

What Each Major Model Does Best

Creators I spoke with generally agreed on which models excel at what. Here’s the breakdown based on their experiences:

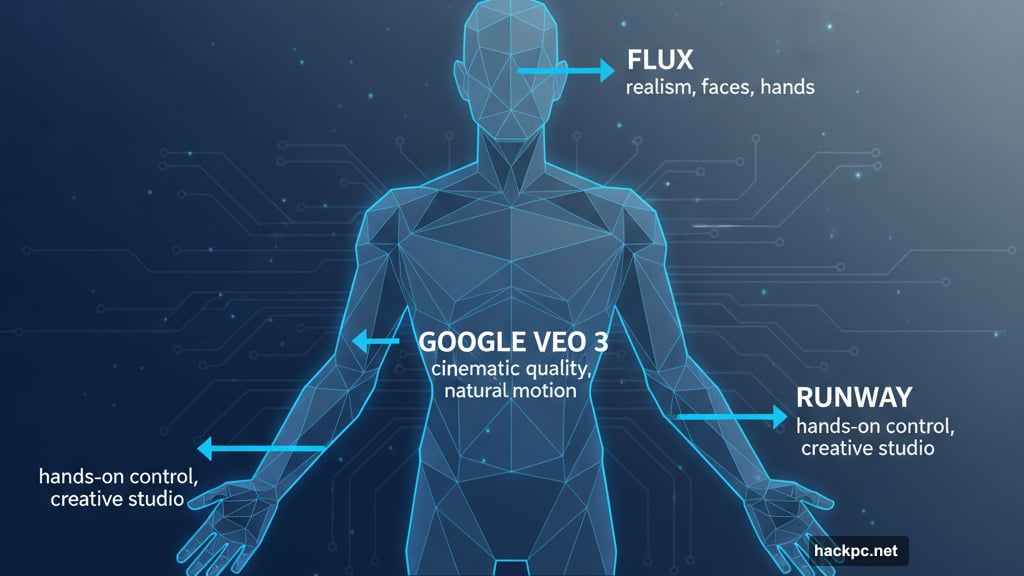

Google Veo 3 earned a reputation for cinematic quality. Motion looks natural. Output resolution impresses. It’s a go-to for video work that needs polish.

Flux handles realism well, especially human features. Faces, hands, and body proportions tend to look correct more often than with other models.

Runway functions as a full creative studio. It offers tons of hands-on control for creators who want precise adjustments. That flexibility comes with a learning curve though.

Sora works great for ideation and exploration. Its social media presence also makes it popular for meme creation. But it’s less reliable for finished professional work.

Midjourney remains the artistic choice. Its outputs lean stylized and creative. If you want photorealism, look elsewhere. If you want art, Midjourney delivers.

Google’s Gemini 2.5 Flash Image (nicknamed “Nano Banana” during testing) excels at character consistency. That makes it valuable for e-commerce and social media content that needs brand coherence.

Adobe Firefly Image Model 5 produces commercially safe results. If you need content cleared for professional use without licensing headaches, Firefly is the practical choice.

These reputations can shift as companies release updates. A model weak at something today might improve dramatically next month. That’s part of why staying flexible matters.

Using Multiple Models Gets Better Results

Bouncing between different AI tools might sound tedious. But professional creators swear by it.

Clark’s team used multiple AI models for a short film called “My Friend, Zeph.” They mixed Adobe Firefly, Google Veo 3.1, and Luma Ray3 with traditional tools like Photoshop and Premiere Pro.

This hybrid approach let them visualize the story world earlier. They iterated faster. And they made stronger creative choices before shooting anything physical.

Kyazze sees similar patterns across her community. The creators getting the best results don’t stick loyally to one platform. Instead, they stay “tool-agnostic and goal-focused.”

In practice, that means matching each part of a project to the model best suited for it. You get better results because you’re using the right tool for each task.

For example, you might generate initial concepts in Midjourney, refine character designs in Nano Banana, create video clips in Veo 3, then composite everything in Premiere Pro. Each tool contributes what it does best.

This workflow requires learning multiple interfaces. But the quality improvement makes it worthwhile for serious work.

AI Loyalty Misses the Point

Some creators develop strong preferences for specific platforms. That brand loyalty might feel comforting, but it limits results.

Kyazze puts it bluntly: the real benefit of multimodel workflows is not forcing one tool to do everything. You’re leveraging actual strengths instead.

Plus, the AI landscape changes fast. A model’s personality can evolve with updates, and one that struggles with a task today might handle it well in the next version.

Staying flexible means you can adapt as the tools improve. You won’t waste time fighting against a tool’s limitations when a better option exists for that specific task.

This doesn’t mean you need subscriptions to every platform. But knowing what each model does best helps you choose wisely when a project demands specific results.

The Human Still Drives Everything

All this talk about AI personalities and choosing the right model might make it sound like the tools do the creative work. They don’t.

Clark emphasizes that human expression drives outcomes. The artist’s personality and creative point of view matter most. AI just expands what’s possible.

It’s not about replacing traditional creative processes. Instead, it’s about bringing imagination to the screen faster than before. The vision still comes from people.

Adapting to each model’s strengths and weaknesses is just smart workflow design. You wouldn’t use a wide-angle lens for a portrait or a fine detail brush for a wall mural. The same logic applies to AI models.

Understanding these “personalities” helps creators work more efficiently. They spend less time fighting tools and more time refining ideas.

The generative AI field will keep evolving. New models will emerge. Existing ones will improve. These personalities will shift and change.

But the core principle stays constant: pick the right tool for the job. Your creative vision deserves it.
