Two Chrome extensions with nearly a million users just got caught red-handed stealing AI chat history. Worse yet, one carries Google’s “Featured” badge.

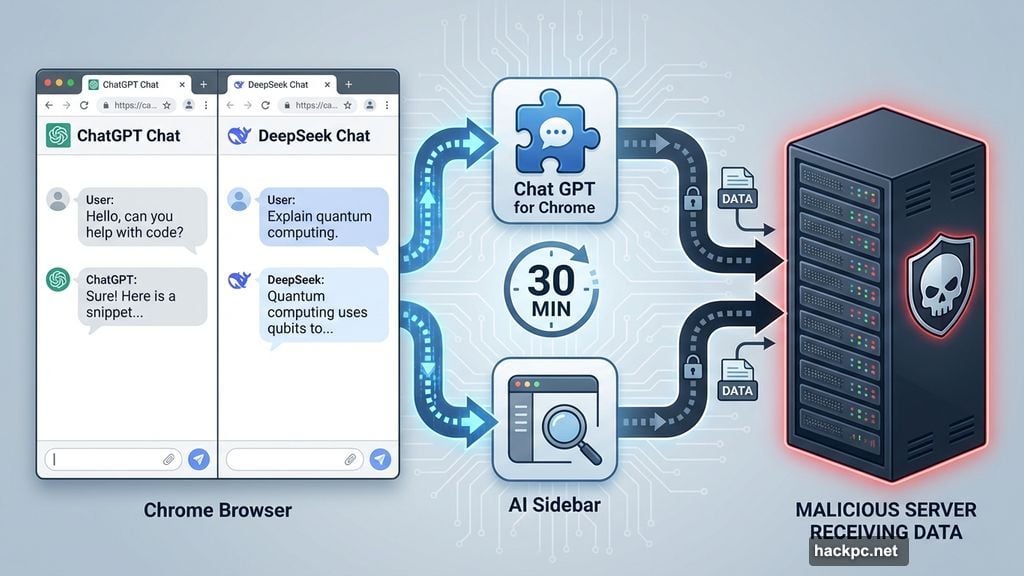

Security researchers at OX Security found something disturbing. Extensions that looked legitimate were quietly siphoning off ChatGPT and DeepSeek conversations every 30 minutes. Plus, they grabbed your browsing activity while they were at it.

Here’s the kicker. Google still hasn’t taken them down. The extensions remain live in the Chrome Web Store despite researchers alerting Google days ago.

Which Extensions Are Stealing Your Data

Two specific extensions are behind this mess:

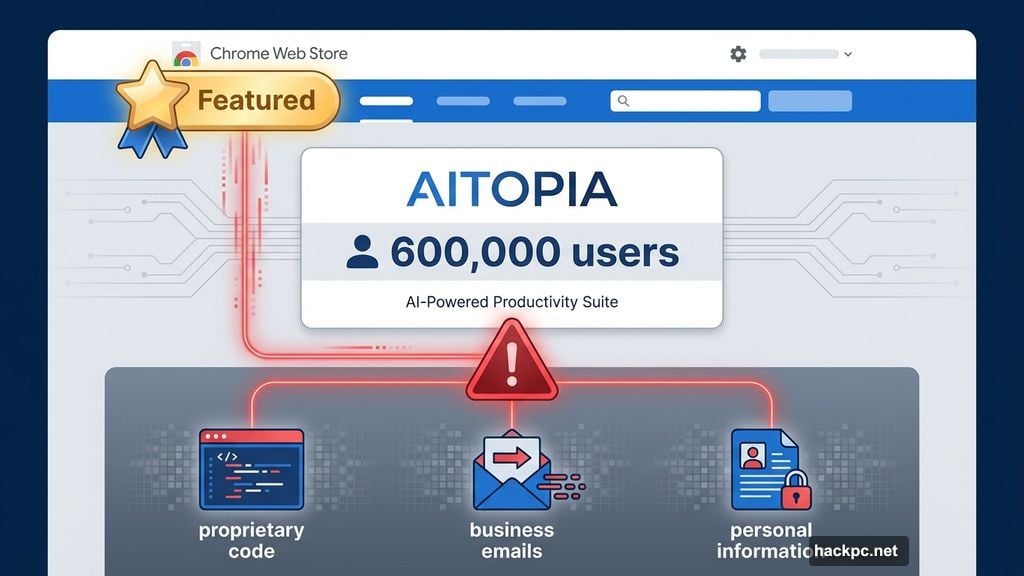

“Chat GPT for Chrome with GPT-5, Claude Sonnet & DeepSeek AI” brought in over 600,000 users. It even sports Google’s coveted “Featured” badge. That badge is supposed to signal trustworthiness. Clearly, it failed here.

“AI Sidebar with Deepseek, ChatGPT, Claude, and more” attracted another 300,000 users. Same operation, slightly different branding.

Both extensions claimed to be from a company called AITOPIA. They promised a handy sidebar for chatting with large language models on any website. The tools actually worked as advertised. But that legitimate functionality masked their true purpose.

How the Spyware Actually Worked

The extensions operated on a simple schedule. Every 30 minutes, they exfiltrated your chat history with AI services. They grabbed conversations with ChatGPT and DeepSeek specifically. Then they bundled in your browsing activity for good measure.

Think about what you’ve asked AI assistants recently. Maybe you debugged proprietary code. Perhaps you drafted sensitive business emails. You might have shared personal information while troubleshooting problems.

All of that data left your browser. It traveled to servers controlled by whoever built these extensions. And the extensions ran quietly in the background the entire time.

Why This Matters More Than You Think

OX researchers weren’t mincing words about the risk. They pointed out that AI conversations often contain incredibly sensitive material. Proprietary code, personal details, confidential business information – it all goes into those chat boxes.

“This data can be weaponized for corporate espionage, identity theft, targeted phishing campaigns, or sold on underground forums,” the researchers explained.

Imagine you work at a company with valuable intellectual property. An employee installs one of these extensions. Suddenly, every AI-assisted conversation about your products, strategies, or customer data gets copied to an unknown third party.

The damage compounds quickly. Corporate secrets leak. Personal information gets harvested. Phishing attackers gain detailed intelligence about potential targets. And it all stems from what looked like a helpful productivity tool.

Google’s Featured Badge Failed Spectacularly

The “Featured” badge deserves special attention here. Google awards this badge to extensions it considers high-quality and trustworthy. Users see that badge and assume Google vetted the extension thoroughly.

Not this time. An extension actively stealing user data carried Google’s stamp of approval. That raises serious questions about Google’s review process.

Moreover, researchers contacted Google about their findings. Days passed. The extensions remain available. Users can still install them right now. Google’s response team continues reviewing the issue, but that review should have taken hours, not days.

This Keeps Happening

Cybersecurity researchers from Koi previously found another Chrome extension doing the same thing. That one had six million users, a 4.7-star rating, and yes, a Featured badge from Google. It too was harvesting AI chat conversations.

The pattern is clear. Malicious developers know they can slip spyware past Google’s reviews. They build functional extensions that do what they promise. Then they hide data collection in the background. Users install them based on ratings and badges. Google either doesn’t catch the malicious code or takes forever to respond when researchers report it.

So the cycle repeats. Extensions get installed. Data gets stolen. Eventually someone notices. Google slowly removes them. But by then, hundreds of thousands or millions of users have already installed the malware.

What You Need to Do Right Now

First, remove both extensions immediately if you installed them. Check your Chrome extensions list and delete anything matching those names.
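
If you manage several machines or profiles, the check above can be scripted. The following is a minimal sketch, not an official tool: it assumes Chrome's usual on-disk layout of `Extensions/<extension id>/<version>/manifest.json`, and the function names and the name fragments in `SUSPECT_NAMES` are illustrative choices based on the two listings named in this article. A name match is only a hint, so confirm in `chrome://extensions` before removing anything.

```python
import json
from pathlib import Path

# Lowercase name fragments taken from the two listings named in this article.
SUSPECT_NAMES = (
    "chat gpt for chrome with gpt-5",
    "ai sidebar with deepseek",
)


def resolve_name(version_dir: Path, manifest: dict) -> str:
    """Return the display name, following a __MSG_key__ placeholder if present."""
    name = str(manifest.get("name", ""))
    if not (name.startswith("__MSG_") and name.endswith("__")):
        return name
    key = name[len("__MSG_"):-2].lower()
    locale = manifest.get("default_locale", "en")
    messages_file = version_dir / "_locales" / locale / "messages.json"
    try:
        messages = json.loads(messages_file.read_text(encoding="utf-8-sig"))
    except (OSError, ValueError):
        return name  # localization file missing or unreadable: keep placeholder
    if not isinstance(messages, dict):
        return name
    # Compare keys in lowercase so a case mismatch does not hide the name.
    for msg_key, entry in messages.items():
        if msg_key.lower() == key and isinstance(entry, dict):
            return str(entry.get("message", name))
    return name


def find_suspect_extensions(extensions_dir: Path, needles=SUSPECT_NAMES):
    """Return (extension_id, display_name) pairs whose name contains a needle."""
    hits = []
    for manifest_path in sorted(extensions_dir.glob("*/*/manifest.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8-sig"))
        except (OSError, ValueError):
            continue  # skip unreadable or malformed manifests
        if not isinstance(manifest, dict):
            continue
        name = resolve_name(manifest_path.parent, manifest)
        if any(needle in name.lower() for needle in needles):
            hits.append((manifest_path.parent.parent.name, name))
    return hits
```

To use it, pass the `Extensions` folder of a Chrome profile to `find_suspect_extensions`. Typical locations (assumptions that vary by OS, Chrome channel, and profile name) are `~/.config/google-chrome/Default/Extensions` on Linux, `~/Library/Application Support/Google/Chrome/Default/Extensions` on macOS, and `%LOCALAPPDATA%\Google\Chrome\User Data\Default\Extensions` on Windows.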

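Second, assume your conversations were captured. Review what sensitive information you shared in those chats over the past months, and replace any passwords or other credentials you pasted into them. Changing your ChatGPT and DeepSeek passwords is a sensible precaution as well.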

Third, notify your IT security team if you installed these at work. Your employer needs to assess what data might have leaked and how to respond.

Fourth, stop trusting Chrome extension badges blindly. The Featured badge means nothing if Google can’t catch obvious spyware. High ratings don’t prove safety either – both these extensions had good ratings.

Instead, research extensions before installing them. Look for independent security reviews. Check how long they’ve existed and who develops them. Be skeptical of extensions that seem too good to be true.
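
One more signal worth checking, beyond reviews and developer history, is how much access an extension asks for. The sketch below is a rough heuristic under stated assumptions: the function name `audit_manifest` and the two lists of "broad" patterns and "sensitive" permissions are illustrative picks, not an official classification. It reads an already-parsed `manifest.json` and reports requests for access to every site or to browsing-related APIs. Plenty of legitimate extensions request these too, so treat a finding as a prompt for closer scrutiny rather than proof of malice.

```python
# Host patterns that grant access to (nearly) every website.
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}

# API permissions that expose browsing activity; an illustrative, partial list.
SENSITIVE_PERMISSIONS = {"tabs", "history", "webRequest", "cookies"}


def audit_manifest(manifest: dict) -> list[str]:
    """Return sorted, de-duplicated findings for one parsed manifest.json."""
    declared = list(manifest.get("permissions", []))
    # Manifest V3 lists host patterns separately; V2 mixes them into "permissions".
    declared += manifest.get("host_permissions", [])
    # Content scripts run on every page matching their "matches" patterns.
    for script in manifest.get("content_scripts", []):
        declared += script.get("matches", [])

    findings = set()
    for item in declared:
        if not isinstance(item, str):
            continue
        if item in BROAD_HOST_PATTERNS:
            findings.add(f"broad host access: {item}")
        elif item in SENSITIVE_PERMISSIONS:
            findings.add(f"sensitive permission: {item}")
    return sorted(findings)
```

An empty result does not make an extension safe; it only means none of these particular patterns appear in its manifest.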

Google Needs to Fix This Process

The real issue isn’t these two extensions. It’s that malicious extensions keep getting through Google’s review process and earning Featured badges.

Google claims to review extensions before allowing them in the Chrome Web Store. That review should catch extensions exfiltrating user data every 30 minutes. It’s not subtle behavior. The code is there to be found.

Either Google isn’t reviewing extensions thoroughly enough, or its review process can’t detect common malware patterns. Neither answer inspires confidence.

Plus, when researchers report problems, Google needs to act faster. Leaving nearly a million users exposed to active data theft during days of deliberation isn’t acceptable. Google should have an expedited process for verified security reports about Featured extensions.

The Chrome Web Store needs fundamental changes. Stricter initial reviews. Ongoing monitoring of extension behavior. Faster response to security reports. And definitely more scrutiny before awarding Featured badges.

Until Google implements those changes, users bear the responsibility for protecting themselves. That’s a terrible outcome, but it’s the reality we face today.

Remove those extensions now. Your AI chat history isn’t as private as you thought.
