Meta knows scam ads flood its platforms. Internal documents prove it. Yet the company lets them run anyway because they’re wildly profitable.

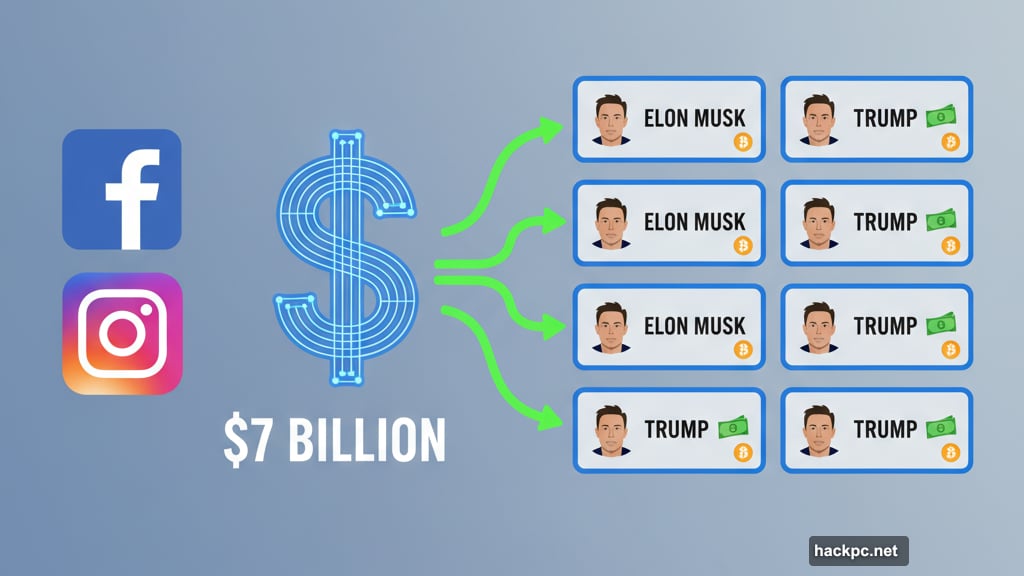

Reuters exposed something stunning this week. Meta’s platforms show users an estimated 15 billion scam ads every day. These aren’t subtle schemes. They’re obvious frauds featuring deepfake Elon Musks hawking crypto and fake Trump stimulus checks.

The kicker? Meta’s own trust and safety team estimates that one-third of US scams involve its platforms. So the company clearly understands the scope of this crisis.

The $7 Billion Reason Meta Won’t Stop Scams

Money talks. Meta reportedly earns $7 billion yearly from ads with obvious scam hallmarks. Those are ads that falsely claim to represent public figures or trusted brands.

Total revenue from scam ads and banned goods? Around $16 billion annually. That’s 10 percent of Meta’s entire yearly revenue.

Even massive fines barely dent profits this enormous. So Meta keeps the scam ads flowing while victims lose everything.

The Victims Can’t Afford These Losses

Americans reported $16 billion in scam losses to the FBI last year. Globally, scammers stole over $1 trillion in 2024, according to the Global Anti-Scam Alliance.

But here’s what those numbers don’t capture. Scam victims are often elderly people on fixed incomes. Young people searching for jobs. Immigrants navigating new systems. Anyone going through difficult transitions.

These folks can’t afford to lose a few hundred dollars. Yet that’s exactly what happens when they trust an ad on Facebook or Instagram.

Plus, many victims never report scams. The shame of falling for fraud keeps losses hidden. So real numbers are likely far higher than official reports suggest.

Meta Can Identify Scams But Chooses Not To

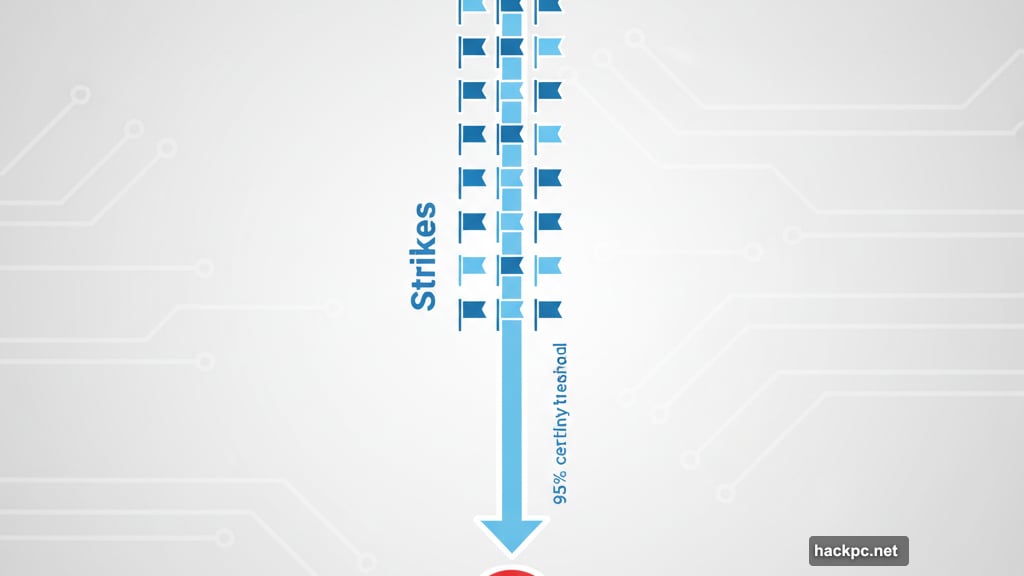

Meta’s systems can spot fraudulent ads easily. However, according to Reuters, the company requires 95 percent certainty before removing an ad.

That’s an absurdly high bar. Meanwhile, The Wall Street Journal reports Meta grants scam advertisers between eight and thirty-two “strikes” before banning accounts.

Translation? Even after Meta flags and removes scam ads, those same advertisers can run other ads for months. They can even post slightly modified versions of the same scam.

This isn’t incompetence. It’s a business decision that prioritizes revenue over user safety.

The Algorithm Makes Everything Worse

Click one scam ad and Meta’s algorithm shows you more. That’s how recommendation systems work across virtually all social platforms.

So the people most vulnerable to fraud get targeted with additional scams. Someone interested enough to click becomes a repeat target for fraudsters.

The online payment platform Zelle told The Wall Street Journal that half of reported scams involve Meta platforms. That’s a damning statistic for a company that claims to care about user safety.

Meta spokesperson Andy Stone disputed Reuters’ findings. He told The Verge the leaked documents present a “selective view” that distorts Meta’s approach to fraud.

Stone emphasized that many flagged ads weren’t actually violations. He also claimed user reports of scam ads declined over 50 percent in the last 15 months.

But if Meta is catching more scams, why does it still earn billions from fraudulent advertising?

Scam Compounds Fuel a Global Industry

Southeast Asian scam operations grow more sophisticated daily. These aren’t lone fraudsters working from home. They’re transnational criminal organizations with heavy ties to online gambling.

Worse, these compounds run on human trafficking. Young people get lured by promises of white-collar jobs. Instead, they face conditions similar to slavery.

Threatened by violence, trafficking victims spend long days running romance and investment scams. They’re forced to target vulnerable people worldwide.

These criminal enterprises rapidly adopt automation and AI. Deepfakes of famous entrepreneurs promote fake investment opportunities. Synthetic videos of politicians tout nonexistent stimulus checks.

As technology improves, this problem will explode. Meta’s weak response essentially enables these criminal networks to thrive.

Three Changes Meta Must Make Immediately

First, lower the barrier for removing scam ads. Once an advertiser posts one scam, remove all of that advertiser’s ads automatically. No more eight-to-thirty-two-strikes nonsense.

Second, expand detection tactics. The watchdog group Tech Transparency Project easily identified scam ads using simple criteria. They checked for fake government benefits, FTC-identified scam tactics, and Better Business Bureau complaints.

If a tiny nonprofit outperforms Meta’s detection systems, something’s broken. Meta has the resources to implement far more sophisticated fraud detection.

Third, require advertiser verification. Only accounts using real, verified identities should purchase ads. This would cut down on deepfake ads and create paper trails for law enforcement.

These changes would cost Meta money. That’s exactly why it will take regulatory pressure to get the company to implement them.

Governments Must Treat Meta as Complicit

Meta isn’t just a passive platform where scams happen to occur. The company actively profits from fraud while using deliberately weak detection standards.

That makes the company a complicit actor in the scam ecosystem. Governments should treat it accordingly.

The FTC already has authority to regulate “unfair or deceptive acts or practices.” It should require platforms to verify advertiser identities and review ads before they run.

Independent, third-party audits of online advertising systems should be mandatory. Platforms like Meta have proven they won’t self-regulate effectively.

Moreover, raise the fines. Current penalties are pocket change for a company earning billions from scam ads. Fines need to actually hurt Meta’s bottom line.

Consider creating a scam victims compensation fund financed by these fines. That would provide some justice for people who lost everything to fraudsters.

State Action Can Force Change

If the federal government won’t act, state legislatures should step up. They can pass laws mandating advertiser verification and third-party audits.

State attorneys general have power here too. They can launch consumer protection lawsuits under existing state consumer fraud and deceptive trade practices laws.

This isn’t partisan. Scams affect everyone regardless of political affiliation. Elderly conservatives lose money to fake Trump checks. Young progressives fall for employment scams.

Everyone has a stake in stopping this predatory industry. Politicians looking for bipartisan wins should jump on this issue immediately.

Meta’s Track Record Speaks Volumes

Remember Myanmar? In 2018, Facebook admitted it hadn’t done enough to keep its platform from being used to incite violence during the genocide there. Myanmar is now a failed state and home to the very scam compounds whose ads make Meta billions.

Meta repeatedly shows that user wellbeing matters less than quarterly earnings. The company won’t change voluntarily.

So regulators and lawmakers must force change. If Meta’s role in genocide didn’t move officials to act, maybe billions sucked from vulnerable users’ pockets will.

Financial literacy campaigns won’t solve this. Telling victims to “be smarter” adds to their shame without addressing the root problem.

The solution requires holding Meta accountable for knowingly profiting from fraud. Period.
