Microsoft just dropped data on 37.5 million Copilot conversations. The results? People aren’t using AI for what Microsoft expected.

Sure, productivity tasks still happen. But the real story is that users turn to Copilot for deeply personal stuff. Health advice at 2 AM. Relationship guidance before Valentine’s Day. Late-night philosophical debates about existence.

This isn’t about writing emails anymore. It’s about AI becoming a digital confidant.

Nighttime Philosophy, Daytime Travel Planning

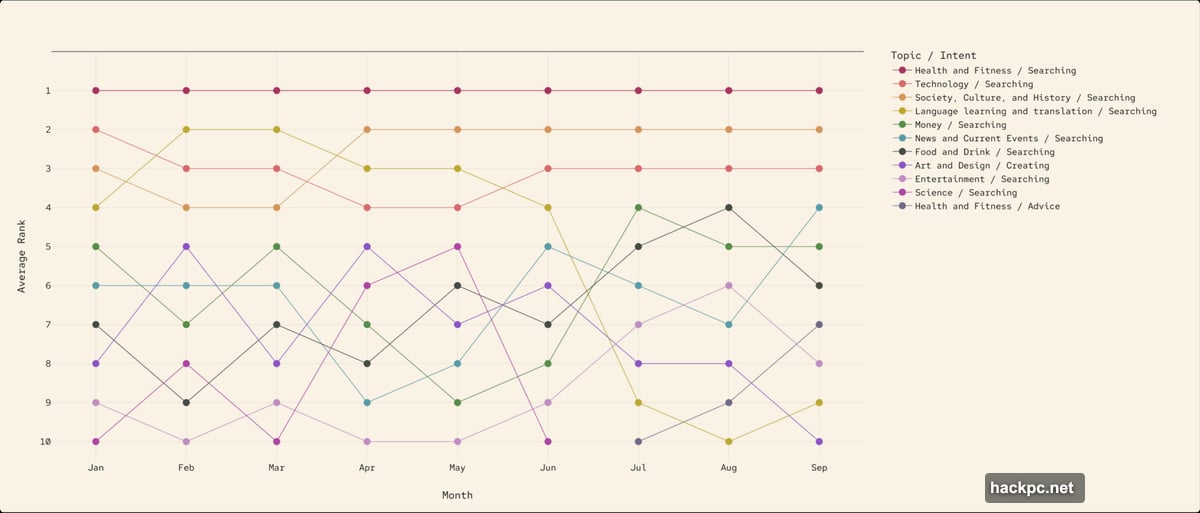

The data reveals clear patterns in how people use Copilot throughout the day.

During daylight hours, users focus on practical tasks. Travel planning dominates daytime conversations. People research destinations, compare flight prices, and map out itineraries. Meanwhile, work-related coding peaks during weekdays.

But when night falls, conversations shift dramatically. Philosophy and religion discussions spike after dark. Users ask existential questions. They explore spiritual topics. They seek meaning in ways they might not discuss with friends or family.

Plus, this pattern holds across time zones. It’s not just insomnia in one region. People worldwide use AI for deeper thinking when the world quiets down.

Mobile Health Queries Explode Around the Clock

Health became Copilot’s breakout category on mobile devices. Users don’t wait for doctor’s appointments anymore.

Instead, they ask Copilot about exercise routines at any hour. Questions about wellness practices flood in constantly. People want personalized fitness advice right when motivation strikes. Moreover, they seek guidance on building sustainable health habits.

The 24/7 nature of these conversations stands out. Traditional health resources close at night. But AI never sleeps. So users text Copilot about workout plans at midnight just as easily as at noon.

However, Microsoft hasn’t specified how accurate these health responses are. That raises questions about medical misinformation risks. Yet the data shows people trust AI enough to ask sensitive health questions regularly.

Valentine’s Day Triggered Relationship Counseling Surge

February data tells a fascinating story about human behavior and AI assistance.

In the weeks before Valentine’s Day, relationship conversations exploded. Users asked Copilot for dating advice. They sought help navigating conflicts. They discussed personal development in the context of partnerships.

This wasn’t just “where should I take my date” surface-level stuff. The report indicates people used Copilot for genuine emotional guidance. They explored difficult relationship dynamics. They processed feelings about partnerships and personal growth.

Furthermore, personal development discussions stayed elevated beyond February. Once people discovered AI as a sounding board, they kept returning. That suggests users found real value in these conversations beyond holiday pressure.

Weekends Split: Coding Dies, Creative Projects Rise

Work-life patterns showed up clearly in August data. The divide between weekday and weekend usage reveals how people compartmentalize AI assistance.

During weekdays, coding conversations dominated. Developers asked Copilot for debugging help. They requested code reviews. They explored technical solutions to programming challenges. Basically, AI became the always-available senior developer.

But weekends flipped the script entirely. Coding questions dropped sharply. Instead, creative and personal projects surged. Users explored hobbies. They planned fun activities. They asked about entertainment and leisure topics.

So even knowledge workers maintain boundaries. They use AI as a work tool during the week. Then they reclaim it for personal enrichment on weekends. That’s healthier than the “always on” culture many feared AI would create.

From Information Tool to Life Counselor

Microsoft identified the report’s biggest trend: people increasingly seek guidance over facts.

Traditional search engines answer “what” and “how” questions. Users wanted information retrieval. They got links and summaries. Transaction complete.

Copilot conversations look different now. Users ask “should I” and “what if” questions. They explore decisions. They process emotions through dialogue. They want perspective, not just data.

This shift changes everything about AI assistant design. Fact-checking matters less when users seek emotional support. Empathy becomes more valuable than accuracy in many conversations. Plus, the AI needs to recognize when professional help beats chatbot advice.

Yet Microsoft hasn’t addressed the ethical complexity here. When does AI counseling help versus harm? What happens when users substitute Copilot for real therapy? The report celebrates engagement without examining responsibility.

What Microsoft Plans to Do With This Data

The company promises to use these insights for Copilot improvements. But specifics remain vague.

Better health information seems obvious. If millions ask wellness questions, accuracy becomes critical. Microsoft should partner with medical experts to ensure safe guidance. Otherwise, they risk spreading health misinformation at scale.

Emotional intelligence upgrades also make sense. Since users want counseling, not just facts, Copilot needs better conversational nuance. It should recognize emotional context. It should know when to suggest professional help instead of offering AI platitudes.

Finally, time-aware responses could improve user experience. If people discuss philosophy at night and travel during the day, context-appropriate suggestions would feel more natural. The AI could adapt its tone and suggestions based on when users reach out.

The Privacy Question Nobody Answered

Microsoft analyzed 37.5 million “de-identified” conversations. That sounds reassuring until you think about it.

What does “de-identified” actually mean? Did Microsoft remove names but keep conversation content? Can they reverse the anonymization? How long do they store this deeply personal data?

Users shared health concerns. They discussed relationship problems. They explored religious doubts. These conversations contain incredibly sensitive information. Yet Microsoft’s report lacks detail about data handling.

Moreover, this data powers product improvements. So Microsoft has a financial incentive to collect and analyze as much as possible. That creates tension between user privacy and corporate interests. The report celebrates insights without acknowledging this fundamental conflict.

AI as Companion Raises Uncomfortable Questions

The data reveals something Microsoft might not want to admit: people are lonely.

Turning to AI for health advice, relationship guidance, and philosophical discussions suggests humans lack these conversations elsewhere. Maybe they fear judgment from friends. Maybe they lack access to therapists. Maybe late-night thoughts feel too vulnerable to share.

Whatever the reason, AI fills a gap in human connection. That’s simultaneously helpful and troubling. Helpful because people get support they might not find elsewhere. Troubling because we’re outsourcing intimate conversations to algorithms.

Furthermore, AI companionship could reduce motivation to build human relationships. Why navigate messy human emotions when AI never judges, never tires, never demands reciprocity? The convenience might accelerate social isolation rather than alleviate it.

Microsoft’s report treats increased engagement as pure success. But maybe we should question whether AI relationships indicate societal health or dysfunction.

The Reality of AI in Daily Life

This data snapshot shows AI embedding itself into human routines. Not as science fiction predicted, but in quieter, more intimate ways.

People don’t use Copilot to control smart homes or book entire vacations autonomously. Instead, they use it as a thinking partner. They process decisions. They seek validation. They explore ideas before committing.

That’s probably healthier than either utopian or dystopian AI predictions. It’s not revolutionary. It’s not terrifying. It’s just another tool humans bend toward their needs—which currently include connection, guidance, and late-night philosophical pondering.

Microsoft’s insights matter because they show what people actually want from AI. Not productivity hacks. Not automation of everything. Just someone—or something—that listens when they need to talk.
