French AI lab Mistral just launched two coding models and a command-line tool. The timing isn’t subtle.

Vibe-coding exploded this year. Cursor grabbed developers’ attention by letting them describe what they want in plain English. Supabase and other tools followed suit. Now Mistral wants its share of that market.

The company released Devstral 2 and Devstral Small today. It also shipped Mistral Vibe, a CLI tool that turns natural language into code actions. All three products aim at professional developers tired of context-switching between their editor and AI chat windows.

What Makes Devstral 2 Different

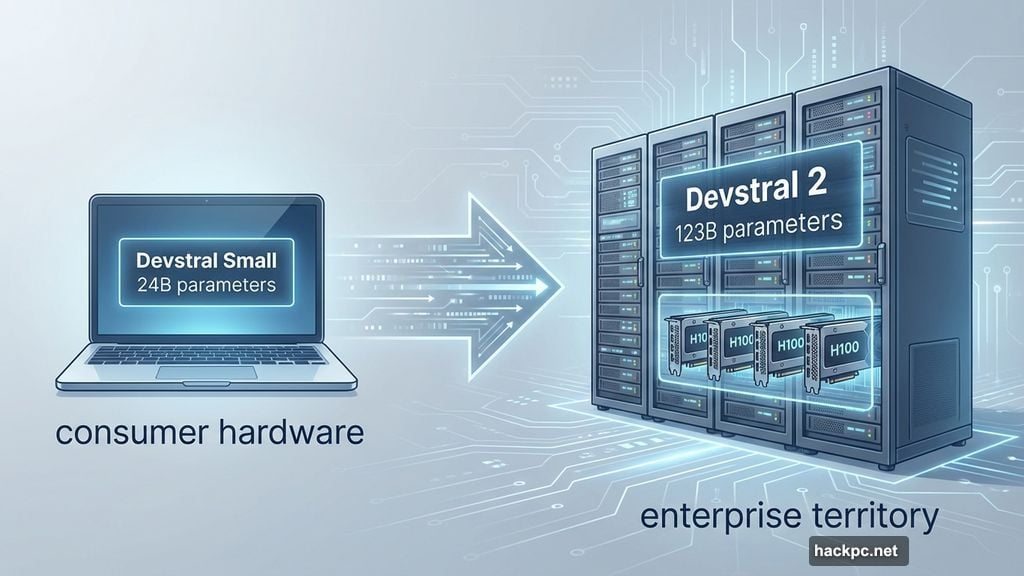

Size matters here. Devstral 2 weighs in at 123 billion parameters. That’s massive compared to most coding models.

The trade-off? You’ll need serious hardware. Four H100 GPUs minimum for deployment. That puts it firmly in enterprise territory, not hobbyist land.
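
A back-of-the-envelope check shows why a four-GPU figure is plausible. The sketch below assumes 16-bit weights (2 bytes per parameter) and 80 GB H100 cards. Both are assumptions rather than figures from Mistral, and the estimate covers weights only, ignoring KV cache and activation memory.

```python
import math

# Assumption: the 80 GB H100 variant. Not stated in the article.
H100_MEMORY_GB = 80


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: float,
                         gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    params = 123e9  # Devstral 2 parameter count from the article
    print(weight_memory_gb(params, 2))      # 16-bit weights (assumed precision)
    print(min_gpus_for_weights(params, 2))  # GPUs needed for weights only
```

Under those assumptions the weights alone take about 246 GB, which does not fit on three 80 GB cards but does fit on four. Real deployments also need headroom for the KV cache, so treat this as a lower bound.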

But Mistral learned from Le Chat, their conversational AI assistant. Devstral 2 remembers context across sessions. It scans your file structure, checks Git status, and uses that information to guide suggestions. So it’s not just completing code snippets. It understands your project’s architecture.

That context awareness distinguishes Mistral’s approach from simpler autocomplete tools. Suggestions draw on the existing codebase, so patterns your team has already written shape new recommendations. The effect is that output tends to match your team’s coding style, because that context is supplied with each request rather than through retraining the model.
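
To make "scans your file structure, checks Git status" concrete, here is a minimal sketch of how a tool could gather that kind of context before prompting a model. It is not Mistral's implementation. The function names, the skipped directories, and the 200-file cap are all hypothetical choices for illustration.

```python
import os
import subprocess
from typing import List, Optional

# Hypothetical list of directories that add noise rather than useful context.
SKIP_DIRS = {".git", "node_modules", "__pycache__", ".venv"}


def list_files(root: str, max_files: int = 200) -> List[str]:
    """Return up to max_files relative file paths under root."""
    found: List[str] = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune in place so os.walk does not descend into skipped directories.
        dirnames[:] = sorted(d for d in dirnames if d not in SKIP_DIRS)
        for name in sorted(filenames):
            found.append(os.path.relpath(os.path.join(dirpath, name), root))
            if len(found) >= max_files:
                return found
    return found


def git_status(root: str) -> Optional[str]:
    """Return `git status --porcelain` output, or None if it is unavailable."""
    try:
        result = subprocess.run(
            ["git", "status", "--porcelain"],
            cwd=root, capture_output=True, text=True, check=False,
        )
    except FileNotFoundError:  # git is not installed
        return None
    return result.stdout if result.returncode == 0 else None


def build_context(root: str) -> str:
    """Combine the file listing and Git status into one text block."""
    status = git_status(root)
    if status is None:
        status_text = "(not a git repository, or git unavailable)"
    elif status == "":
        status_text = "(clean working tree)"
    else:
        status_text = status.rstrip()
    return "\n".join(["Project files:", *list_files(root), "", "Git status:", status_text])


if __name__ == "__main__":
    print(build_context("."))
```

The resulting text block would be prepended to a prompt. Capping the file list matters because everything sent as context counts against the model's input token budget.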

Devstral Small Runs Locally

Not everyone operates data centers. Mistral gets that.

Devstral Small ships at 24 billion parameters. That’s light enough to run on consumer hardware. Developers can deploy it locally without cloud infrastructure costs.

The two models also ship under different licenses. Devstral 2 uses a modified MIT license, while Devstral Small uses Apache 2.0. Both choices signal Mistral’s commitment to open development.

Right now, Devstral 2 is free through Mistral’s API. Once that trial period ends, pricing kicks in at $0.40 per million input tokens and $2.00 per million output tokens. Devstral Small costs less: $0.10 input, $0.30 output per million tokens.
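
To see what those rates mean for a real workload, here is a small cost estimate using the per-million-token prices quoted above. The dictionary keys are labels invented for this sketch, not official API model identifiers, and the token counts are a made-up example.

```python
# Prices in USD per million tokens, as quoted in the article.
# Keys are hypothetical labels, not official API model names.
PRICES_USD_PER_MILLION = {
    "devstral-2": {"input": 0.40, "output": 2.00},
    "devstral-small": {"input": 0.10, "output": 0.30},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD for the given token usage."""
    price = PRICES_USD_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Example workload (an assumption): 2M input tokens, 500k output tokens.
    for model in PRICES_USD_PER_MILLION:
        print(f"{model}: ${estimate_cost(model, 2_000_000, 500_000):.2f}")
```

For that example workload, Devstral 2 comes to $1.80 and Devstral Small to $0.35. Output tokens dominate the Devstral 2 bill because they cost five times as much as input tokens.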

Vibe CLI Brings AI Into Your Terminal

Mistral Vibe represents their bigger bet. It’s a command-line interface that translates natural language into code operations.

Want to search your codebase for specific patterns? Describe what you’re looking for. Need to manipulate files based on complex criteria? Explain your goal. Vibe handles the actual commands.

The tool remembers your history. Each session builds on previous interactions. That persistent memory means you’re not constantly re-explaining your project structure or goals.

Mistral partnered with Kilo Code and Cline to integrate Devstral 2. Plus, Vibe CLI works inside Zed as an IDE extension. So developers can stay in their familiar environment while accessing AI assistance.

European AI Finally Gets Competitive

Mistral raised a massive Series C in September. Dutch semiconductor giant ASML led the round with €1.3 billion ($1.5 billion). That pushed Mistral’s valuation to €11.7 billion (roughly $13.8 billion).

Those numbers matter. American AI labs like Anthropic and OpenAI dominated coding assistants until now. Europe needed a homegrown competitor that could actually challenge Silicon Valley’s lead.

This launch follows Mistral’s recent Mistral 3 family release. The company is clearly accelerating product development. It isn’t trying to beat OpenAI at everything. Instead, it is picking specific battles, like coding tools, where context awareness provides real advantages.

But timing creates pressure. Cursor already captured mindshare among developers. GitHub Copilot remains dominant in enterprise settings. Mistral enters a crowded market where switching costs are low and developer loyalty shifts quickly.

Does Context Actually Win?

Here’s what bugs me. Every AI company now claims their context awareness changes everything. Mistral’s no different.

Yet context awareness only matters if it tangibly improves code quality or developer speed. So far, most “context-aware” tools still hallucinate imports, suggest deprecated patterns, or miss obvious bugs.

Mistral’s focus on production-grade workflows sounds promising. The hardware requirements suggest Devstral 2 is built for performance rather than reach. But demanding four H100 GPUs excludes most potential self-hosters right from launch, leaving them the API or Devstral Small.

The real test comes when developers actually use these tools daily. Can Devstral 2 understand complex codebases better than Cursor? Does Vibe CLI truly save time compared to GitHub Copilot? Those questions determine success, not parameter counts or funding rounds.

European tech needs wins. Mistral has the resources and talent to compete globally. Whether it can execute fast enough to matter is still uncertain.
