India just handed Instagram, X, Facebook, and YouTube an ultimatum. Label every piece of AI-generated content by February 20th. That’s nine days from now.

The problem? The technology to actually do this doesn’t work yet. Not even close.

What India’s New Rules Demand

India’s updated Information Technology Rules don’t mess around. Social platforms must now deploy technical measures to block illegal deepfakes before they’re posted. Any synthetic content that isn’t blocked must carry permanent metadata showing it’s AI-generated.

Plus, platforms face a brutal three-hour deadline to remove harmful content once it’s reported. That’s down from 36 hours. So companies need systems that can detect, verify, and remove deepfakes faster than most people respond to work emails.

The rules specifically target social media companies. They must make users disclose AI content. They must verify those disclosures. And they must label synthetic materials so prominently that anyone can spot them instantly.

Here’s the catch. Nobody’s figured out how to make this work reliably.

Why This Matters Beyond India

India isn’t just another market. It’s home to over 500 million social media users across platforms like YouTube, Instagram, Facebook, and Snapchat. That makes it one of tech’s most critical growth regions.

Any deepfake detection system that works in India could reshape content moderation globally. But if platforms can’t meet this deadline, it exposes just how unprepared we are for the synthetic content explosion.

The stakes are massive. India has 1 billion internet users, many of them young. If tech companies can’t protect them from deepfakes, what hope do other countries have?

Current Detection Tech Isn’t Ready

Meta, Google, Microsoft, and others already use C2PA content credentials. This system attaches invisible metadata to images, videos, and audio files, describing exactly how each piece was created or edited.

Sounds perfect, right? Except it’s failing spectacularly.

Facebook and Instagram do add labels to C2PA-flagged content. But those labels are tiny and easy to miss. Meanwhile, synthetic content without the proper metadata sails through completely undetected.

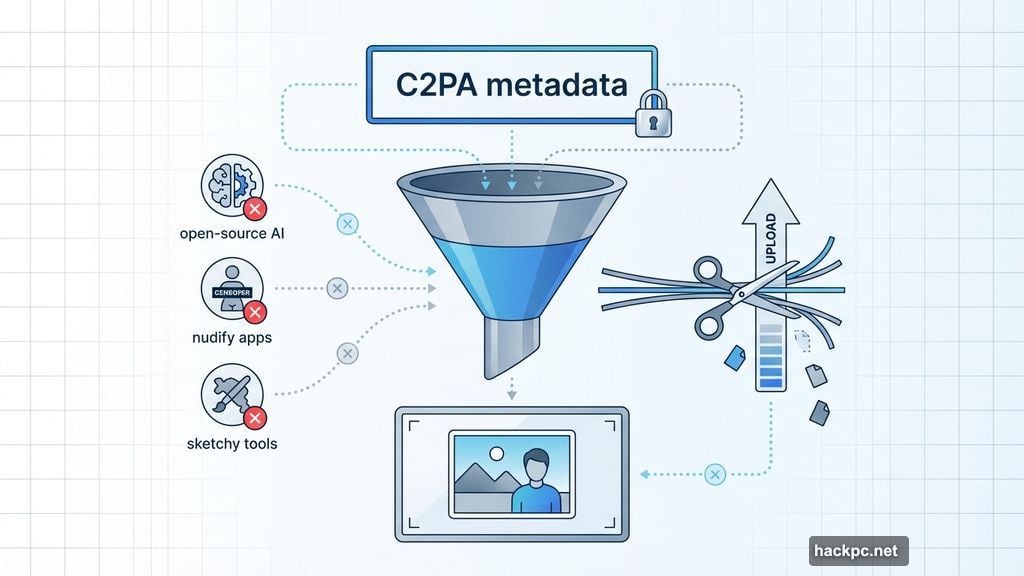

The system breaks down at multiple points. Open-source AI models don’t embed C2PA data. Neither do “nudify apps” and other sketchy tools that refuse voluntary standards. So platforms can’t label what they can’t detect.
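To see where the gap opens up, here’s a minimal Python sketch of a metadata-driven labeling decision. It’s a toy model, not any platform’s real pipeline. The `Upload` class, its fields, and both functions are hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Upload:
    # All three fields are hypothetical, for illustration only.
    credentials_say_ai: bool   # provenance metadata is present and flags AI use
    user_disclosed_ai: bool    # the uploader ticked an "AI-generated" box
    actually_synthetic: bool   # ground truth, which the platform cannot see


def should_label(upload: Upload) -> bool:
    """Label only when some signal reaches the platform."""
    return upload.credentials_say_ai or upload.user_disclosed_ai


def slips_through(upload: Upload) -> bool:
    """Synthetic content that ends up with no label at all."""
    return upload.actually_synthetic and not should_label(upload)


# An image from a tool that embeds credentials gets labeled.
tagged = Upload(credentials_say_ai=True, user_disclosed_ai=False, actually_synthetic=True)
# An image from a tool that embeds nothing, posted without disclosure, does not.
untagged = Upload(credentials_say_ai=False, user_disclosed_ai=False, actually_synthetic=True)

print(should_label(tagged), slips_through(tagged))      # True False
print(should_label(untagged), slips_through(untagged))  # False True
```

The second upload is the whole problem. With no metadata and no disclosure, the only thing left is a detector that guesses from the pixels.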

Plus, C2PA metadata is laughably easy to strip out. Some platforms accidentally remove it during normal file uploads. India’s rules demand that labels can’t be modified or hidden. But there’s no clear path to making that happen in nine days.

The Three-Hour Removal Problem

Beyond labeling, India introduced another challenge. Platforms must now remove illegal content within three hours of it being reported.

The Internet Freedom Foundation warns this creates “rapid fire censors.” There’s simply no time for meaningful human review at that speed. So companies will lean heavily on automated systems that historically over-remove legitimate content.

Think about the workflow. Someone reports a deepfake. The platform has three hours to verify it’s actually synthetic, confirm it violates rules, and delete it from potentially thousands of shares across the network. That’s assuming the detection works perfectly every time.
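Here’s a rough Python sketch of that clock. The two windows (36 hours and three hours) come from the rules described above. Everything else is an assumption: the function names are made up, the timestamp is arbitrary, and the stage durations are invented numbers, not measurements from any platform.

```python
from datetime import datetime, timedelta, timezone

OLD_WINDOW = timedelta(hours=36)  # previous removal deadline
NEW_WINDOW = timedelta(hours=3)   # new removal deadline


def removal_deadline(reported_at: datetime, window: timedelta = NEW_WINDOW) -> datetime:
    """Latest moment the reported content may still be online."""
    return reported_at + window


def fits_window(stage_minutes: dict[str, int], window: timedelta = NEW_WINDOW) -> bool:
    """True if all stages, run back to back, finish inside the window."""
    total = timedelta(minutes=sum(stage_minutes.values()))
    return total <= window


# Arbitrary example timestamp, not tied to any real report.
reported_at = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
print(removal_deadline(reported_at))              # 2025-01-01 12:00:00+00:00
print(removal_deadline(reported_at, OLD_WINDOW))  # 2025-01-02 21:00:00+00:00

# Invented stage durations in minutes, for illustration only.
stages = {
    "confirm_synthetic": 30,
    "human_policy_review": 120,
    "find_and_delete_copies": 45,
}
print(fits_window(stages))              # False: 195 minutes against a 180-minute window
print(fits_window(stages, OLD_WINDOW))  # True
```

Under the old window, a two-hour human review fits easily. Under the new one, it eats two thirds of the budget before anything gets deleted.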

It won’t.

Why C2PA Keeps Failing

C2PA backers have repeated the same promise for years. The system will work once enough people adopt it. But mass adoption hasn’t solved the fundamental problems.

Interoperability remains a nightmare. Different platforms handle metadata differently. Some strip it entirely. Others display it inconsistently. And plenty of AI tools never add it in the first place.

The “permanent metadata” that India demands isn’t actually permanent. Screenshots remove it. File conversions remove it. Even basic image editing can strip provenance data completely.
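Why is it so fragile? Provenance data rides alongside the pixels, not inside them. The Python sketch below shows the idea on a toy one-pixel PNG. Two loud assumptions: the `provenance` text chunk is a hypothetical stand-in rather than a real C2PA manifest, and `strip_ancillary` is an invented helper that mimics a re-encode keeping only the chunks needed to draw the image.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def build_png_with_note(note: str) -> bytes:
    """Build a 1x1 grayscale PNG carrying a text chunk (hypothetical provenance note)."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)  # 1x1, 8-bit grayscale
    pixels = zlib.compress(b"\x00\x00")                  # filter byte + one black pixel
    text = b"provenance\x00" + note.encode("latin-1")    # keyword, NUL, text
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr) + make_chunk(b"tEXt", text)
            + make_chunk(b"IDAT", pixels) + make_chunk(b"IEND", b""))


def iter_chunks(png: bytes):
    """Yield (type, data) for every chunk after the signature."""
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        yield png[pos + 4:pos + 8], png[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def strip_ancillary(png: bytes) -> bytes:
    """Keep only critical chunks (uppercase first letter), as a lossy re-encode might."""
    out = PNG_SIGNATURE
    for chunk_type, data in iter_chunks(png):
        if chunk_type[0] & 0x20 == 0:  # bit 5 clear means the chunk is critical
            out += make_chunk(chunk_type, data)
    return out


def chunk_types(png: bytes) -> list[str]:
    return [chunk_type.decode("ascii") for chunk_type, _ in iter_chunks(png)]


original = build_png_with_note("made with AI")
print(chunk_types(original))                   # ['IHDR', 'tEXt', 'IDAT', 'IEND']
print(chunk_types(strip_ancillary(original)))  # ['IHDR', 'IDAT', 'IEND']
```

The picture survives untouched. The note about where it came from is gone. A screenshot goes one step further, since it creates a brand-new file that never held the metadata in the first place.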

Moreover, C2PA only works for content created after the standard launched. The internet is drowning in synthetic media from before 2023 that will never carry those markers.

What Happens Next

X hasn’t implemented any AI labeling system at all. Neither have several smaller platforms popular in India. They now have nine days to build something from scratch.

Meta, Google, and X declined to comment on how they’ll meet the deadline. Adobe, one of the founding members of the C2PA coalition, also stayed silent. That’s not encouraging.

India’s rules do include a caveat. Platforms must implement provenance mechanisms to the “extent technically feasible.” That language suggests regulators understand current tech isn’t ready. But it doesn’t help companies figure out what “technically feasible” compliance actually looks like.

The optimistic view? This deadline forces innovation. Maybe platforms finally crack AI detection under pressure. The realistic view? Companies will deploy half-working systems that flag legitimate content while missing actual deepfakes.

The Bigger Picture Nobody Wants to Face

Here’s what really bothers me about this situation. Every major tech company has spent years promising they’d solve deepfakes voluntarily. They formed coalitions. They announced standards. They published research papers.

And now, when forced to actually deliver, it’s clear those promises were mostly theater.

The technology isn’t ready because companies didn’t invest enough in making it ready. They bet on voluntary compliance and industry cooperation. That gamble failed.

So now India is calling their bluff. Either prove your detection systems work, or admit they don’t. There’s no more hiding behind “we’re making progress” or “adoption is growing.”

February 20th arrives whether platforms are ready or not. And 500 million Indian users deserve better than systems that don’t work.
