Instagram head Adam Mosseri dropped a truth bomb to close out 2025. His message? The era of trusting what you see online is over.

Mosseri posted a 20-slide breakdown explaining how “infinite synthetic content” makes it impossible to tell real from fake anymore. Plus, he revealed that the old Instagram feed everyone remembers has been “dead for years.” This isn’t just another tech exec hand-wringing about AI. It’s the platform’s boss admitting we’re entering uncharted territory.

Photographs Stop Being Proof

For decades, photos served as evidence. You saw an image and assumed it captured something real. Not anymore.

Mosseri admits the obvious. “For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it’s going to take us years to adapt.”

So humanity needs to rewire its brain. We evolved to trust our eyes. Now we’re shifting to skepticism by default. Instead of believing what we see, we’ll question who shared it and why.

That’s uncomfortable. Our instincts push us to believe visual evidence. But AI image generation has gotten so good that realistic fakes take almost no skill to create. So every photo deserves doubt until proven authentic.

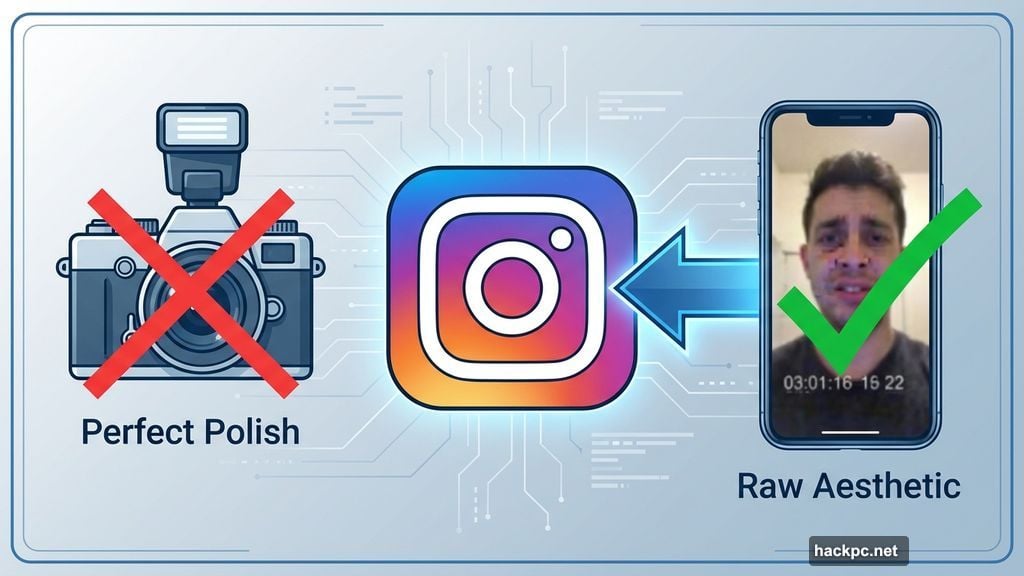

The Raw Aesthetic Becomes Defense

Here’s where things get interesting. Mosseri claims camera companies are betting wrong.

Traditional camera makers optimize for one goal: make everyone’s photos look professionally shot. Smooth skin, perfect lighting, flawless composition. They want your snapshots to match pro photographer quality from 2015.

But that’s backwards now. Polished imagery has become a telltale sign of AI generation. When everything can be perfect, perfection signals fakeness.

Smart creators already adapted. They’re leaning into blurry shots, unflattering angles, and raw aesthetics. Imperfection proves authenticity. That shoe-level selfie with bad lighting? It reads as real precisely because it’s terrible.

Instagram’s own usage patterns confirm this shift. The old feed of perfectly curated square photos died years ago. Now people share in DMs: shaky videos, candid shots, unfiltered moments. Rawness replaced polish.

AI Will Copy Imperfection Too

Don’t get comfortable with the “bad photo equals real photo” rule. Mosseri warns that AI will soon replicate imperfection convincingly.

Once that happens, aesthetics stop mattering. You won’t distinguish real from fake by examining image quality. So the focus shifts entirely to the source. Who posted this? Why should I trust them?

Platforms will try labeling AI content. But Mosseri admits they’ll “get worse at it over time as AI gets better.” Labels and watermarks won’t scale against improving generation tech.

The real solution? Cryptographic signing at capture. Camera manufacturers need to digitally sign images the moment the shutter clicks. That creates a tamper-evident chain of custody: anyone can later check that an image came from a real camera, not a text prompt, and that its bytes haven’t changed since.
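To make the idea of signing at capture concrete, here is a minimal sketch in Python. It is an illustration, not how any camera or Instagram actually does it. It swaps in an HMAC (a shared-secret tag) for the public-key signature a real provenance scheme would use, because that keeps the example inside the standard library. The key `DEVICE_KEY` and the functions `sign_capture` and `verify_capture` are hypothetical names invented for this example.

```python
import hashlib
import hmac

# Hypothetical device secret, for illustration only. A real camera would
# hold an asymmetric private key in secure hardware, not a shared secret.
DEVICE_KEY = b"example-device-key"


def sign_capture(image_bytes: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a hex tag tied to these exact image bytes and this key."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_capture(image_bytes: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_capture(image_bytes, key)
    return hmac.compare_digest(expected, tag)


# Sign at "capture", then check both the original and an altered copy.
original = b"raw sensor data"
tag = sign_capture(original)

untouched_ok = verify_capture(original, tag)        # bytes unchanged
edited_ok = verify_capture(original + b"!", tag)    # a single byte added
```

The point of the sketch is the asymmetry it shows: the untouched bytes verify, while any edit, however small, fails the check. With a real public-key signature, anyone could run the verification step without ever holding the camera’s secret.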

Instagram Must Evolve Fast

Mosseri outlined Instagram’s response strategy. The platform needs to build better creative tools while simultaneously detecting AI slop. They’ll label generated content and verify authentic captures. Plus, they’re surfacing more context about accounts so users can evaluate credibility.

However, he glosses over Meta’s aggressive push into AI generation tools. Mosseri mentions “a lot of amazing AI content” without citing examples. Then he criticizes “AI slop” while his employer actively promotes AI content creation across its platforms.

That tension matters. Meta wants engagement regardless of source. If AI-generated posts drive clicks, the algorithm will promote them. So Instagram faces competing pressures: detect fake content while simultaneously profiting from it.

Who You Trust Beats What You See

The shift from “what” to “who” fundamentally changes content consumption. Instead of evaluating individual posts, you’ll judge entire accounts.

Does this creator have a track record? Are they transparent about their process? Do they consistently deliver? Those signals matter more than perfect composition or technical quality.

Mosseri argues this creates opportunity for real creators. In a world of infinite synthetic content, authentic voices become scarce resources. The bar rises from “can you create?” to “can you make something only you could create?”

That sounds optimistic. But it assumes platforms will successfully distinguish authentic creators from AI spam farms. Based on their track record with bots and engagement manipulation, that’s far from guaranteed.

Reality Becomes Opt-In

We’re headed somewhere weird. Photography used to be passive proof. You pointed a camera, captured reality, and everyone accepted that as evidence.

Now reality becomes something you actively verify. Was this image cryptographically signed? Does the account have credibility markers? Should I trust this source? Every piece of content requires investigation.

That’s exhausting. Most people won’t do it. They’ll fall back on tribal indicators—does this confirm what I already believe? Does it come from “my side”? Meanwhile, sophisticated AI spam will exploit those biases at scale.

Mosseri’s right that we’re “genetically predisposed to believing our eyes.” Fighting that instinct for every piece of content we consume sounds miserable. But the alternative—blindly trusting everything—seems worse.

The uncomfortable truth? We’re not ready for this shift. Platforms aren’t ready. Camera manufacturers aren’t ready. Users definitely aren’t ready. Yet here we are, tumbling into an era where nothing looks real but everything looks possible.
